The problem of achieving superior performance in robotic task planning has been addressed by researchers from Tsinghua University, Shanghai Artificial Intelligence Laboratory, and Shanghai Qi Zhi Institute by introducing Vision-Language Planning (VILA). VILA integrates vision and language understanding, using GPT-4V to encode profound semantic knowledge and solve complex planning problems, even in zero-shot scenarios. This method allows for exceptional capabilities in open-world manipulation tasks.

The study explores advancements in LLMs and the growing interest in expanding vision-language models (VLMs) for applications like visual question answering and robotics. It categorizes the application of pre-trained models into vision, language, and vision-language models. The focus is leveraging VLMs’ visually grounded attributes for addressing long-horizon planning challenges in robotics, revolutionizing high-level planning with commonsense knowledge. VILA, powered by GPT-4V, stands out for its excellence in open-world manipulation tasks, showcasing effectiveness in everyday functions without requiring additional training data or in-context examples.

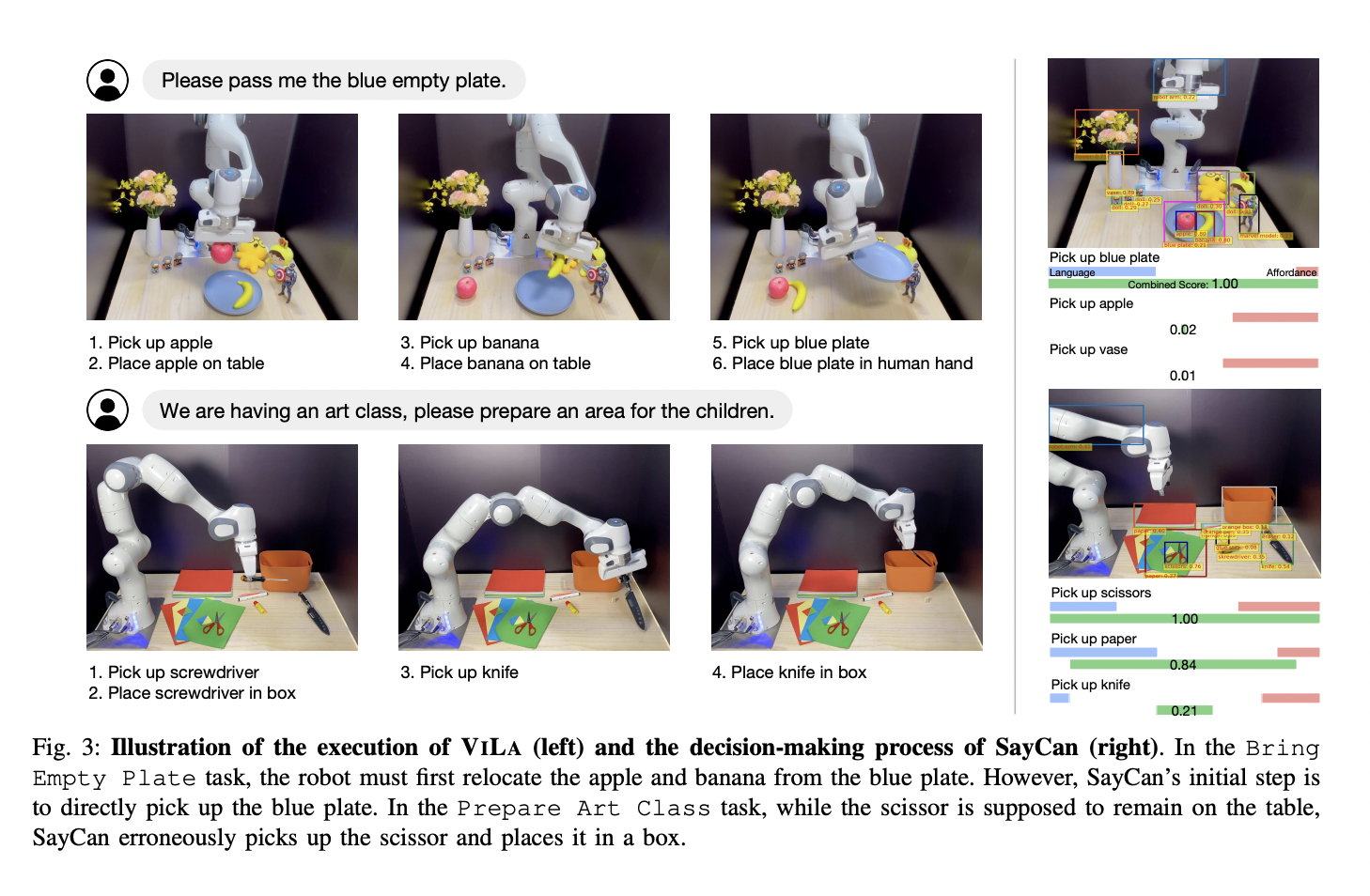

Scene-aware task planning, a key facet of human intelligence, relies on contextual understanding and adaptability. While LLMs excel at encoding semantic knowledge for complex task planning, their limitation lies in the need for world grounding for robots. Addressing this, Robotic VILA is an approach integrating vision and language processing. Unlike prior LLM-based methods, VILA prompts VLMs to generate actionable steps based on visual cues and high-level language instructions, aiming to create embodied agents, like robots, capable of human-like adaptability and long-horizon task planning in diverse scenes.

VILA is a planning methodology employing vision-language models as robot planners. VILA incorporates vision directly into reasoning, tapping into commonsense knowledge grounded in the visual realm. GPT-4V(ision), a pre-trained vision-language model, is the VLM for task planning. Evaluations in real-robot and simulated environments showcase VILA’s superiority over existing LLM-based planners in diverse open-world manipulation tasks. Unique features include spatial layout handling, object attribute consideration, and multimodal goal processing.

VILA outperforms existing LLM-based planners in open-world manipulation tasks. It excels in spatial layouts, object attributes, and multimodal goals. Powered by GPT-4V, it can solve complex planning problems, even in a zero-shot mode. VILA significantly reduces errors and performs outstanding tasks requiring spatial arrangements, object attributes, and commonsense knowledge.

In conclusion, VILA is a highly innovative robotic planning method that effectively translates high-level language instructions into actionable steps. Its ability to integrate perceptual data and comprehend commonsense knowledge in the visual world makes it superior to existing LLM-based planners, particularly in addressing complex, long-horizon tasks. However, it is important to note that VILA has some limitations, such as reliance on a black-box VLM and lack of in-context examples, which suggest that future enhancements are necessary to overcome these challenges.

Check out the Paper and Project. All credit for this research goes to the researchers of this project. Also, don’t forget to join our 33k+ ML SubReddit, 41k+ Facebook Community, Discord Channel, and Email Newsletter, where we share the latest AI research news, cool AI projects, and more.

If you like our work, you will love our newsletter..

Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.