The introduction of Augmented Reality (AR) and wearable Artificial Intelligence (AI) gadgets is a significant advancement in human-computer interaction. With AR and AI gadgets facilitating data collection, there are new possibilities to develop highly contextualized and personalized AI assistants that function as an extension of the wearer’s cognitive processes.

Existing multimodal AI assistants, such as GPT-4V, Gemini, and LLaVA, can integrate context from text, photos, and videos. However, their comprehension of context is restricted to data that users consciously enter or that is accessible via the public internet. Future AI assistants will be able to access and analyze contextual information gathered from the wearer’s activities and surroundings. Achieving this goal is a difficult technological task, as it requires vast and diverse datasets to support the training of such complex AI models.

Conventional datasets, mostly recorded with video cameras, fall short in several ways. They lack the multimodal sensor data that future augmented reality devices are expected to provide, such as accurate motion tracking, extensive environmental context, and detailed 3D spatial information. They also fail to capture the subtle personal context required to reliably infer a wearer’s behaviors or intents, and they do not offer temporal and spatial synchronization across the many required data types and sources.

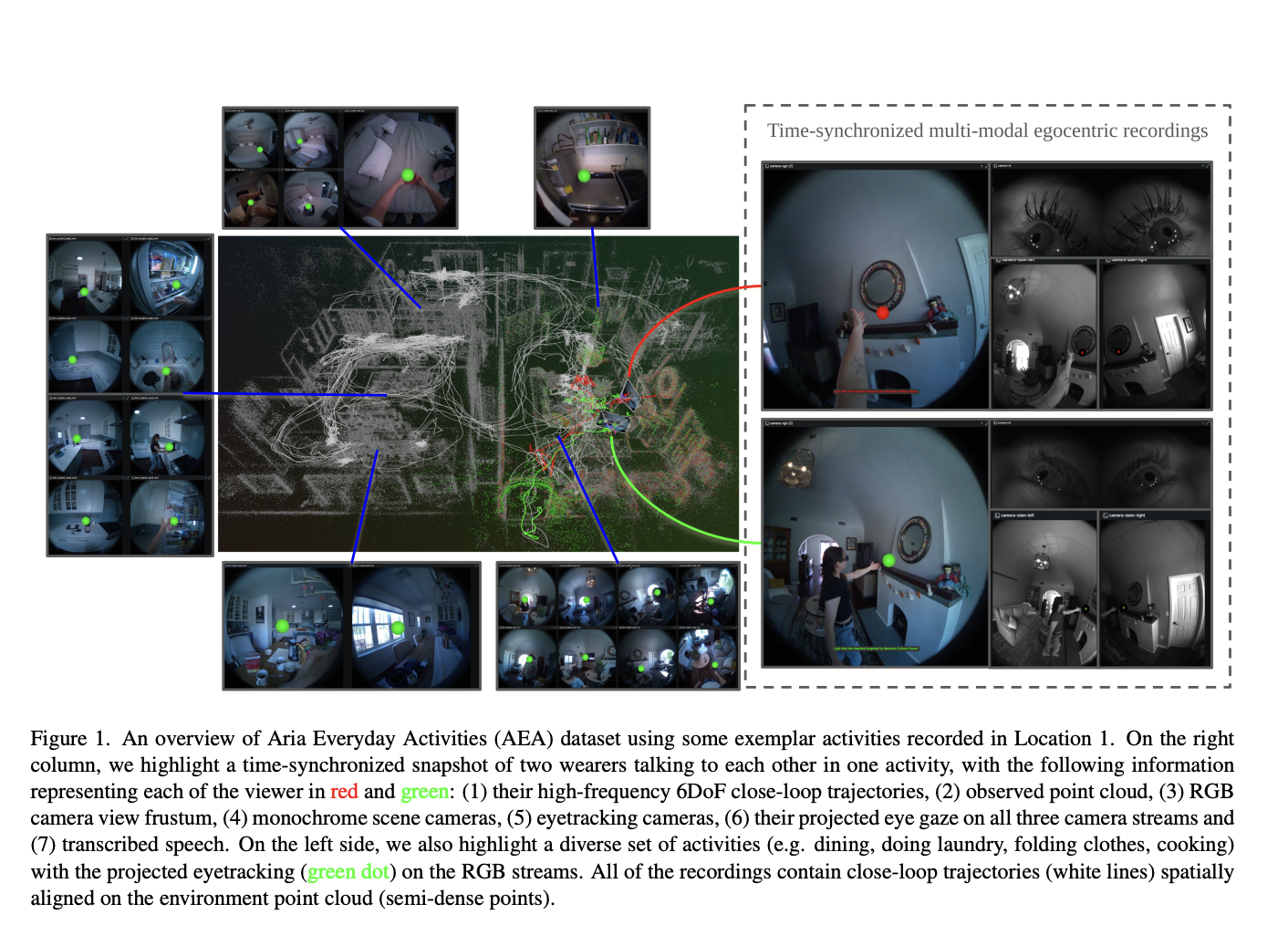

To address these limitations, a team of researchers from Meta has introduced the Aria Everyday Activities (AEA) dataset. The AEA dataset was recorded with the sensor platform of Project Aria devices. It provides a rich, multimodal, four-dimensional view of daily activities from the wearer’s point of view. It comprises spatial audio, high-definition video, eye-tracking data, inertial measurements, and more, all of which are aligned in a shared 3D coordinate system and synchronized in time.
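To make the idea of time synchronization concrete, here is a minimal sketch of how samples from two streams on a shared clock could be paired by nearest timestamp. It is an illustration only, not the dataset's tooling: the function names, the nanosecond timestamps, and the `max_gap_ns` tolerance are all assumptions made for this example.

```python
from bisect import bisect_left


def nearest_index(sorted_ts, t):
    """Return the index of the timestamp in sorted_ts that is closest to t."""
    if not sorted_ts:
        raise ValueError("sorted_ts must not be empty")
    i = bisect_left(sorted_ts, t)
    if i == 0:
        return 0
    if i == len(sorted_ts):
        return len(sorted_ts) - 1
    before, after = sorted_ts[i - 1], sorted_ts[i]
    # Ties go to the earlier sample.
    return i if (after - t) < (t - before) else i - 1


def align_streams(frame_ts, sample_ts, max_gap_ns):
    """Pair each frame timestamp with the index of the nearest sample.

    Both lists are assumed (hypothetically) to hold timestamps in
    nanoseconds on one shared clock, with sample_ts sorted ascending.
    A frame with no sample within max_gap_ns is paired with None.
    """
    pairs = []
    for t in frame_ts:
        j = nearest_index(sample_ts, t)
        pairs.append(j if abs(sample_ts[j] - t) <= max_gap_ns else None)
    return pairs


if __name__ == "__main__":
    video_ts = [0, 100, 200, 1000]   # hypothetical camera frame times
    gaze_ts = [5, 95, 210, 400]      # hypothetical eye-tracking sample times
    print(align_streams(video_ts, gaze_ts, max_gap_ns=20))
```

Returning `None` for frames without a nearby sample keeps gaps explicit instead of silently pairing data that was recorded far apart in time.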

The AEA dataset provides an abundance of egocentric multimodal information as it consists of 143 sequences of daily activities recorded by several people in five different indoor locales. The recordings contain a wide range of sensor data gathered using the advanced Project Aria glasses.

In addition to the raw sensor data required to create new AI capabilities, the dataset offers processed output from several machine perception services. This output serves as pseudo-ground truth that can be used to train and assess AI models. Multimodal sensor data is one of the main elements of the AEA dataset, offering a complete picture of the wearer’s environment and experiences.

The dataset has also been enhanced for research and applications by the addition of machine perception data. This machine perception data includes time-aligned voice transcriptions, per-frame 3D eye gaze vectors, high-frequency, globally aligned 3D trajectories, and detailed scene point clouds.
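As a rough illustration of how these machine perception outputs could be combined, the sketch below rotates a gaze direction from the device frame into the world frame using a device pose, then picks the scene point that lies closest to the resulting gaze ray. Everything here is an assumption made for the example: the function names, the pose convention (a 3x3 rotation `R_world_device` plus a translation `t_world_device`), and the brute-force search are hypothetical and are not taken from the dataset's own tools.

```python
import math


def rotate(R, v):
    """Multiply a 3x3 rotation matrix (nested sequences) by a 3-vector."""
    return tuple(sum(R[r][c] * v[c] for c in range(3)) for r in range(3))


def gaze_ray_in_world(R_world_device, t_world_device, gaze_dir_device):
    """Return (origin, unit direction) of the gaze ray in world coordinates.

    Assumes the pose maps device coordinates to world coordinates and
    that the ray starts at the device origin.
    """
    d = rotate(R_world_device, gaze_dir_device)
    norm = math.sqrt(sum(x * x for x in d))
    if norm == 0:
        raise ValueError("gaze direction must be non-zero")
    return tuple(t_world_device), tuple(x / norm for x in d)


def closest_point_to_ray(origin, direction, points):
    """Return the index of the point nearest to the ray, or None.

    Points behind the ray origin are ignored. Distances are compared
    as squared perpendicular distances to avoid a square root.
    """
    best, best_d2 = None, math.inf
    for i, p in enumerate(points):
        rel = tuple(p[k] - origin[k] for k in range(3))
        along = sum(rel[k] * direction[k] for k in range(3))
        if along <= 0:
            continue
        perp2 = sum(x * x for x in rel) - along * along
        if perp2 < best_d2:
            best, best_d2 = i, perp2
    return best


if __name__ == "__main__":
    # Hypothetical pose: 90 degree rotation about z, device at (1, 0, 0).
    R = [[0, -1, 0], [1, 0, 0], [0, 0, 1]]
    origin, direction = gaze_ray_in_world(R, (1, 0, 0), (1, 0, 0))
    cloud = [(1, 5, 0.1), (1, -5, 0), (3, 2, 0)]  # made-up scene points
    print(direction, closest_point_to_ray(origin, direction, cloud))
```

A real pipeline would likely use a spatial index rather than a linear scan over the point cloud, but the geometry is the same.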

The researchers also present several research applications that the dataset makes possible, including neural scene reconstruction and prompted segmentation. Neural scene reconstruction demonstrates the dataset’s potential to provide advanced spatial comprehension by building detailed 3D models of environments from egocentric data. Prompted segmentation, in turn, shows how the dataset may be used to create algorithms that recognize and track objects in the surrounding space using cues such as voice instructions and eye gaze.

In conclusion, the Aria Everyday Activities (AEA) dataset is a noteworthy addition to the domain of egocentric multimodal datasets.

Check out the Paper and Github. All credit for this research goes to the researchers of this project.

Tanya Malhotra is a final year undergrad from the University of Petroleum & Energy Studies, Dehradun, pursuing BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning.

She is a Data Science enthusiast with good analytical and critical thinking, along with an ardent interest in acquiring new skills, leading groups, and managing work in an organized manner.