Oversampling and Undersampling, Explained: A Visual Guide with Mini 2D Dataset


DATA PREPROCESSING

Artificially generating and deleting data for the greater good


Collecting a dataset where every class has exactly the same number of examples can be a challenge. In reality, things are rarely perfectly balanced, and when you are building a classification model, this can be an issue. When a model is trained on a dataset where one class has more examples than the others, it usually becomes better at predicting the bigger groups and worse at predicting the smaller ones. To help with this issue, we can use tactics like oversampling and undersampling: creating more examples of the smaller group or removing some examples from the bigger group.

There are many different oversampling and undersampling methods out there (with intimidating names like SMOTE, ADASYN, and Tomek Links), but there don’t seem to be many resources that visually compare how they work. So, here, we will use one simple 2D dataset to show how the data changes after applying each method, so we can see how different their outputs are. You will see in the visuals that these approaches give different solutions, and who knows, one might be suitable for your specific machine learning challenge!

Definition

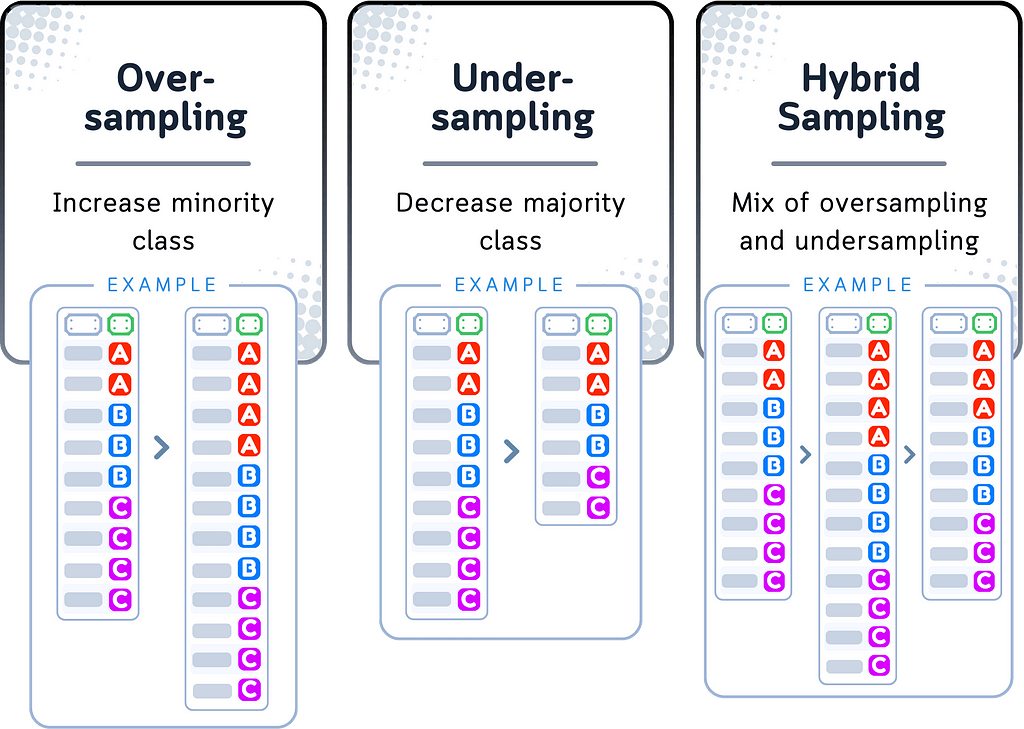

Oversampling

Oversampling makes a dataset more balanced when one group has far fewer examples than the other. It works by adding more examples to the smaller group, either by copying existing ones or by generating new ones from them. This helps the dataset represent both groups more equally.

Undersampling

Undersampling, on the other hand, works by deleting some of the examples from the bigger group until it’s about the same size as the smaller group. In the end, the dataset is smaller, sure, but both groups will have a more similar number of examples.

Hybrid Sampling

Combining oversampling and undersampling can be called “hybrid sampling”. It increases the size of the smaller group by adding examples to it, and it also shrinks the bigger group by removing some of its examples. It tries to create a dataset that is more balanced: not too big and not too small.

Dataset Used

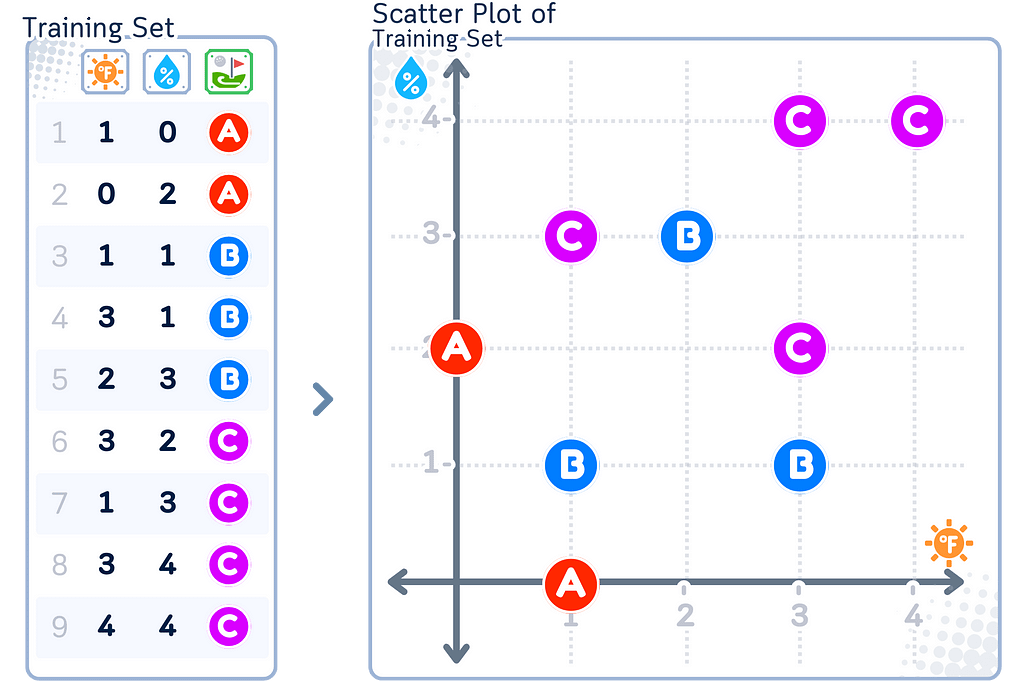

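Let’s use a simple artificial golf dataset to show both oversampling and undersampling. This dataset shows what kind of golf activity a person does in a particular weather condition. It has two numeric features, Temperature and Humidity, and one target, Golf Activity (A = Normal Course, B = Drive Range, C = Indoor Golf). The training dataset has 2 dimensions and 9 samples.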

⚠️ Note that while this small dataset is good for understanding the concepts, in real applications you’d want much larger datasets before applying these techniques, as sampling with too little data can lead to unreliable results.
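To make the imbalance concrete, here is a minimal sketch that counts the examples per class. It only uses the labels as they appear in the code summary at the end of this article; the variable names are mine.

```python
from collections import Counter

# Labels of the 9-sample golf dataset, as listed in the article's code summary.
activity = ['A', 'A', 'B', 'B', 'B', 'C', 'C', 'C', 'C']

counts = Counter(activity)
majority_size = max(counts.values())

for label, n in sorted(counts.items()):
    # Share of the dataset and how far the class is from the largest one.
    print(f"{label}: {n} samples ({n / len(activity):.0%}), "
          f"{majority_size - n} short of the largest class")
```

Class A is the smallest group and class C the biggest, so A is the one that oversampling grows and C the one that undersampling shrinks.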

Oversampling Methods

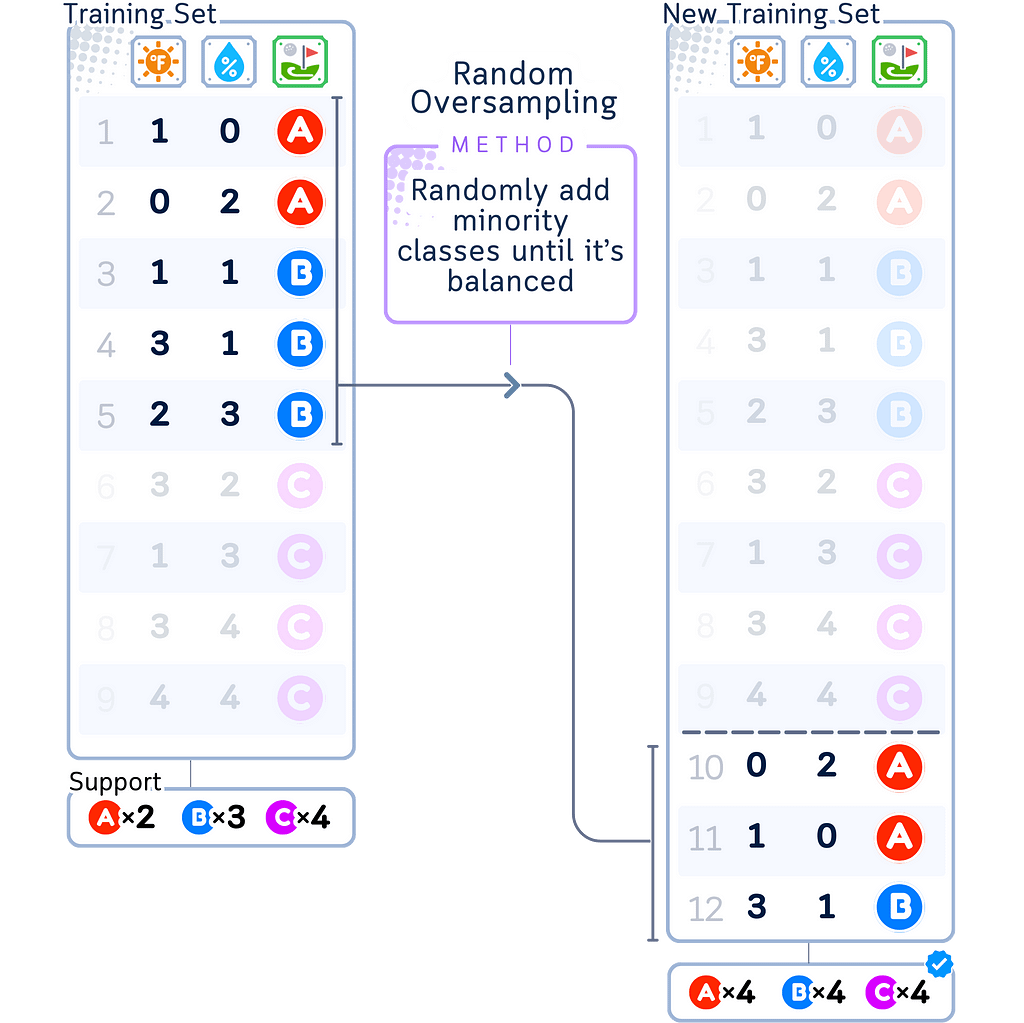

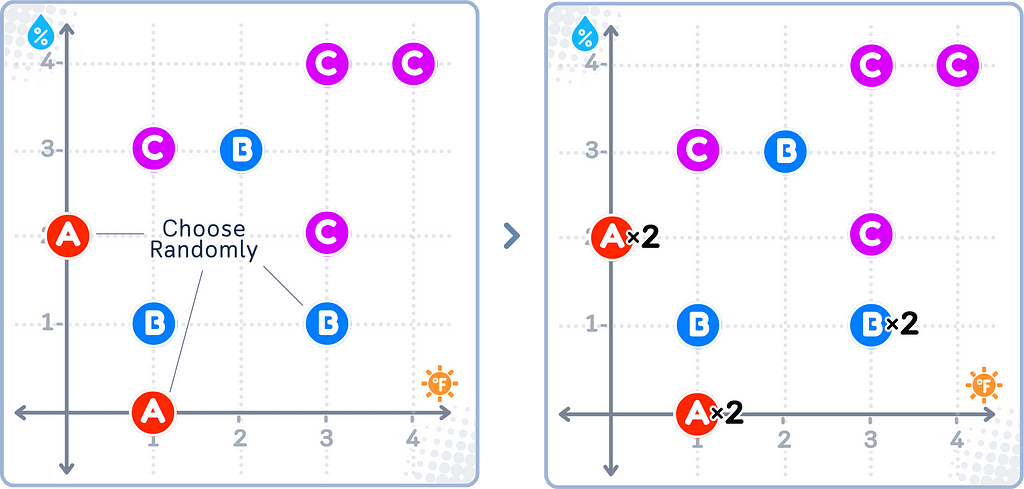

Random Oversampling

Random Oversampling is a simple way to make the smaller group bigger. It works by making duplicates of the examples from the smaller group until all the classes are balanced.

Best for very small datasets that need to be balanced quickly

Not recommended for complicated datasets

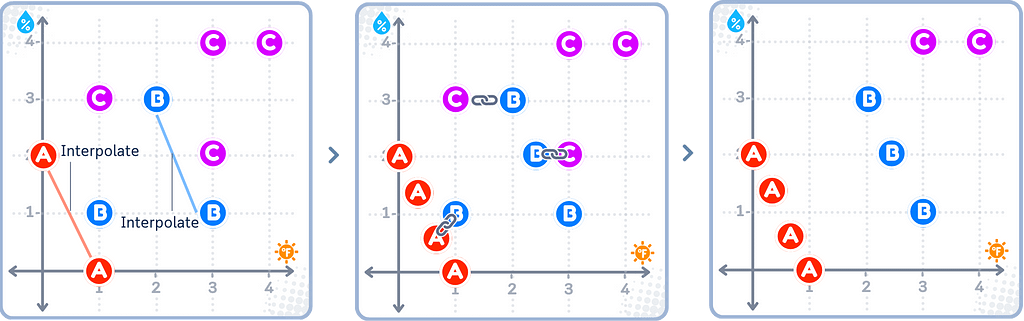
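The idea can be sketched in a few lines of plain Python. This is a from-scratch illustration, not the imblearn implementation; `random_oversample` and its `seed` parameter are hypothetical names chosen for this example.

```python
import random
from collections import Counter

def random_oversample(X, y, seed=0):
    """Duplicate randomly chosen rows of each smaller class until every
    class has as many rows as the largest one."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = max(counts.values())
    X_out, y_out = list(X), list(y)
    for label, n in counts.items():
        rows = [x for x, lab in zip(X, y) if lab == label]
        # Draw with replacement, so the same row may be copied more than once.
        for _ in range(target - n):
            X_out.append(rng.choice(rows))
            y_out.append(label)
    return X_out, y_out

# (Temperature, Humidity) pairs and labels from the article's dataset.
X = [(1, 0), (0, 2), (1, 1), (3, 1), (2, 3), (3, 2), (1, 3), (3, 4), (4, 4)]
y = ['A', 'A', 'B', 'B', 'B', 'C', 'C', 'C', 'C']

X_res, y_res = random_oversample(X, y)
print(Counter(y_res))
```

No new locations appear in the feature space: every added row is an exact copy of an existing one, which is why this method adds no variety to the data.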

SMOTE

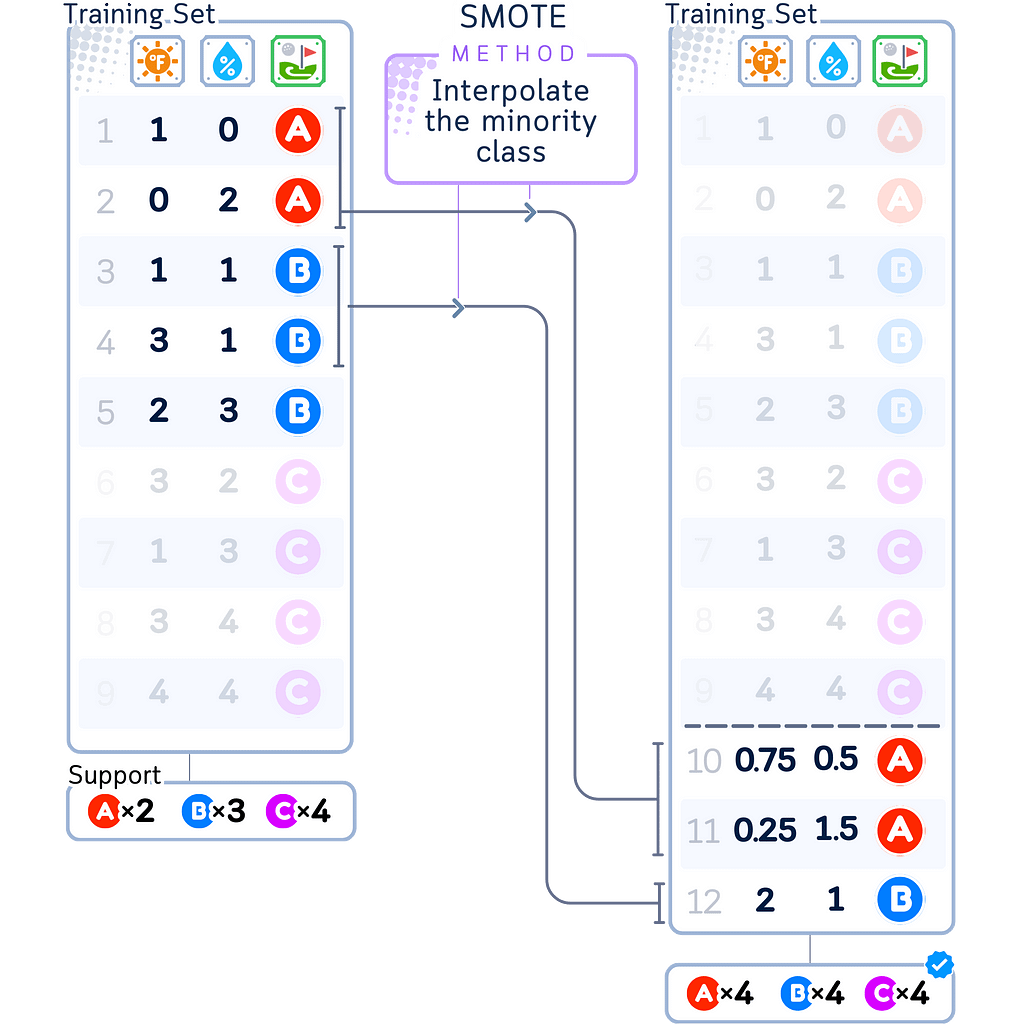

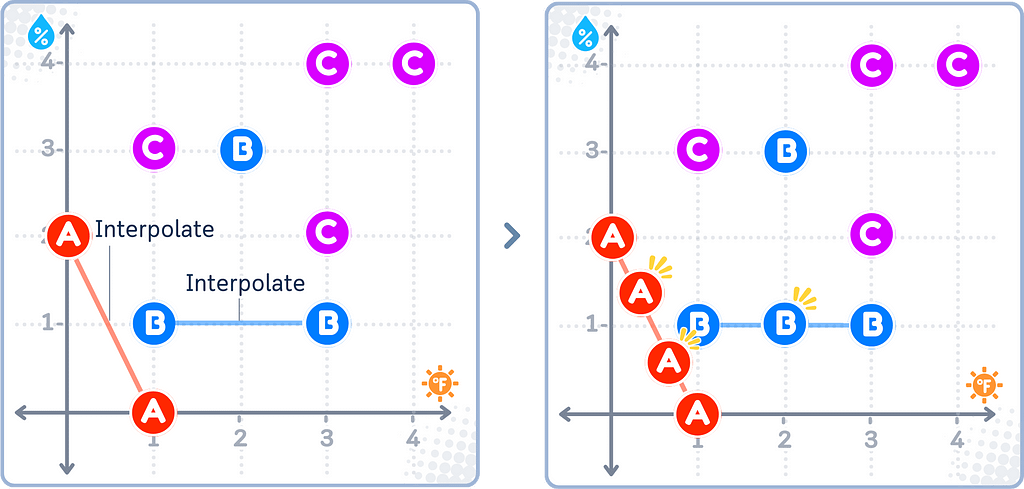

SMOTE (Synthetic Minority Over-sampling Technique) is an oversampling technique that makes new examples by interpolating between existing examples of the smaller group. Unlike random oversampling, it doesn’t just copy what’s there; it picks an example from the smaller group, picks one of its nearest neighbors from the same group, and places a new example somewhere on the line between them.

Best when you have a decent amount of examples to work with and need variety in your data

Not recommended if you have very few examples

Not recommended if data points are too scattered or noisy

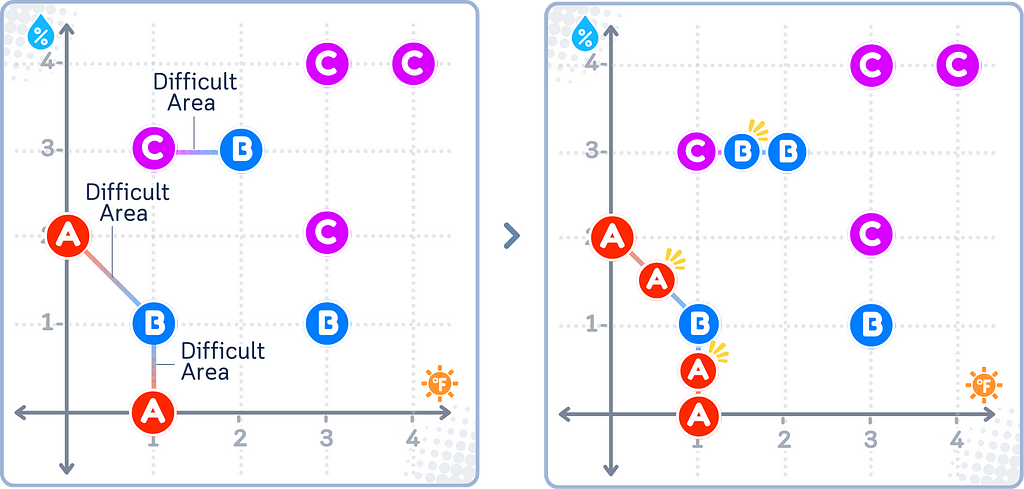

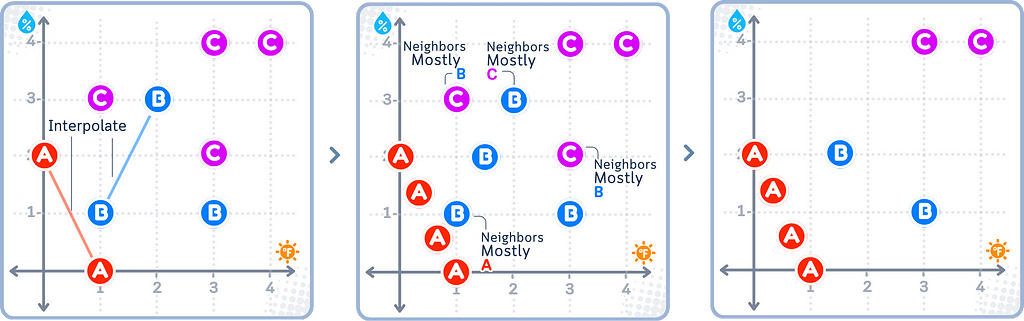
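The interpolation step can be sketched as follows. This is a simplified illustration under my own assumptions, not the imblearn code: `smote_sketch` is a hypothetical helper, and `k=1` is used because class A has only two points in this dataset.

```python
import math
import random

def smote_sketch(points, n_new, k=1, seed=0):
    """Create n_new synthetic points by interpolating between same-class rows."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(points))
        base = points[i]
        # k nearest same-class neighbors of the base point (excluding itself).
        others = points[:i] + points[i + 1:]
        neighbors = sorted(others, key=lambda p: math.dist(base, p))[:k]
        partner = rng.choice(neighbors)
        # A random position on the segment between base and partner.
        gap = rng.random()
        synthetic.append(tuple(b + gap * (q - b) for b, q in zip(base, partner)))
    return synthetic

# The two class A points from the article's dataset.
class_a = [(1, 0), (0, 2)]
new_points = smote_sketch(class_a, n_new=2)
for temperature, humidity in new_points:
    print(f"Temperature={temperature:.2f}, Humidity={humidity:.2f}")
```

Every synthetic point lands on the line between two real class A points, so the new examples add variety but never leave the region the smaller group already spans.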

ADASYN

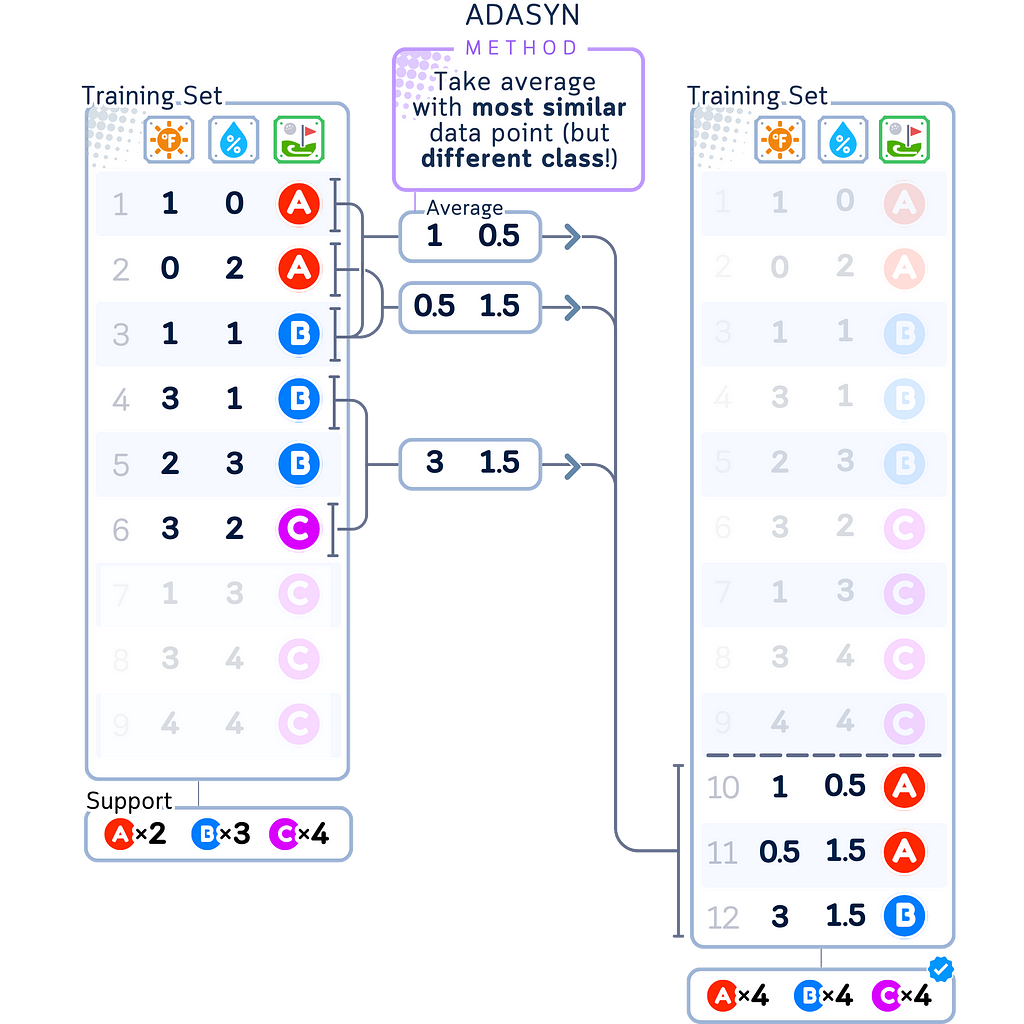

ADASYN (Adaptive Synthetic Sampling) is like SMOTE but focuses on making new examples in the harder-to-learn parts of the smaller group. It finds the examples that are trickiest to classify, meaning those surrounded by many neighbors from other groups, and makes more new points around those. This helps the model better understand the challenging areas.

Best when some parts of your data are harder to classify than others

Best for complex datasets with challenging areas

Not recommended if your data is fairly simple and straightforward
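What makes ADASYN “adaptive” is how it decides where to put the new points. The sketch below covers only that allocation step, assuming difficulty is measured as the share of a point’s k nearest neighbors that belong to other groups. `adasyn_allocation` is a hypothetical helper, and the generation step (interpolation, as in SMOTE) is left out.

```python
import math

def adasyn_allocation(X, y, minority, n_new, k=3):
    """Return how many synthetic points to create around each minority row."""
    minority_rows = [i for i, lab in enumerate(y) if lab == minority]
    ratios = []
    for i in minority_rows:
        # k nearest neighbors of row i among all other rows, any class.
        others = [j for j in range(len(X)) if j != i]
        nearest = sorted(others, key=lambda j: math.dist(X[i], X[j]))[:k]
        # Share of those neighbors that belong to a different class.
        ratios.append(sum(y[j] != minority for j in nearest) / k)
    total = sum(ratios)
    if total == 0:
        # No point has other-class neighbors: spread the new points evenly.
        weights = [1 / len(ratios)] * len(ratios)
    else:
        weights = [r / total for r in ratios]
    # Rounding means the counts may not always add up to exactly n_new.
    return {X[i]: round(w * n_new) for i, w in zip(minority_rows, weights)}

X = [(1, 0), (0, 2), (1, 1), (3, 1), (2, 3), (3, 2), (1, 3), (3, 4), (4, 4)]
y = ['A', 'A', 'B', 'B', 'B', 'C', 'C', 'C', 'C']

allocation = adasyn_allocation(X, y, minority='A', n_new=2)
print(allocation)
```

Points with a higher share of other-class neighbors get a larger slice of the new examples, while a point sitting comfortably among its own group gets few or none.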

Undersampling Methods

Undersampling shrinks the bigger group to make it closer in size to the smaller group. There are several ways of doing this:

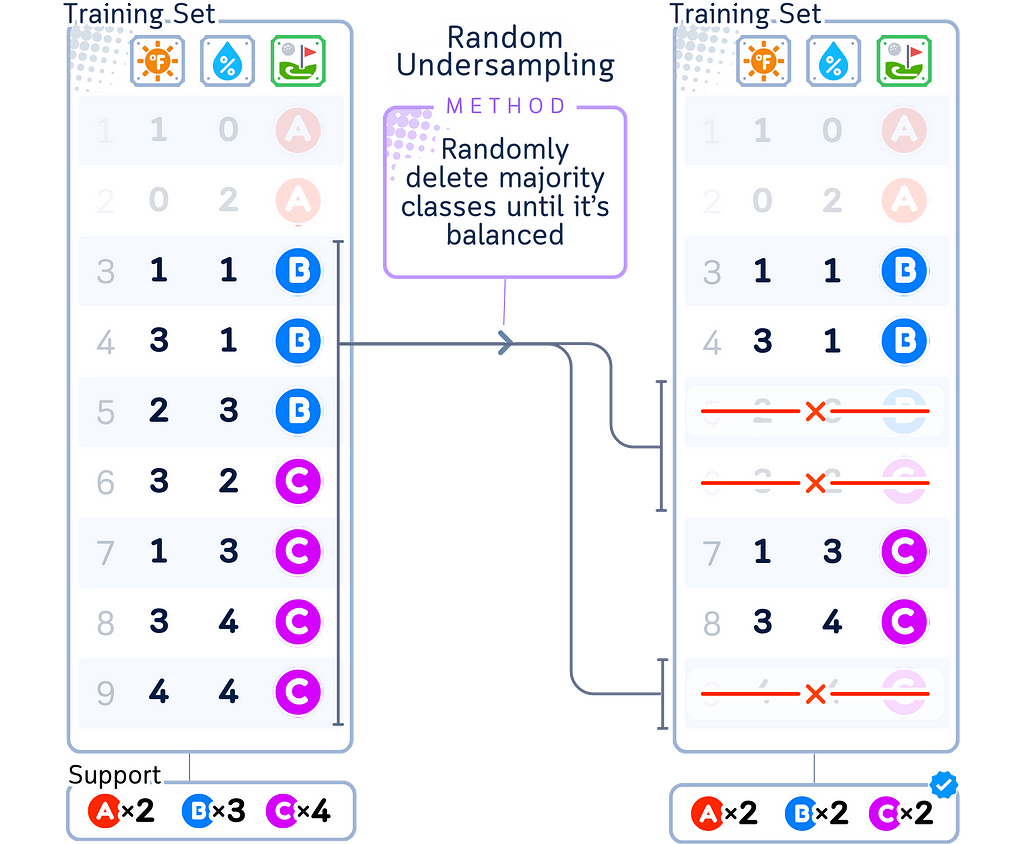

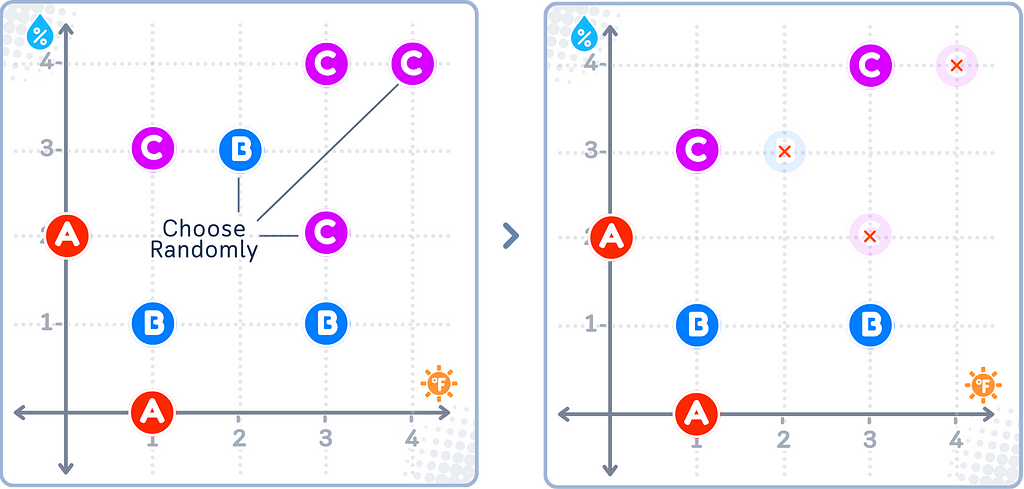

Random Undersampling

Random Undersampling removes examples from the bigger group at random until it’s the same size as the smaller group. Just like random oversampling, the method is pretty simple, but it might get rid of important information that shows how the groups differ.

Best for very large datasets with lots of repetitive examples

Best when you need a quick, simple fix

Not recommended if every example in your bigger group is important

Not recommended if you can’t afford losing any information

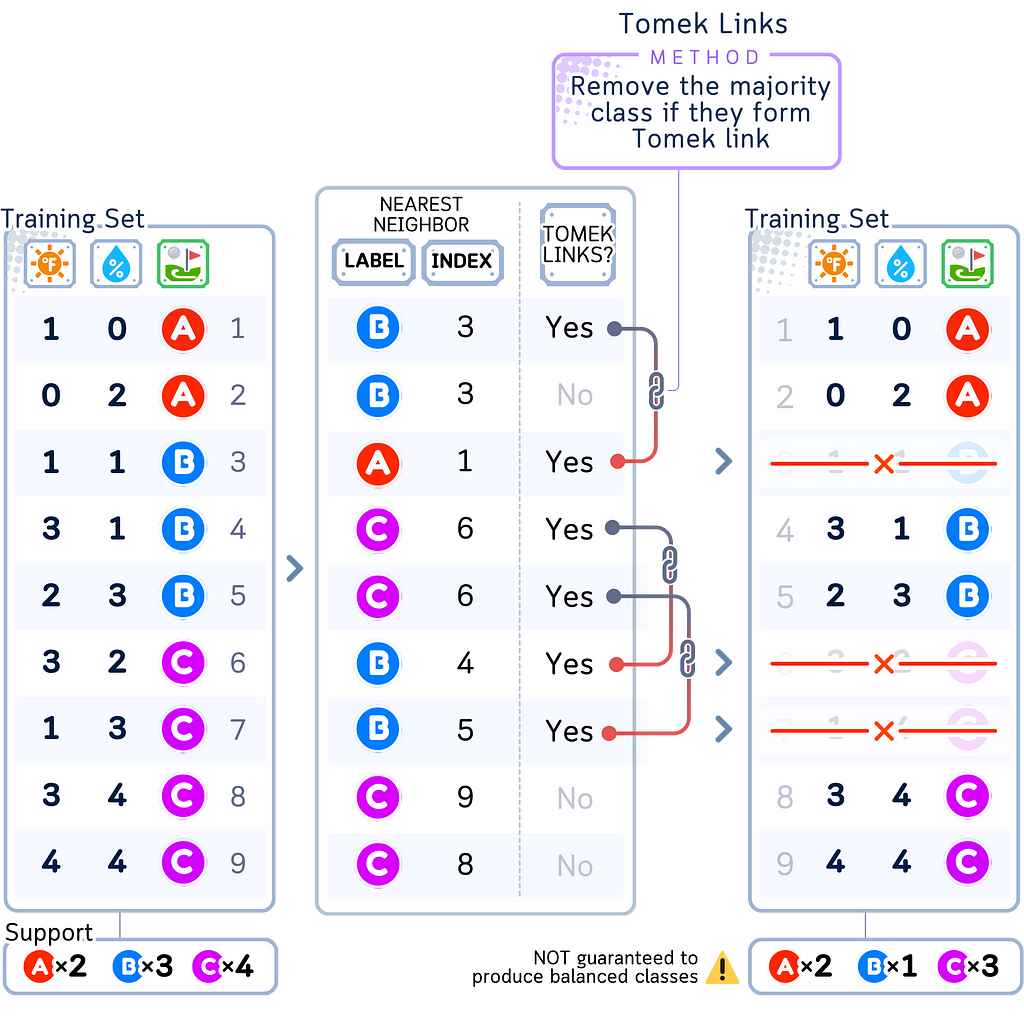

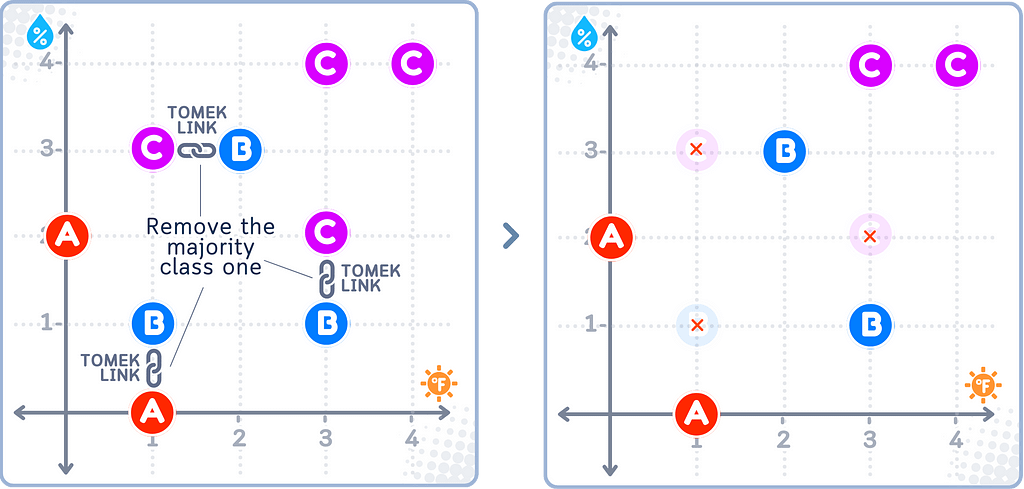
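As a rough from-scratch illustration (again, not the imblearn implementation), random undersampling boils down to drawing a fixed number of rows per class without replacement. `random_undersample` is a hypothetical name.

```python
import random
from collections import Counter

def random_undersample(X, y, seed=0):
    """Keep a random subset of each class, sized to match the smallest class."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = min(counts.values())
    keep = []
    for label in counts:
        rows = [i for i, lab in enumerate(y) if lab == label]
        # Sample without replacement: each kept row is a distinct original row.
        keep.extend(rng.sample(rows, target))
    keep.sort()
    return [X[i] for i in keep], [y[i] for i in keep]

X = [(1, 0), (0, 2), (1, 1), (3, 1), (2, 3), (3, 2), (1, 3), (3, 4), (4, 4)]
y = ['A', 'A', 'B', 'B', 'B', 'C', 'C', 'C', 'C']

X_res, y_res = random_undersample(X, y)
print(Counter(y_res))
```

Which B and C rows survive depends purely on the random seed, which is exactly why useful examples can be lost by chance.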

Tomek Links

Tomek Links is an undersampling method that makes the “lines” between groups clearer. It searches for pairs of examples from different groups that are really alike. When it finds a pair where the examples are each other’s closest neighbors but belong to different groups, it gets rid of the example from the bigger group.

Best when your groups overlap too much

Best for cleaning up messy or noisy data

Best when you need clear boundaries between groups

Not recommended if your groups are already well separated

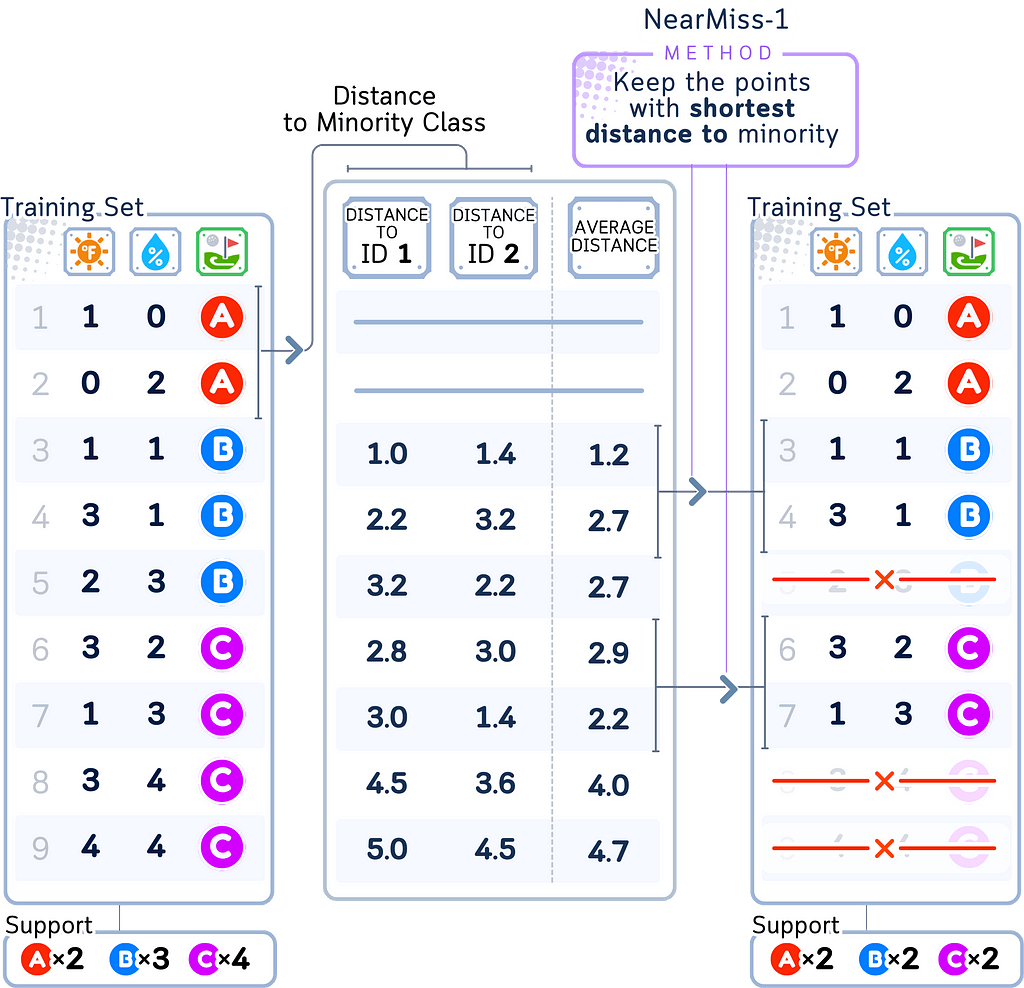
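Here is a small sketch of how such pairs can be found on the golf dataset. It follows the rule described above (drop the member that belongs to the bigger group); library implementations may choose which side to drop differently. The helper names are hypothetical.

```python
import math
from collections import Counter

def nearest(i, X):
    """Index of the row closest to row i (excluding i itself)."""
    return min((j for j in range(len(X)) if j != i),
               key=lambda j: math.dist(X[i], X[j]))

def tomek_links(X, y):
    """Pairs (i, j) that are each other's nearest neighbor but differ in class."""
    links = []
    for i in range(len(X)):
        j = nearest(i, X)
        if i < j and nearest(j, X) == i and y[i] != y[j]:
            links.append((i, j))
    return links

X = [(1, 0), (0, 2), (1, 1), (3, 1), (2, 3), (3, 2), (1, 3), (3, 4), (4, 4)]
y = ['A', 'A', 'B', 'B', 'B', 'C', 'C', 'C', 'C']

links = tomek_links(X, y)
counts = Counter(y)
# From each link, drop the member that belongs to the bigger class.
removed = sorted(i if counts[y[i]] > counts[y[j]] else j for i, j in links)

print("Tomek links:", links)
print("Removed rows:", [(X[i], y[i]) for i in removed])
```

Only points that sit right at the border between two groups are affected; everything deeper inside a group stays untouched.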

Near Miss

Near Miss is a set of undersampling techniques that follow different rules:

- Near Miss-1: Keeps examples from the bigger group that have the smallest average distance to their closest neighbors in the smaller group.

- Near Miss-2: Keeps examples from the bigger group that have the smallest average distance to their farthest neighbors in the smaller group.

- Near Miss-3: Works in two steps. First, for each example in the smaller group, it shortlists that example’s closest neighbors from the bigger group. Then, from the shortlist, it keeps the examples that have the largest average distance to their closest neighbors in the smaller group.

The main idea here is to keep the most informative examples from the bigger group and get rid of the ones that aren’t as important.

Best when you want control over which examples to keep

Not recommended if you need a simple, quick solution

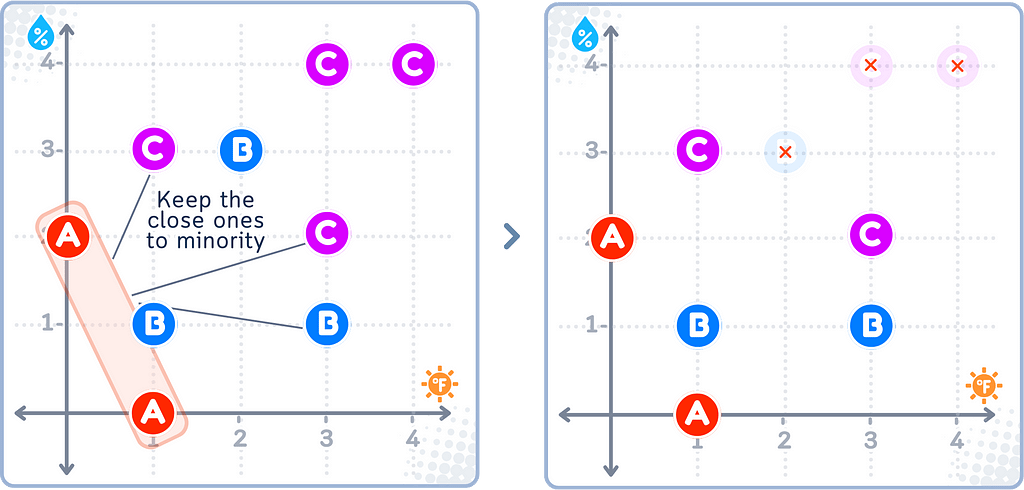

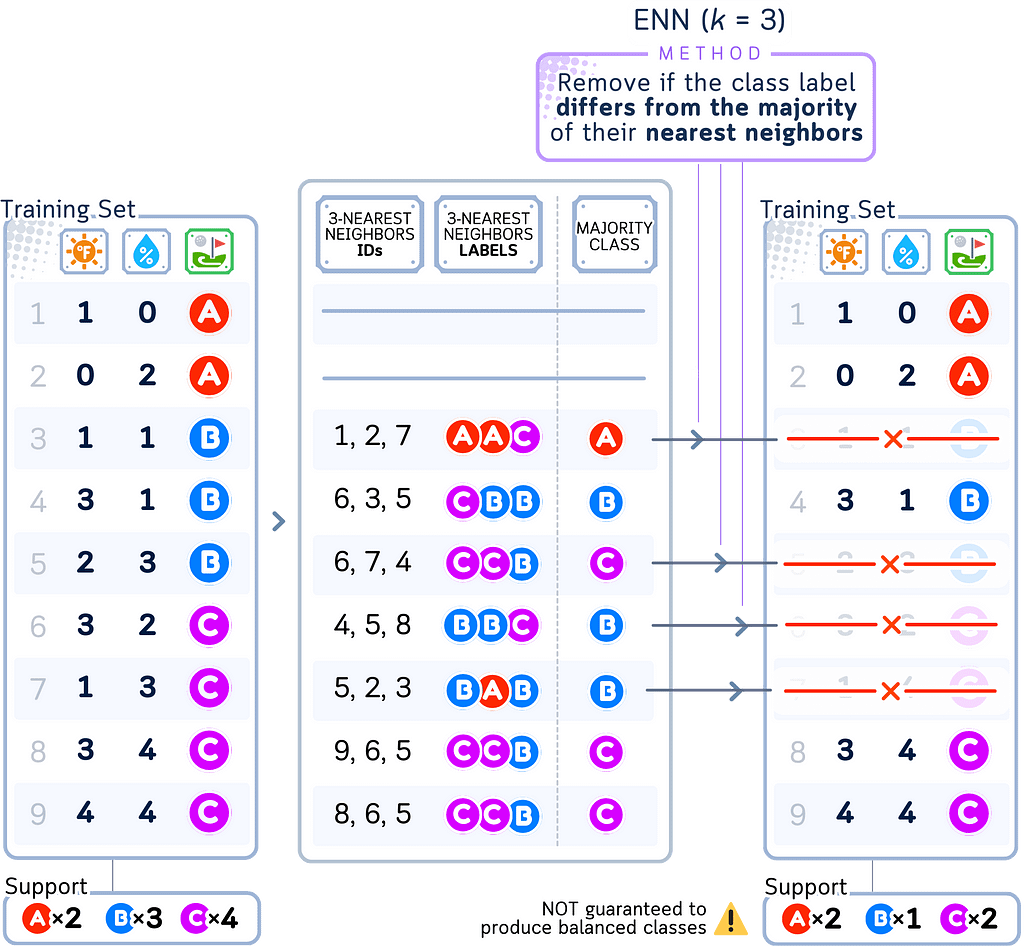

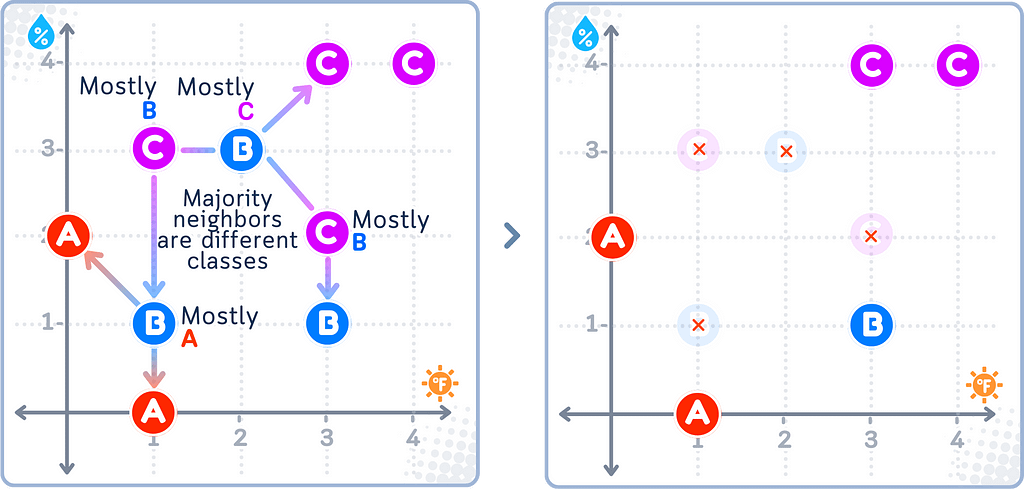
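To show what “closest to the smaller group” means in practice, here is a minimal sketch of the first variant, Near Miss-1. It assumes closeness is measured as the average distance to the k nearest examples of the smallest class, and it uses `k=2` because class A has only two points. `near_miss_1` is a hypothetical helper, not the imblearn class.

```python
import math
from collections import Counter

def near_miss_1(X, y, k=2):
    """Shrink every bigger class to the size of the smallest one, keeping the
    rows with the smallest average distance to their k nearest minority rows."""
    counts = Counter(y)
    minority = min(counts, key=counts.get)
    target = counts[minority]
    minority_rows = [x for x, lab in zip(X, y) if lab == minority]

    def avg_dist_to_closest(x):
        dists = sorted(math.dist(x, m) for m in minority_rows)[:k]
        return sum(dists) / len(dists)

    X_out, y_out = list(minority_rows), [minority] * target
    for label in counts:
        if label == minority:
            continue
        rows = [x for x, lab in zip(X, y) if lab == label]
        kept = sorted(rows, key=avg_dist_to_closest)[:target]
        X_out.extend(kept)
        y_out.extend([label] * len(kept))
    return X_out, y_out

X = [(1, 0), (0, 2), (1, 1), (3, 1), (2, 3), (3, 2), (1, 3), (3, 4), (4, 4)]
y = ['A', 'A', 'B', 'B', 'B', 'C', 'C', 'C', 'C']

X_res, y_res = near_miss_1(X, y)
print(list(zip(X_res, y_res)))
```

The C points far in the corner, (3, 4) and (4, 4), are the ones dropped, because they are the farthest from class A.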

ENN

The Edited Nearest Neighbors (ENN) method gets rid of examples that are probably noise or outliers. For each example in the bigger group, it checks whether most of its closest neighbors belong to the same group. If they don’t, it removes that example. This helps create cleaner boundaries between the groups.

Best for cleaning up messy data

Best when you need to remove outliers

Best for creating cleaner group boundaries

Not recommended if your data is already clean and well-organized
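The neighbor check can be sketched like this. It is an illustration under a few assumptions of mine: `k=3` neighbors, a simple majority vote, every class except the smallest one treated as a “bigger group”, and neighbors always looked up in the original dataset. `enn_removals` is a hypothetical name.

```python
import math
from collections import Counter

def enn_removals(X, y, k=3):
    """Indices of bigger-group rows whose k nearest neighbors mostly disagree
    with the row's own label."""
    counts = Counter(y)
    smallest = min(counts, key=counts.get)
    removed = []
    for i, (x, lab) in enumerate(zip(X, y)):
        if lab == smallest:
            continue  # only the bigger groups are cleaned
        others = [j for j in range(len(X)) if j != i]
        nearest = sorted(others, key=lambda j: math.dist(x, X[j]))[:k]
        same = sum(y[j] == lab for j in nearest)
        # Keep the row only if most of its neighbors share its label.
        if same <= k / 2:
            removed.append(i)
    return removed

X = [(1, 0), (0, 2), (1, 1), (3, 1), (2, 3), (3, 2), (1, 3), (3, 4), (4, 4)]
y = ['A', 'A', 'B', 'B', 'B', 'C', 'C', 'C', 'C']

removed = enn_removals(X, y)
print("Removed rows:", [(X[i], y[i]) for i in removed])
```

On a dataset this tiny, most B and C points have neighbors from other groups, so ENN removes a large share of them. This is a good reminder of why the method is better suited to larger datasets.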

Hybrid Sampling Methods

SMOTETomek

SMOTETomek works by first creating new examples for the smaller group using SMOTE, then cleaning up messy boundaries by removing “confusing” examples using Tomek Links. This helps create a more balanced dataset with clearer boundaries and less noise.

Best for severely imbalanced data

Best when you need both more examples and cleaner boundaries

Best when dealing with noisy, overlapping groups

Not recommended if your data is already clean and well-organized

Not recommended for small datasets
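The two stages can be chained in a short sketch. Both steps are simplified stand-ins written for this example (`smote_step` and `tomek_step` are hypothetical helpers), and dropping both members of each Tomek link is a choice made here because the groups are already balanced after the first stage.

```python
import math
import random
from collections import Counter

def smote_step(X, y, k=1, seed=0):
    """Grow every class to the size of the largest by interpolation.
    Assumes each class has at least two rows."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = max(counts.values())
    X_out, y_out = list(X), list(y)
    for label, n in counts.items():
        rows = [x for x, lab in zip(X, y) if lab == label]
        for _ in range(target - n):
            i = rng.randrange(len(rows))
            others = rows[:i] + rows[i + 1:]
            nearest = sorted(others, key=lambda p: math.dist(rows[i], p))[:k]
            partner = rng.choice(nearest)
            gap = rng.random()
            X_out.append(tuple(a + gap * (b - a) for a, b in zip(rows[i], partner)))
            y_out.append(label)
    return X_out, y_out

def tomek_step(X, y):
    """Drop both members of every Tomek link (mutual nearest neighbors with
    different labels)."""
    def nearest(i):
        return min((j for j in range(len(X)) if j != i),
                   key=lambda j: math.dist(X[i], X[j]))
    drop = set()
    for i in range(len(X)):
        j = nearest(i)
        if nearest(j) == i and y[i] != y[j]:
            drop.update((i, j))
    keep = [i for i in range(len(X)) if i not in drop]
    return [X[i] for i in keep], [y[i] for i in keep]

X = [(1, 0), (0, 2), (1, 1), (3, 1), (2, 3), (3, 2), (1, 3), (3, 4), (4, 4)]
y = ['A', 'A', 'B', 'B', 'B', 'C', 'C', 'C', 'C']

X_bal, y_bal = smote_step(X, y)            # stage 1: oversample
X_clean, y_clean = tomek_step(X_bal, y_bal)  # stage 2: clean the borders
print("After SMOTE step:", Counter(y_bal))
print("After Tomek step:", Counter(y_clean))
```

Note that the cleaning stage can leave the groups slightly uneven again, so the final dataset is cleaner but not guaranteed to be perfectly balanced.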

SMOTEENN

SMOTEENN works by first creating new examples for the smaller group using SMOTE, then cleaning up both groups by removing examples that don’t fit well with their neighbors using ENN. Just like SMOTETomek, this helps create a cleaner dataset with clearer borders between the groups.

Best for cleaning up both groups at once

Best when you need more examples but cleaner data

Best when dealing with lots of outliers

Not recommended if your data is already clean and well-organized

Not recommended for small datasets

⚠️ Risks when using Resampling methods

Resampling methods can be helpful but there are some potential risks:

Oversampling:

- Making artificial samples can create false patterns that don’t exist in real life.

- Models can become too confident because of the synthetic samples. This can lead to serious failures when the model is applied to real situations.

- There’s a risk of data leakage if resampling is done incorrectly (for example, before splitting the data for cross-validation).

Undersampling:

- You may permanently lose important information.

- You can accidentally destroy important boundaries between classes, which leads the model to misunderstand the problem.

- You may create artificial class distributions that are too different from real-world conditions.

Hybrid Methods:

- Combining errors from both methods can make things worse instead of better.

When using resampling methods, it’s hard to find the right balance between fixing class imbalance and preserving the important patterns in your data. In my experience, incorrect resampling can actually harm model performance rather than improve it.
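One of the easiest mistakes to avoid is the data leakage risk listed above. The sketch below shows the safe order of operations with a plain random oversampler: split first, then resample only the training part. The function name and the chosen hold-out rows are assumptions made for illustration.

```python
import random
from collections import Counter

def split_then_oversample(X, y, test_idx, seed=0):
    """Hold out the test rows first, then balance only the training rows."""
    rng = random.Random(seed)
    test_idx = set(test_idx)
    rows = list(zip(X, y))
    train = [row for i, row in enumerate(rows) if i not in test_idx]
    test = [row for i, row in enumerate(rows) if i in test_idx]

    counts = Counter(lab for _, lab in train)
    target = max(counts.values())
    balanced = list(train)
    for label, n in counts.items():
        same_class = [row for row in train if row[1] == label]
        # Copies come from training rows only, never from the held-out rows.
        balanced.extend(rng.choice(same_class) for _ in range(target - n))
    return balanced, test

X = [(1, 0), (0, 2), (1, 1), (3, 1), (2, 3), (3, 2), (1, 3), (3, 4), (4, 4)]
y = ['A', 'A', 'B', 'B', 'B', 'C', 'C', 'C', 'C']

# Hypothetical hold-out: one row from each class.
train_balanced, test = split_then_oversample(X, y, test_idx=[1, 4, 8])
print("Train:", Counter(lab for _, lab in train_balanced))
print("Test: ", test)
```

If the resampling happened before the split, copies (or close synthetic relatives) of the same example could land on both sides, and the evaluation score would look better than it really is.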

Before turning to resampling, try using models that naturally handle imbalanced data better, such as tree-based algorithms. Resampling should be part of a broader strategy rather than the only solution to address class imbalance.

Oversampling & Undersampling Code Summarized

For the code example, we will use the methods provided by the imblearn library:

import pandas as pd

from imblearn.over_sampling import SMOTE, ADASYN, RandomOverSampler

from imblearn.under_sampling import TomekLinks, NearMiss, RandomUnderSampler, EditedNearestNeighbours

from imblearn.combine import SMOTETomek, SMOTEENN

# Create a DataFrame from the dataset

data = {

'Temperature': [1, 0, 1, 3, 2, 3, 1, 3, 4],

'Humidity': [0, 2, 1, 1, 3, 2, 3, 4, 4],

'Activity': ['A', 'A', 'B', 'B', 'B', 'C', 'C', 'C', 'C']

}

df = pd.DataFrame(data)

# Split the data into features (X) and target (y)

X, y = df[['Temperature', 'Humidity']], df['Activity'].astype('category')

# Initialize a resampling method

# sampler = RandomOverSampler() # Random OverSampler for oversampling

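sampler = SMOTE(k_neighbors=1) # SMOTE for oversampling; k_neighbors=1 because class A has only 2 samples (the default of 5 needs at least 6 per class)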

# sampler = ADASYN() # ADASYN for oversampling

# sampler = RandomUnderSampler() # Random UnderSampler for undersampling

# sampler = TomekLinks() # Tomek Links for undersampling

# sampler = NearMiss(version=1) # NearMiss-1 for undersampling

# sampler = EditedNearestNeighbours() # ENN for undersampling

# sampler = SMOTETomek() # SMOTETomek for a combination of oversampling & undersampling

# sampler = SMOTEENN() # SMOTEENN for a combination of oversampling & undersampling

# Apply the resampling method

X_resampled, y_resampled = sampler.fit_resample(X, y)

# Print the resampled dataset

print("Resampled dataset:")

print(X_resampled)

print(y_resampled)

Technical Environment

This article uses Python 3.7, pandas 1.3, and imblearn 1.2. While the concepts discussed are generally applicable, specific code implementations may vary slightly with different versions.

About the Illustrations

Unless otherwise noted, all images are created by the author, incorporating licensed design elements from Canva Pro.

Oversampling and Undersampling, Explained: A Visual Guide with Mini 2D Dataset was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.