Deep learning has revolutionized view synthesis in computer vision, offering diverse approaches like NeRF and end-to-end style architectures. Traditionally, 3D modeling methods like voxels, point clouds, or meshes were employed. NeRF-based techniques implicitly represent 3D scenes using MLPs. Recent advancements focus on image-to-image approaches, generating novel views from collections of scene images. These methods often require costly re-training per scene, precise pose information, or help with variable input views at test time. Despite their strengths, each approach has limitations, underscoring the ongoing challenges in this field.

Researchers from the Department of Computer Science and the Neuroscience and Biomedical Engineering at Aalto University, Finland, System 2 AI, and Finnish Center for Artificial Intelligence FCAI. have developed. ViewFusion is an advanced generative method for view synthesis. It employs diffusion denoising and pixel-weighting to combine informative input views, addressing previous limitations. ViewFusion is trainable across diverse scenes, adapts to varying input views, and generates high-quality results even in challenging conditions. Though it doesn’t create a 3D scene embedding and has slower inference, it outperforms existing methods on the NMR dataset.

View synthesis has explored approaches, from NeRFs to end-to-end architectures and diffusion probabilistic models. NeRFs optimize a continuous volumetric scene function but struggle with generalization and require significant retraining for different objects. End-to-end methods like Equivariant Neural Renderer and Scene Representation Transformers offer promising results but lack variability in output and often require explicit pose information. Diffusion probabilistic models leverage stochastic processes for high-quality outputs, but pre-trained backbone reliance and limited flexibility pose challenges. Despite their strengths, existing methods have drawbacks like inflexibility and dependence on specific data structures.

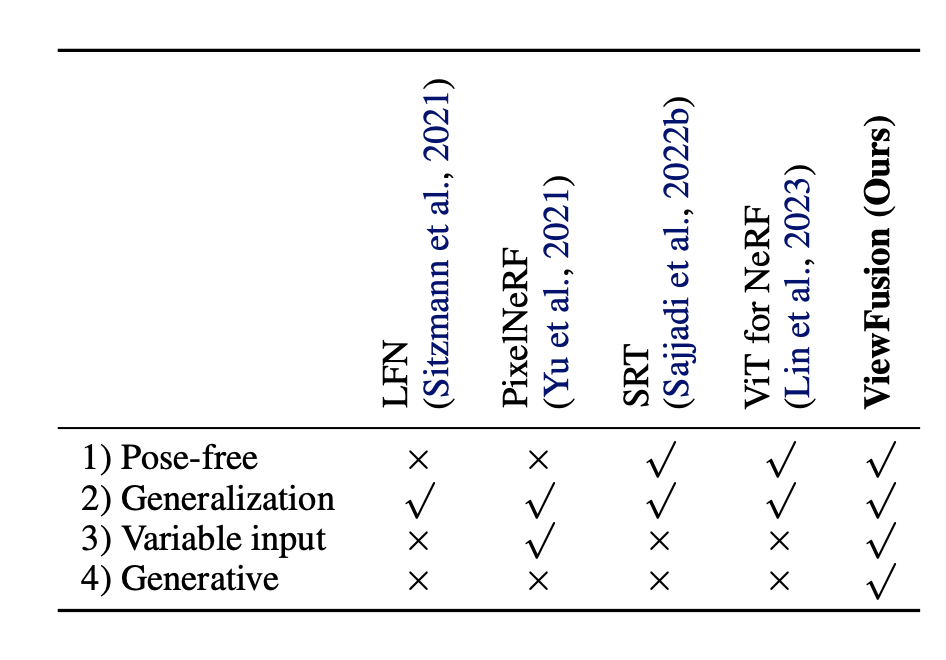

ViewFusion is an end-to-end generative approach to view synthesis that applies a diffusion denoising step to input views and combines noise gradients with a pixel-weighting mask. The model employs a composable diffusion probabilistic framework to generate views from an unordered collection of input views and a target viewing direction. The approach is evaluated using commonly used metrics such as PSNR, SSIM, and LPIPS and compared to state-of-the-art methods for novel view synthesis. The proposed approach resolves the limitations of previous methods by being trainable and generalizing across multiple scenes and object classes, adaptively taking in a variable number of pose-free views, and generating plausible views even in severely undetermined conditions.

ViewFusion’s approach to view synthesis achieves top-tier performance in key metrics like PSNR, SSIM, and LPIPS. Evaluated on the diverse NMR dataset, it consistently matches or surpasses current state-of-the-art methods. ViewFusion excels in handling various scenarios, even in challenging, underdetermined conditions. Its adaptability shines through its capability to seamlessly incorporate varying numbers of pose-free views during training and inference stages, consistently delivering high-quality results regardless of input view count. Leveraging its generative nature, ViewFusion produces realistic views comparable to or surpassing existing state-of-the-art techniques.

In conclusion, ViewFusion is a groundbreaking solution for view synthesis, boasting state-of-the-art performance across metrics like PSNR, SSIM, and LPIPS. Its adaptability and flexibility surpass previous methods by seamlessly accommodating various pose-free views and generating high-quality outputs, even in challenging, underdetermined scenarios. By introducing a weighting scheme and leveraging composable diffusion models, ViewFusion sets a new standard in the field. Beyond its immediate application, the generative nature of ViewFusion holds promise for addressing broader problems, marking it as a significant contribution with potential applications beyond novel view synthesis.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter.

Join our 37k+ ML SubReddit, 41k+ Facebook Community, Discord Channel, and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our Telegram Channel

Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.