AI Quantum Intelligence

Welcome to our AI-driven news website, where innovation meets information. In today’s fast-paced world, staying informed is essential, and our platform takes news consumption to the next level. The advanced algorithms behind “AI Quantum Intelligence” curate and deliver the most pertinent stories, so you receive the latest updates that matter most to you.


UNLOCK TOMORROW'S POSSIBILITIES

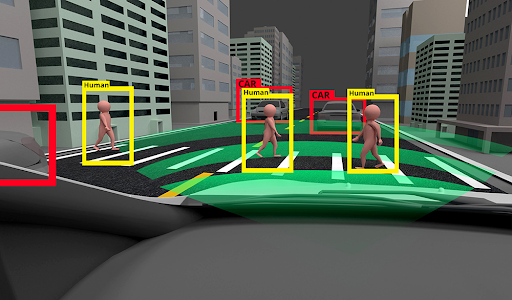

Discover the unparalleled synergy between humanity and artificial intelligence, where innovation meets collaboration, reshaping industries, enriching experiences, and charting the course for a technologically empowered future.

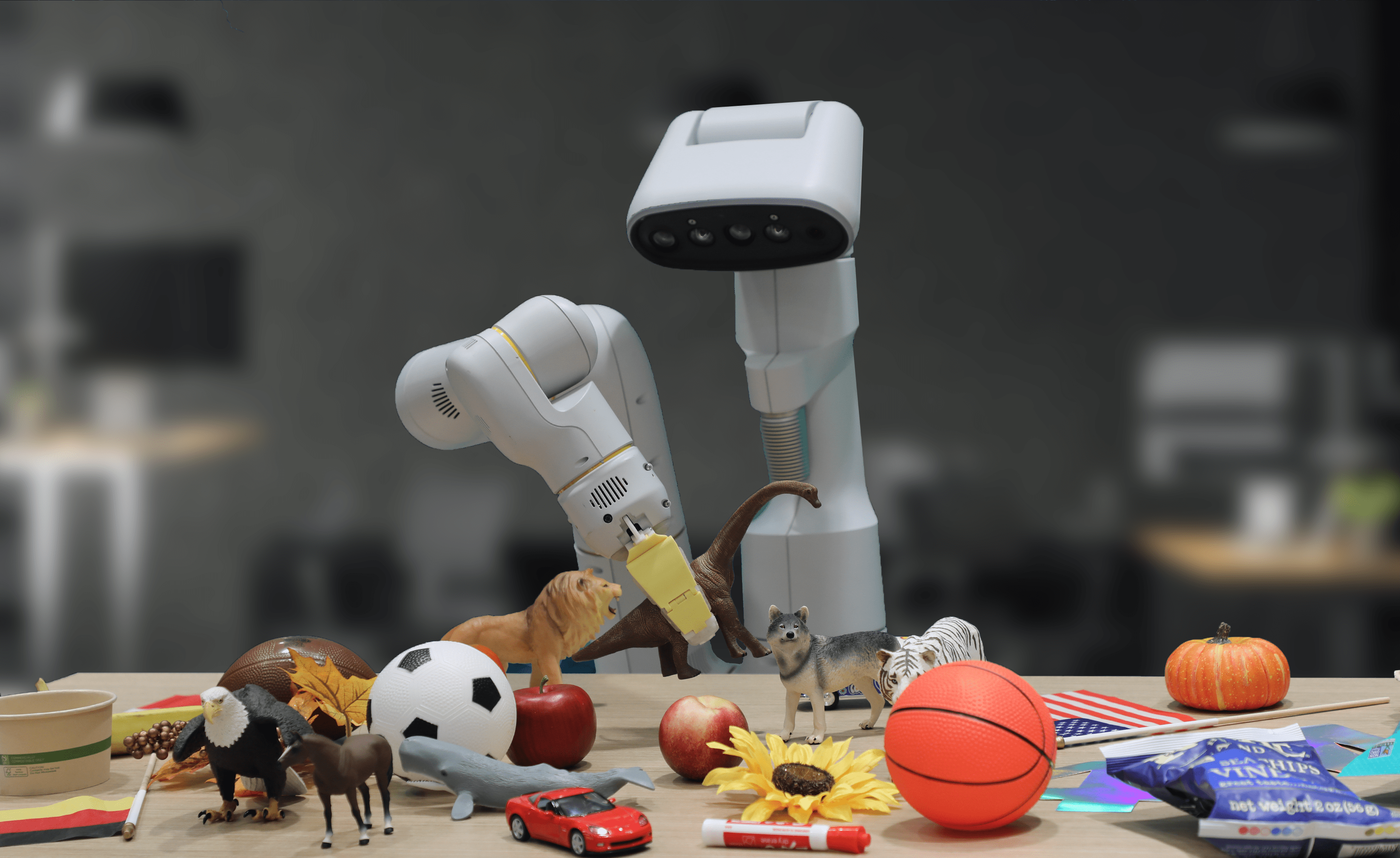

Explore the frontier where artificial intelligence breathes life into intelligent machines, revolutionizing industries, enhancing efficiency, and paving the way for a world where robotics and AI converge to redefine what's possible.

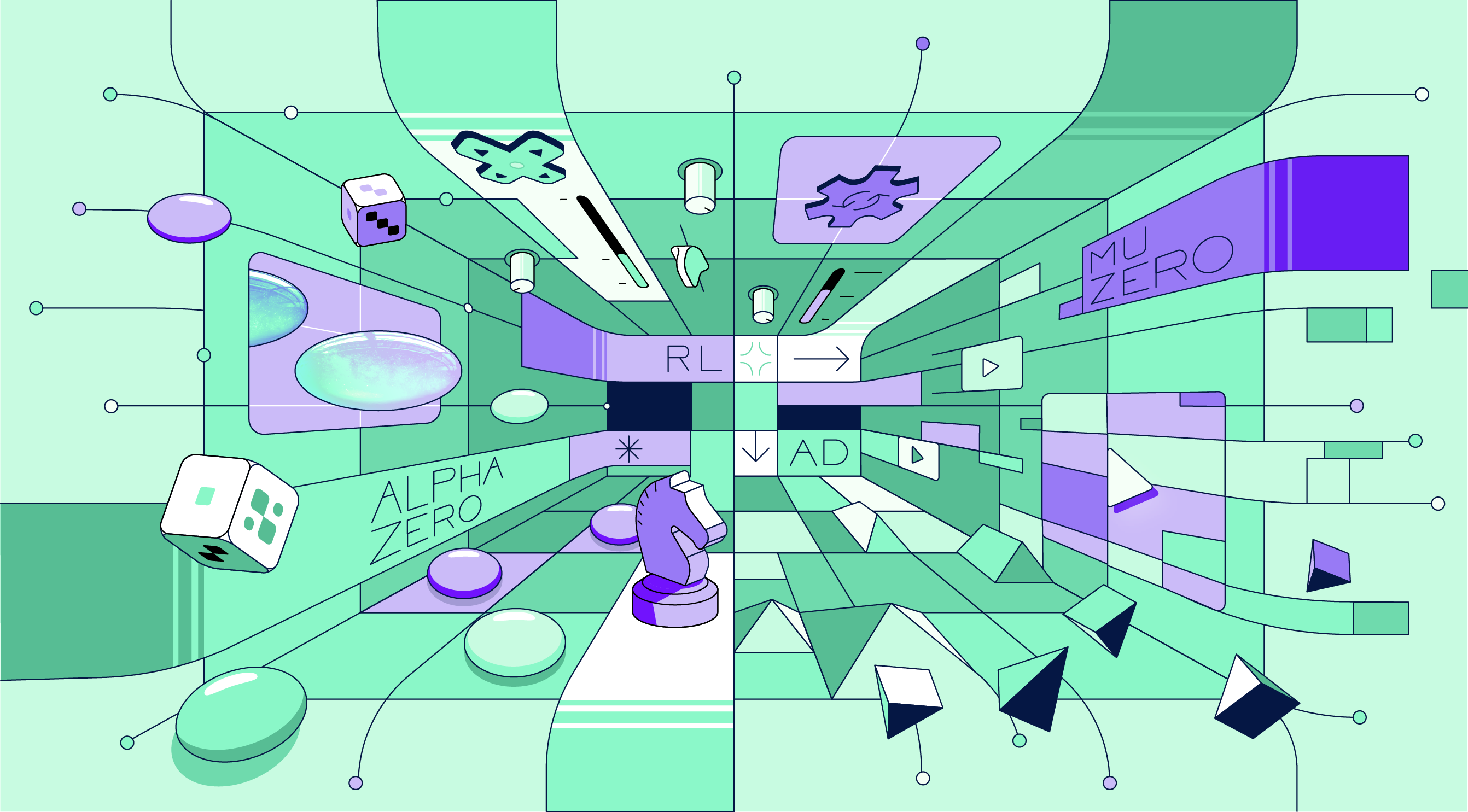

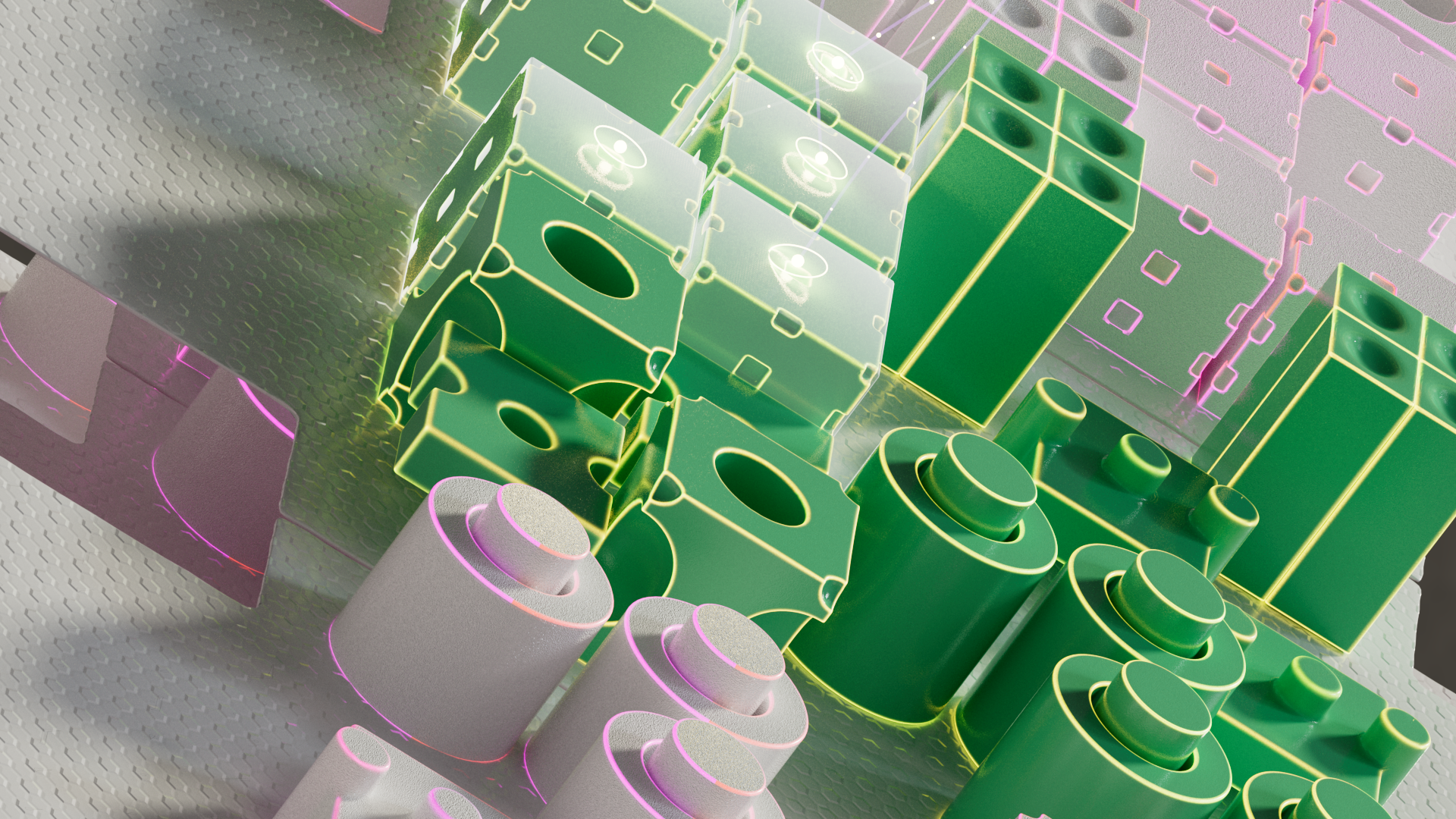

Dive into the realm where machine learning and artificial intelligence converge, and explore the algorithms that drive innovation, automate processes, and enable advances across industries and technologies.