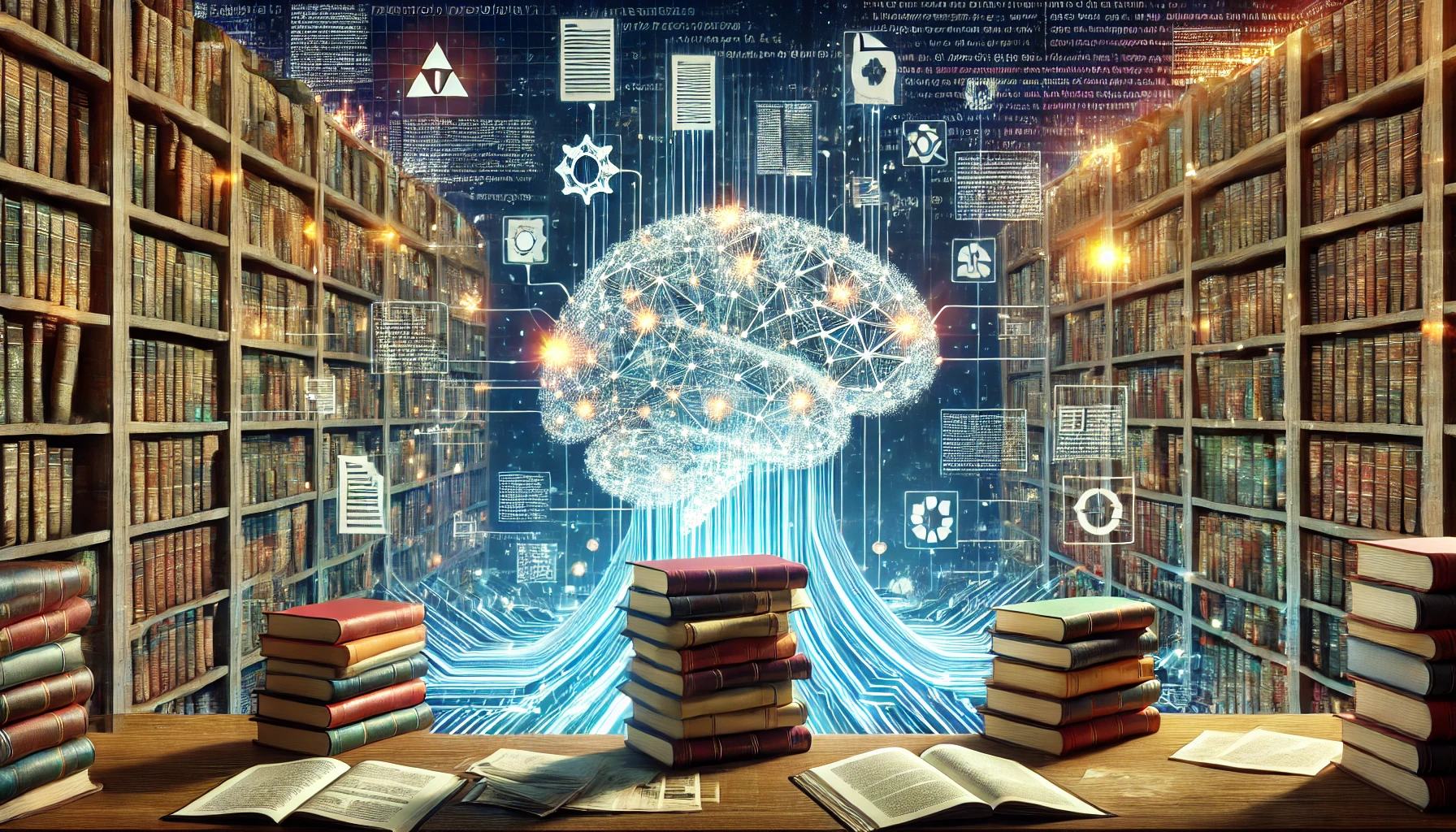

Deep Learning and Vocal Fold Analysis: The Role of the ...

Semantic segmentation of the glottal area from high-speed videoendoscopic (HSV) ...

CoordTok: A Scalable Video Tokenizer that Learns a Mapp...

Breaking down videos into smaller, meaningful parts for vision models remains ch...