Multilayer Perceptron, Explained: A Visual Guide with Mini 2D Dataset

CLASSIFICATION ALGORITHMDissecting the math (with visuals) of a tiny neural networkEver feel like neural networks are showing up everywhere? They’re in the news, in your phone, even in your social media feed. But let’s be honest — most of us have no clue how they actually work. All that fancy math and strange terms like “backpropagation”?Here’s a thought: what if we made things super simple? Let’s explore a Multilayer Perceptron (MLP) — the most basic type of neural network — to classify a simple 2D dataset using a small network, working with just a handful of data points.Through clear visuals and step-by-step explanations, you’ll see the math come to life, watching exactly how numbers and equations flow through the network and how learning really happens!All visuals: Author-created using Canva Pro. Optimized for mobile; may appear oversized on desktop.DefinitionA Multilayer Perceptron (MLP) is a type of neural network that uses layers of connected nodes to learn patterns. It gets its name from having multiple layers — typically an input layer, one or more middle (hidden) layers, and an output layer.Each node connects to all nodes in the next layer. When the network learns, it adjusts the strength of these connections based on training examples. For instance, if certain connections lead to correct predictions, they become stronger. If they lead to mistakes, they become weaker.This way of learning through examples helps the network recognize patterns and make predictions about new situations it hasn’t seen before.MLPs are considered fundamental in the field of neural networks and deep learning because they can handle complex problems that simpler methods struggle with.???? Dataset UsedTo understand how MLPs work, let’s start with a simple example: a mini 2D dataset with just a few samples. We’ll use the same dataset from our previous article to keep things manageable.Columns: Temperature (0–3), Humidity (0–3), Play Golf (Yes/No). The training dataset has 2 dimensions and 8 samples.Rather than jumping straight into training, let’s try to understand the key pieces that make up a neural network and how they work together.Step 0: Network StructureFirst, let’s look at the parts of our network:Node (Neuron)We begin with the basic structure of a neural network. This structure is composed of many individual units called nodes or neurons.This neural network has 8 nodes.These nodes are organized into groups called layers to work together:Input layerThe input layer is where we start. It takes in our raw data, and the number of nodes here matches how many features we have.The input layer has 2 nodes, one for each feature.Hidden layerNext come the hidden layers. We can have one or more of these layers, and we can choose how many nodes each one has. Often, we use fewer nodes in each layer as we go deeper.This neural network has 2 hidden layers with 3 and 2 nodes, respectively.Output layerThe last layer gives us our final answer. The number of nodes in our output layer depends on our task: for binary classification or regression, we might have just one output node, while for multi-class problems, we’d have one node per class.This neural network has output layer with only 1 node (because binary).WeightsThe nodes connect to each other using weights — numbers that control how much each piece of information matters. Each connection between nodes has its own weight. This means we need lots of weights: every node in one layer connects to every node in the next layer.This neural network has total of 14 weights.BiasesAlong with weights, each node also has a bias — an extra number that helps it make better decisions. While weights control connections between nodes, biases help each node adjust its output.This neural network has 6 bias values.The Neural NetworkIn summary, we will use and train this neural network:Our network consists of 4 layers: 1 input layer (2 nodes), 2 hidden layers (3 nodes & 2 nodes), and 1 output layer (1 node). This creates a 2–3–2–1 architecture.Let’s look at this new diagram that shows our network from top to bottom. I’ve updated it to make the math easier to follow: information starts at the top nodes and flows down through the layers until it reaches the final answer at the bottom.Now that we understand how our network is built, let’s see how information moves through it. This is called the forward pass.Step 1: Forward PassLet’s see how our network turns input into output, step by step:Weight initializationBefore our network can start learning, we need to give each weight a starting value. We choose small random numbers between -1 and 1. Starting with random numbers helps our network learn without any early preferences or patterns.All the weights are chosen randomly from a [-0.5, 0.5] range.Weighted sumEach node processes incoming data in two steps. First, it multiplies each input by its weight and adds all these numbers together. Then it adds one more number — the bias — to complete the calculation. The

CLASSIFICATION ALGORITHM

Dissecting the math (with visuals) of a tiny neural network

Ever feel like neural networks are showing up everywhere? They’re in the news, in your phone, even in your social media feed. But let’s be honest — most of us have no clue how they actually work. All that fancy math and strange terms like “backpropagation”?

Here’s a thought: what if we made things super simple? Let’s explore a Multilayer Perceptron (MLP) — the most basic type of neural network — to classify a simple 2D dataset using a small network, working with just a handful of data points.

Through clear visuals and step-by-step explanations, you’ll see the math come to life, watching exactly how numbers and equations flow through the network and how learning really happens!

Definition

A Multilayer Perceptron (MLP) is a type of neural network that uses layers of connected nodes to learn patterns. It gets its name from having multiple layers — typically an input layer, one or more middle (hidden) layers, and an output layer.

Each node connects to all nodes in the next layer. When the network learns, it adjusts the strength of these connections based on training examples. For instance, if certain connections lead to correct predictions, they become stronger. If they lead to mistakes, they become weaker.

This way of learning through examples helps the network recognize patterns and make predictions about new situations it hasn’t seen before.

???? Dataset Used

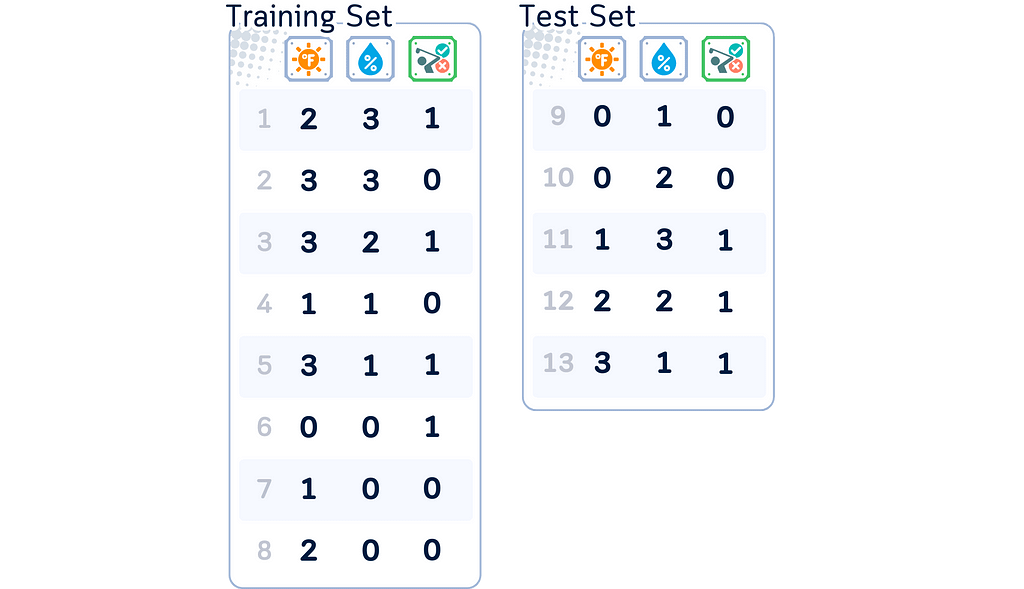

To understand how MLPs work, let’s start with a simple example: a mini 2D dataset with just a few samples. We’ll use the same dataset from our previous article to keep things manageable.

Rather than jumping straight into training, let’s try to understand the key pieces that make up a neural network and how they work together.

Step 0: Network Structure

First, let’s look at the parts of our network:

Node (Neuron)

We begin with the basic structure of a neural network. This structure is composed of many individual units called nodes or neurons.

These nodes are organized into groups called layers to work together:

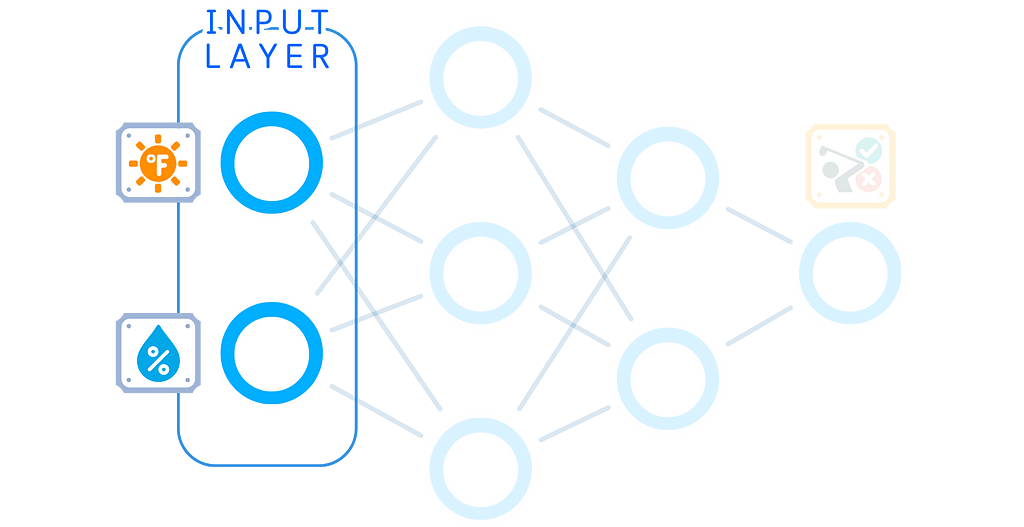

Input layer

The input layer is where we start. It takes in our raw data, and the number of nodes here matches how many features we have.

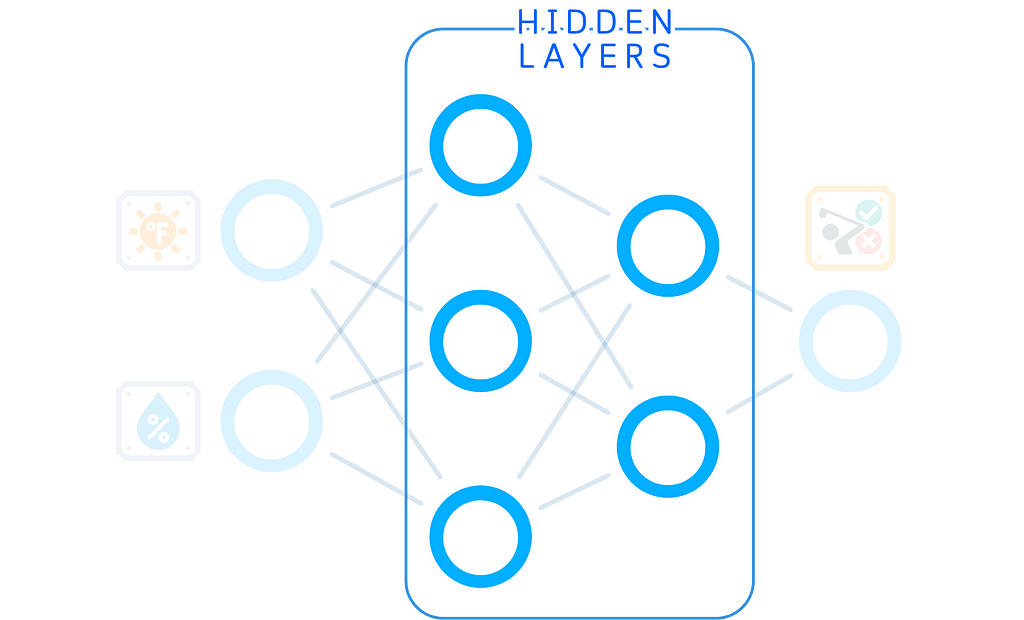

Hidden layer

Next come the hidden layers. We can have one or more of these layers, and we can choose how many nodes each one has. Often, we use fewer nodes in each layer as we go deeper.

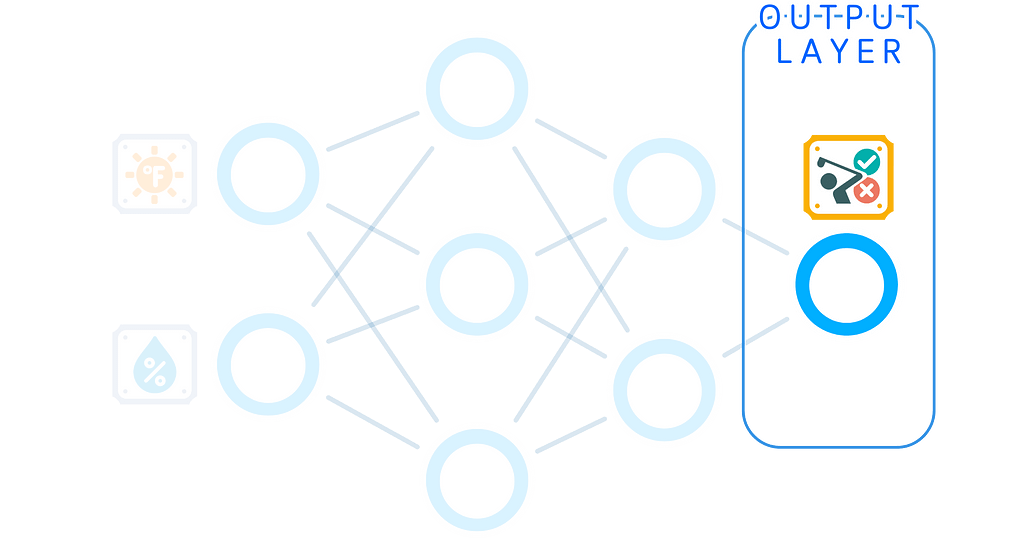

Output layer

The last layer gives us our final answer. The number of nodes in our output layer depends on our task: for binary classification or regression, we might have just one output node, while for multi-class problems, we’d have one node per class.

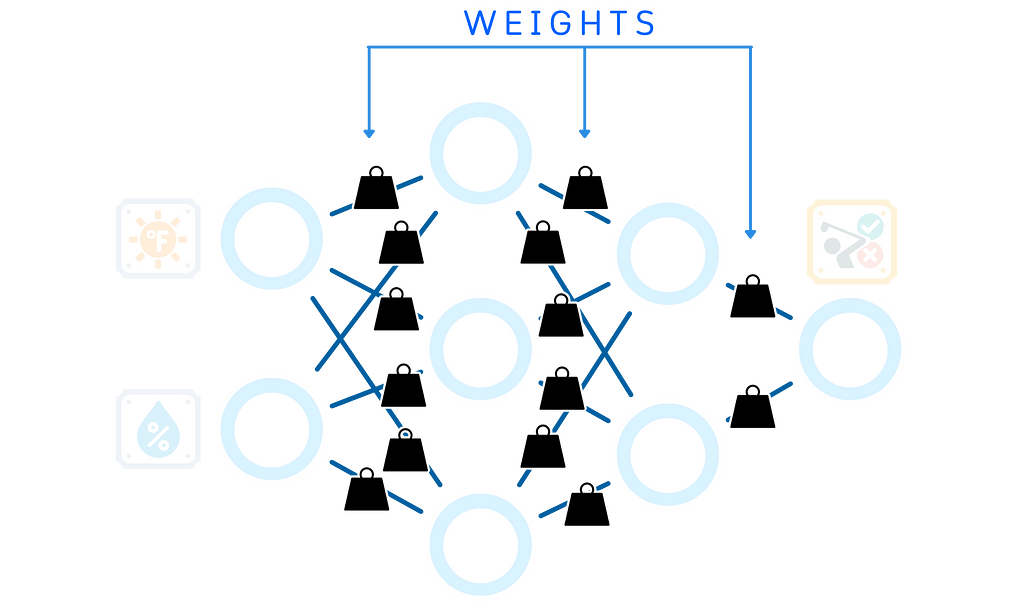

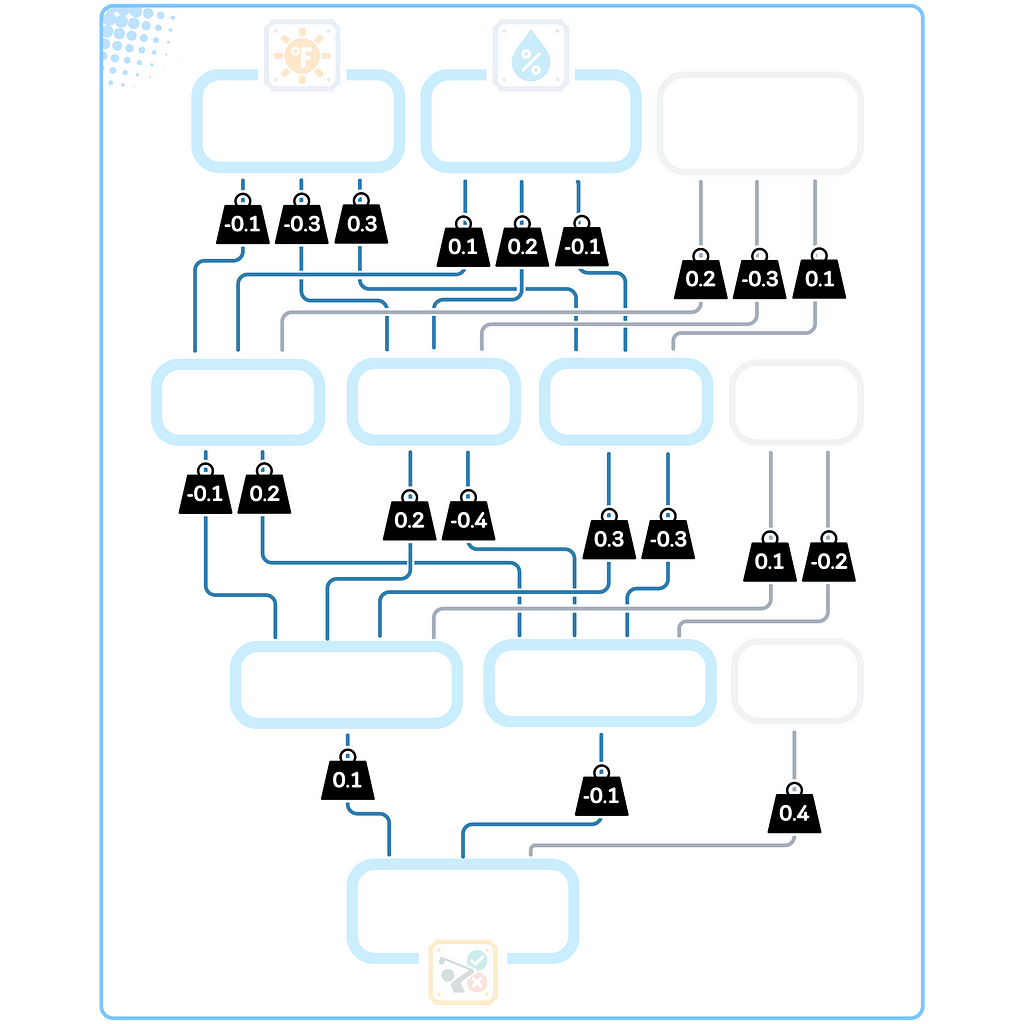

Weights

The nodes connect to each other using weights — numbers that control how much each piece of information matters. Each connection between nodes has its own weight. This means we need lots of weights: every node in one layer connects to every node in the next layer.

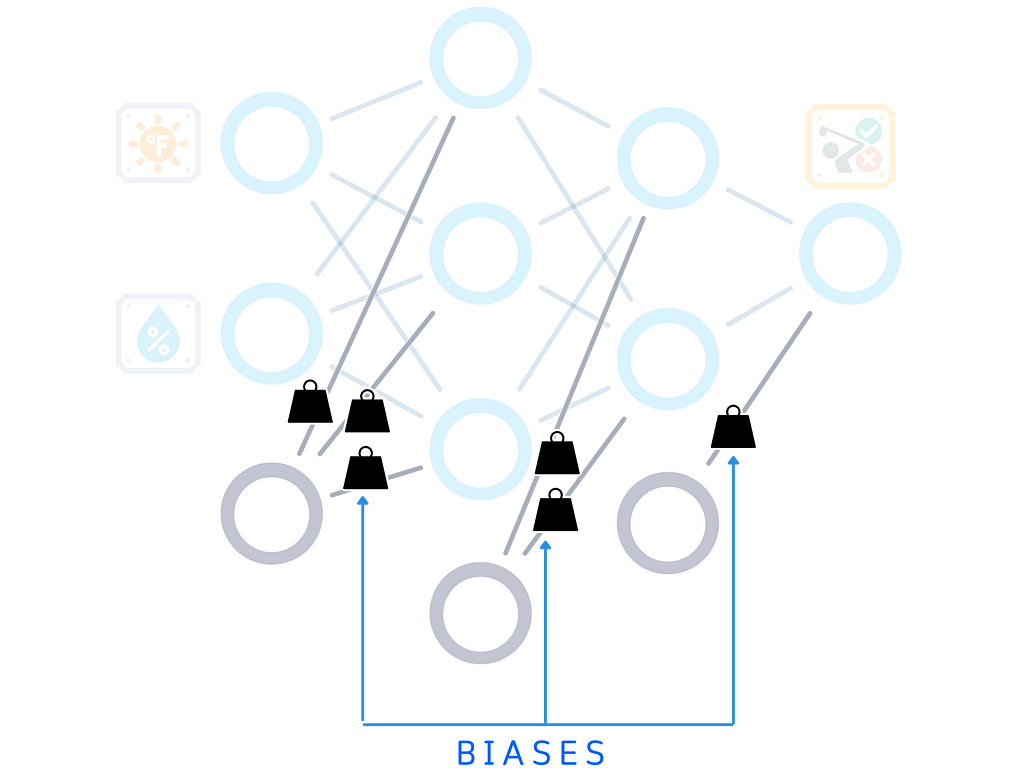

Biases

Along with weights, each node also has a bias — an extra number that helps it make better decisions. While weights control connections between nodes, biases help each node adjust its output.

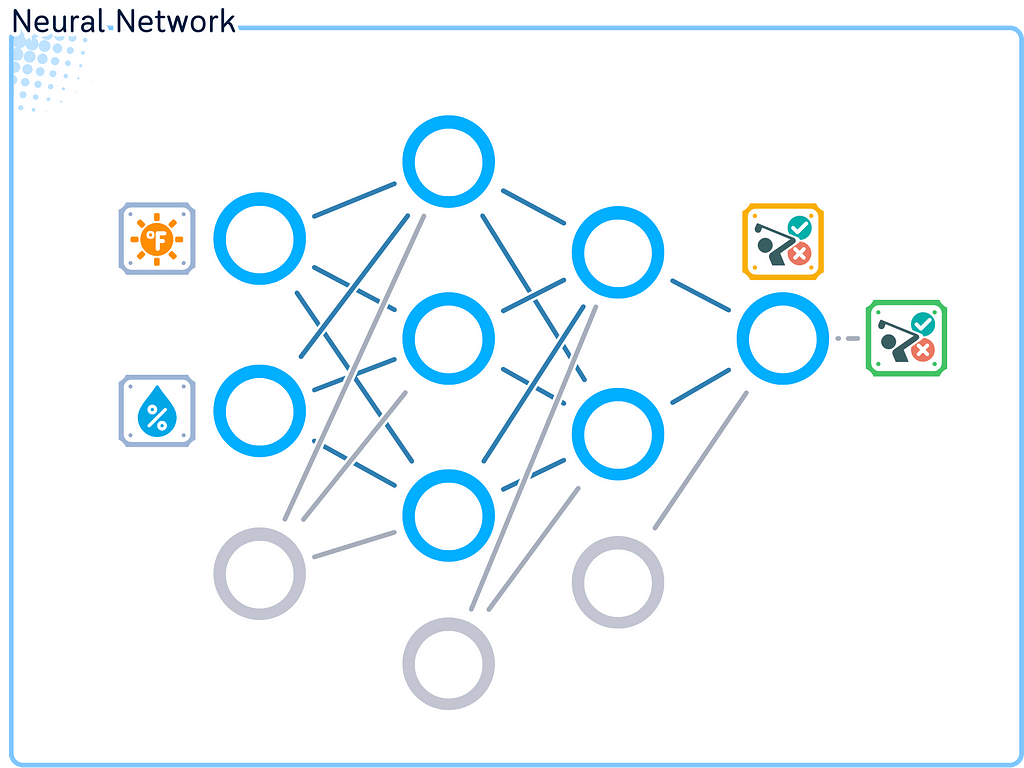

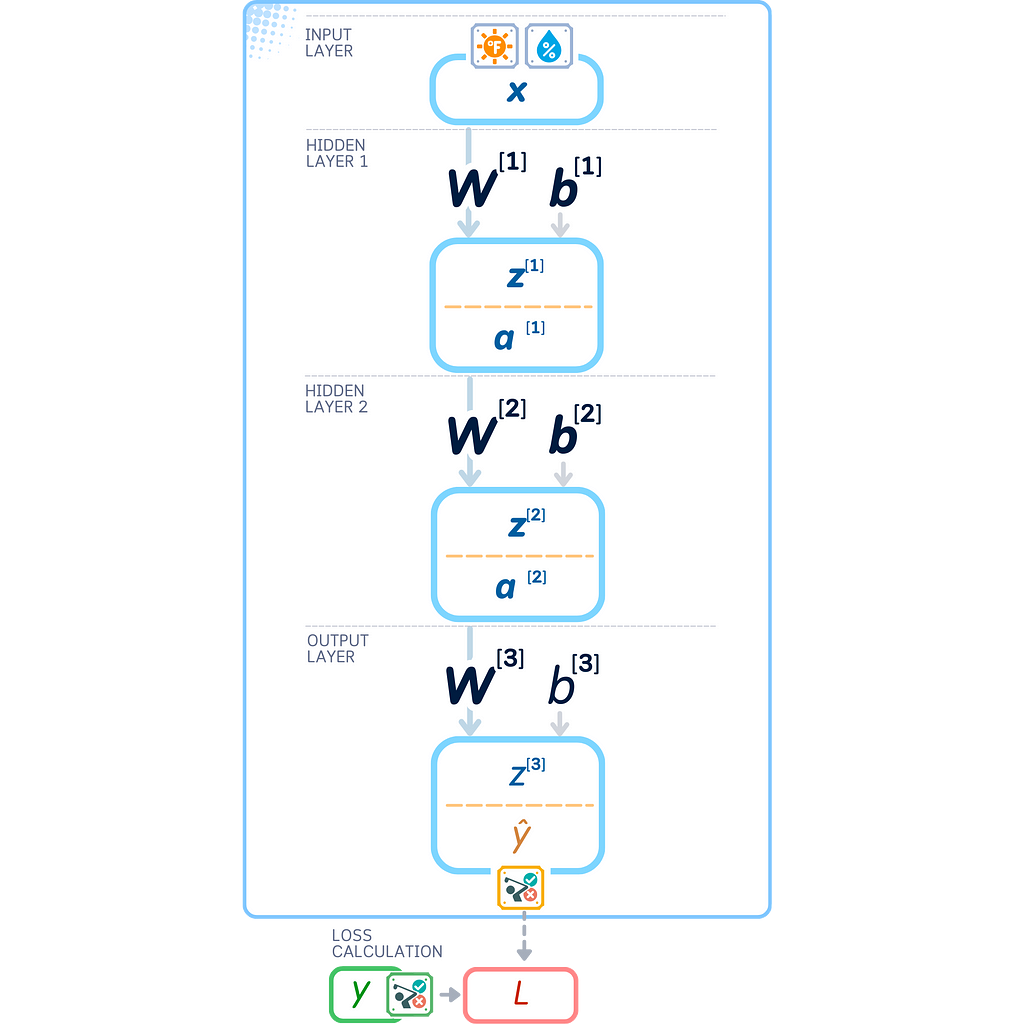

The Neural Network

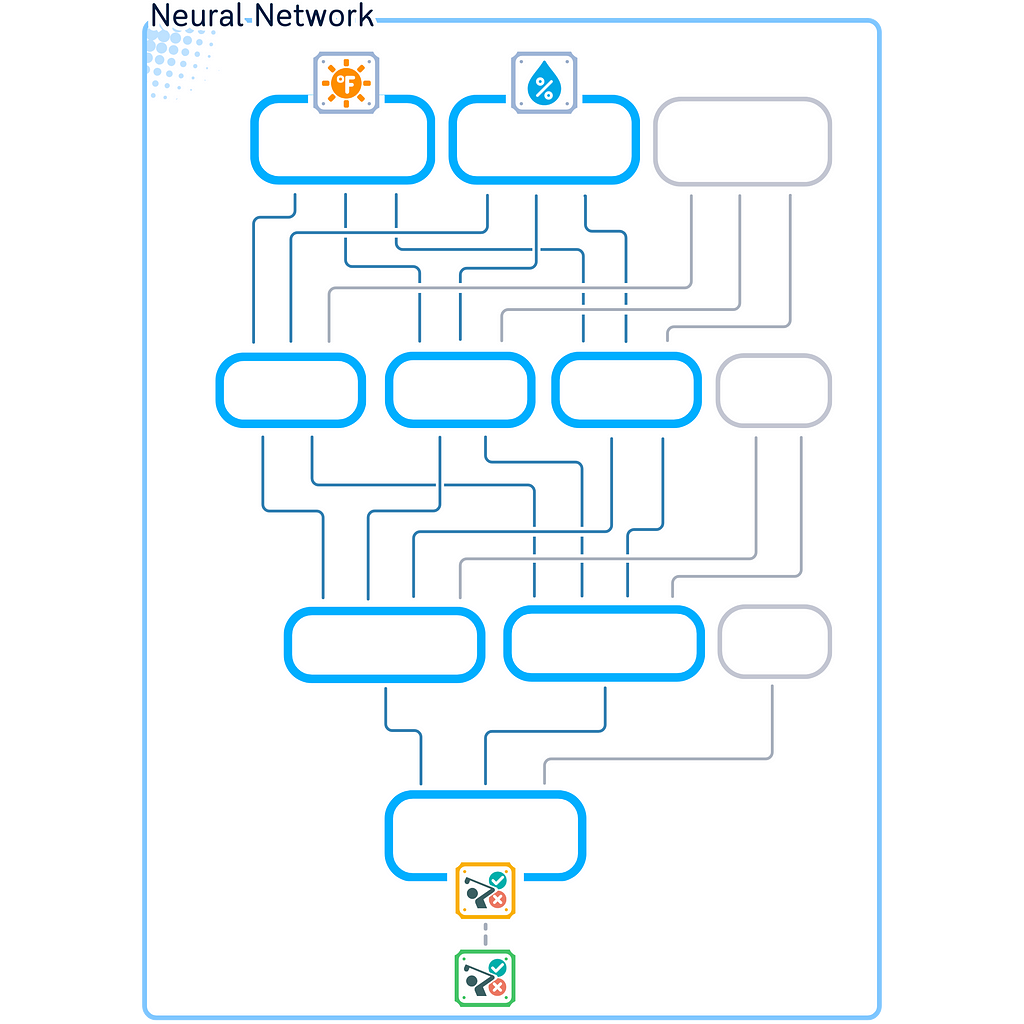

In summary, we will use and train this neural network:

Let’s look at this new diagram that shows our network from top to bottom. I’ve updated it to make the math easier to follow: information starts at the top nodes and flows down through the layers until it reaches the final answer at the bottom.

Now that we understand how our network is built, let’s see how information moves through it. This is called the forward pass.

Step 1: Forward Pass

Let’s see how our network turns input into output, step by step:

Weight initialization

Before our network can start learning, we need to give each weight a starting value. We choose small random numbers between -1 and 1. Starting with random numbers helps our network learn without any early preferences or patterns.

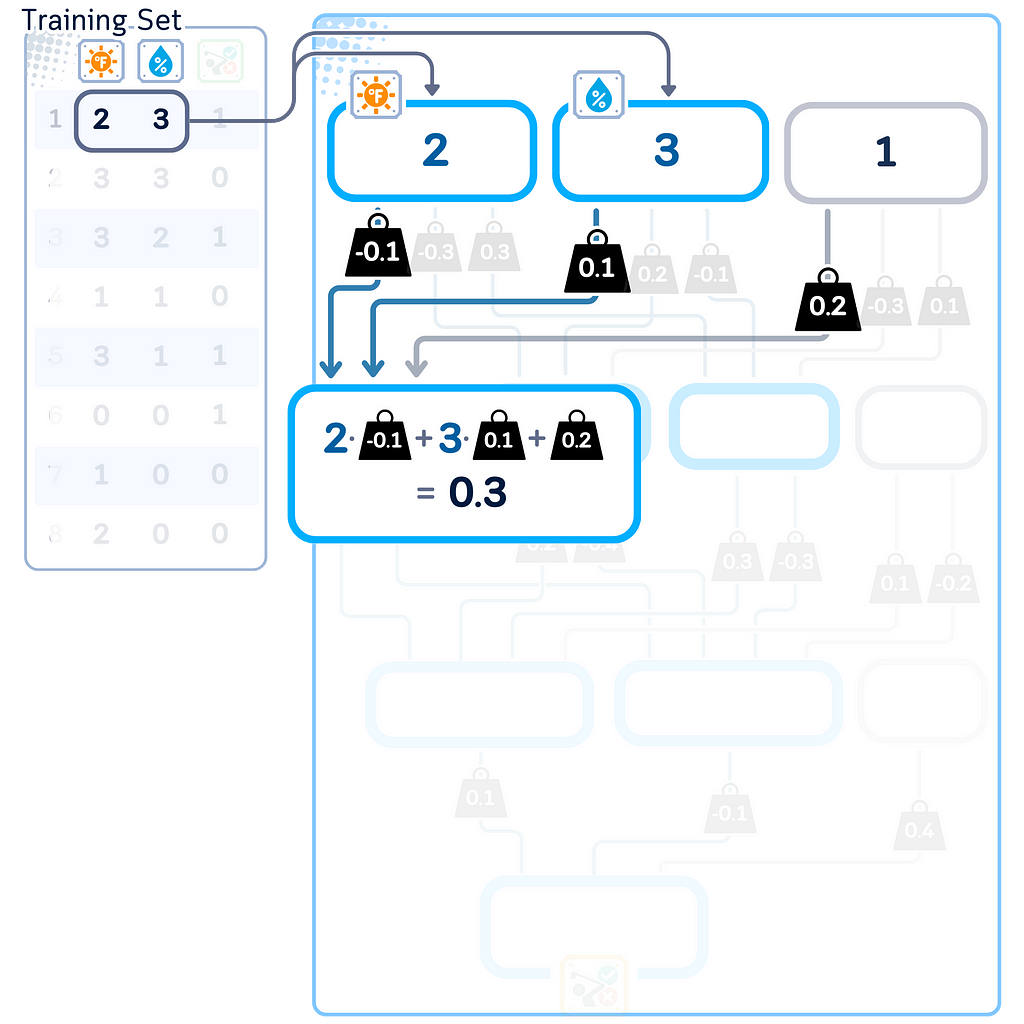

Weighted sum

Each node processes incoming data in two steps. First, it multiplies each input by its weight and adds all these numbers together. Then it adds one more number — the bias — to complete the calculation. The bias is essentially a weight with a constant input of 1.

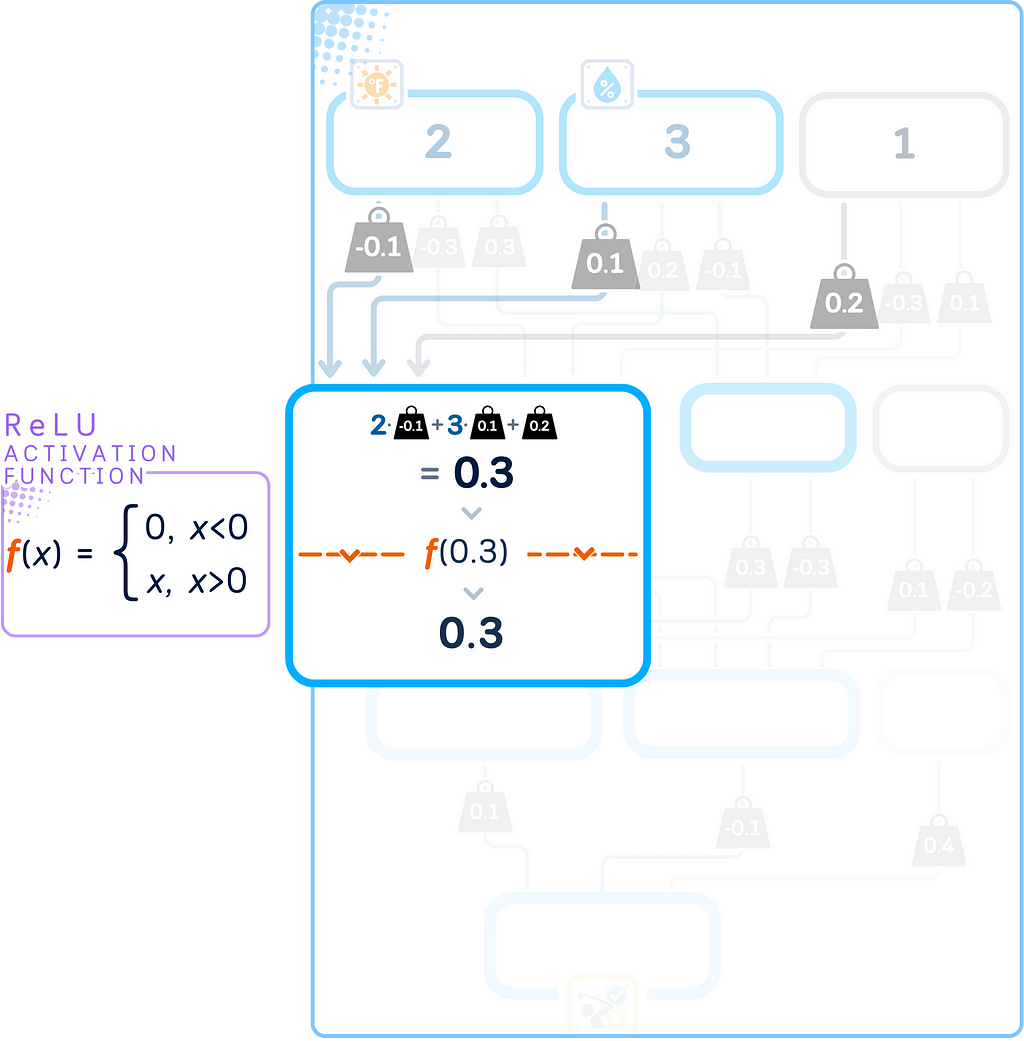

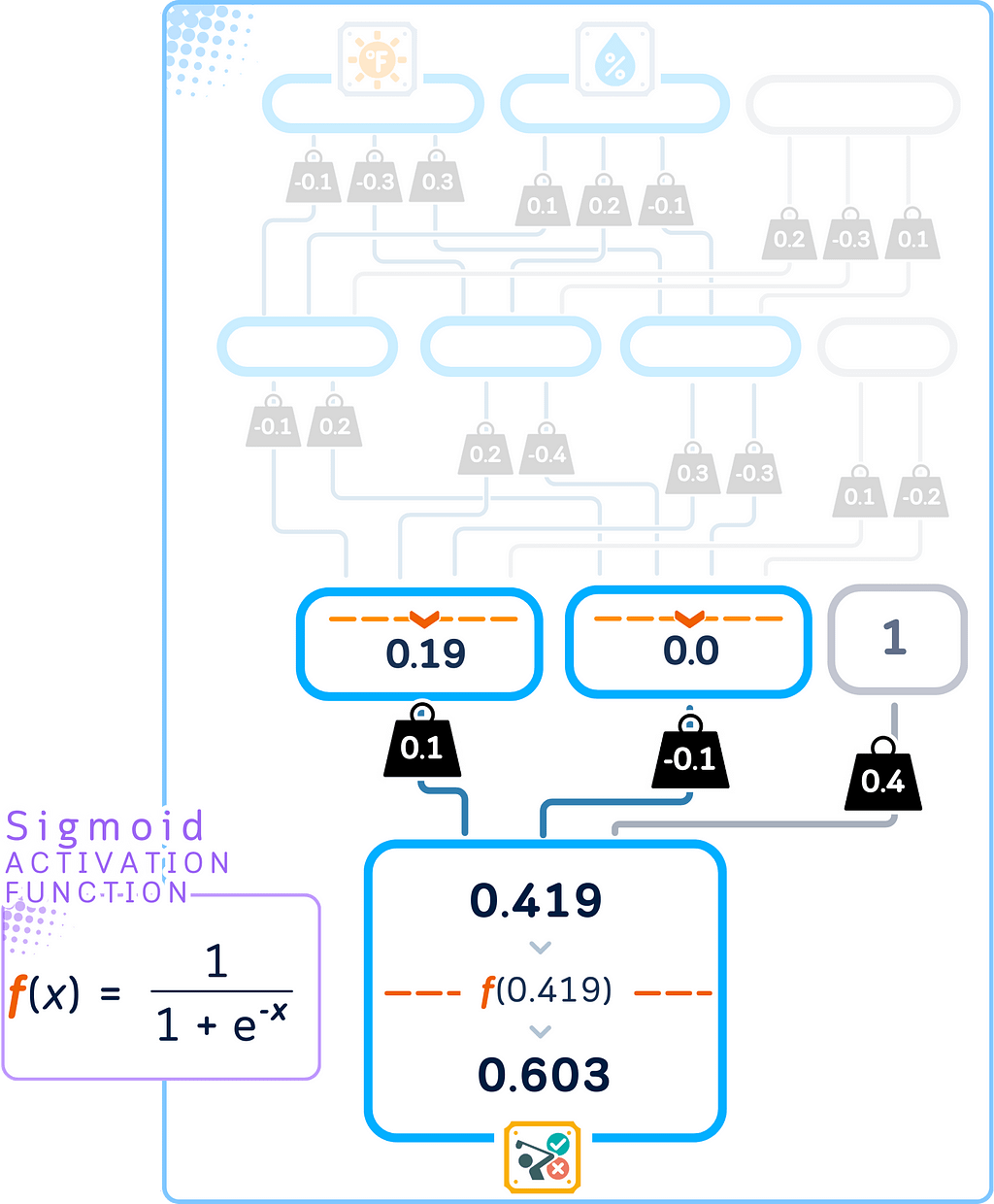

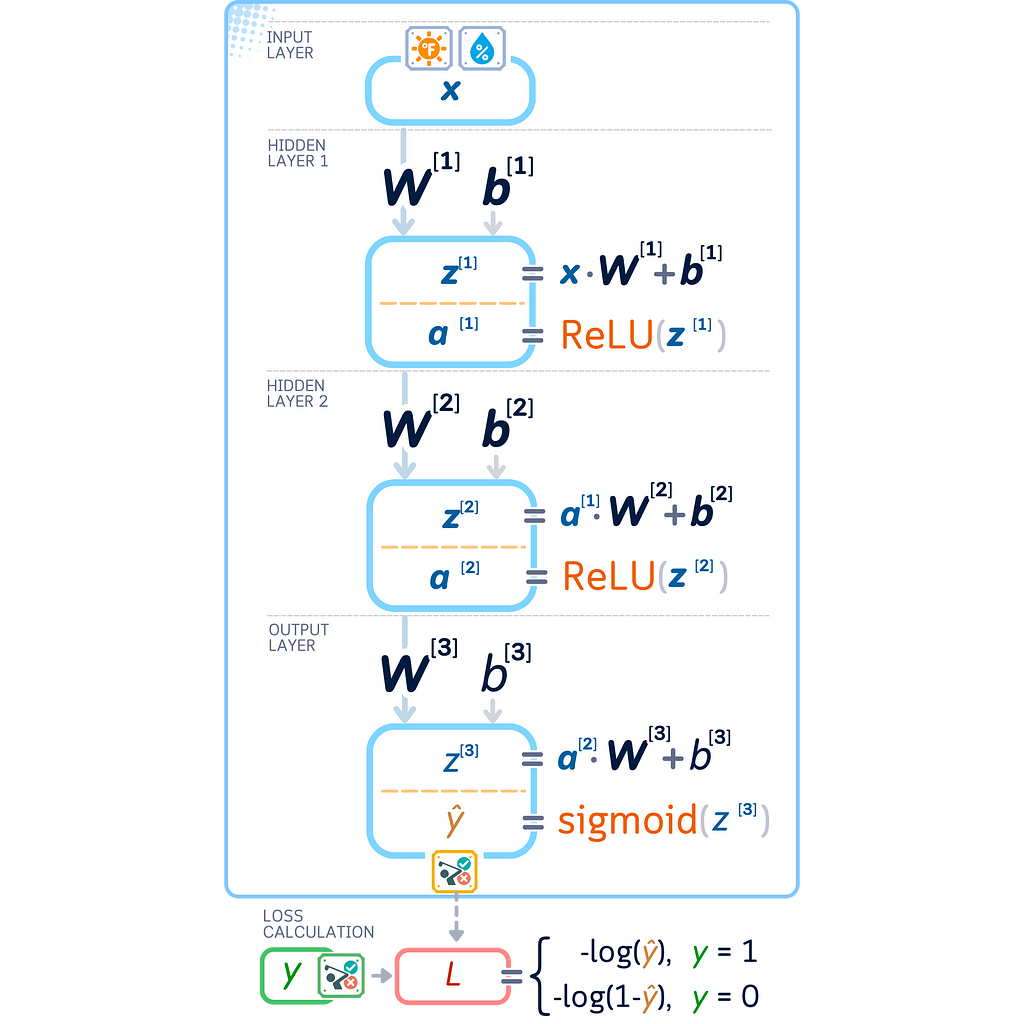

Activation function

Each node takes its weighted sum and runs it through an activation function to produce its output. The activation function helps our network learn complicated patterns by introducing non-linear behavior.

In our hidden layers, we use the ReLU function (Rectified Linear Unit). ReLU is straightforward: if a number is positive, it stays the same; if it’s negative, it becomes zero.

Layer-by-layer computation

This two-step process (weighted sums and activation) happens in every layer, one after another. Each layer’s calculations help transform our input data step by step into our final prediction.

Output generation

The last layer creates our network’s final answer. For our yes/no classification task, we use a special activation function called sigmoid in this layer.

The sigmoid function turns any number into a value between 0 and 1. This makes it perfect for yes/no decisions, as we can treat the output like a probability: closer to 1 means more likely ‘yes’, closer to 0 means more likely ‘no’.

This process of forward pass turns our input into a prediction between 0 and 1. But how good are these predictions? Next, we’ll measure how close our predictions are to the correct answers.

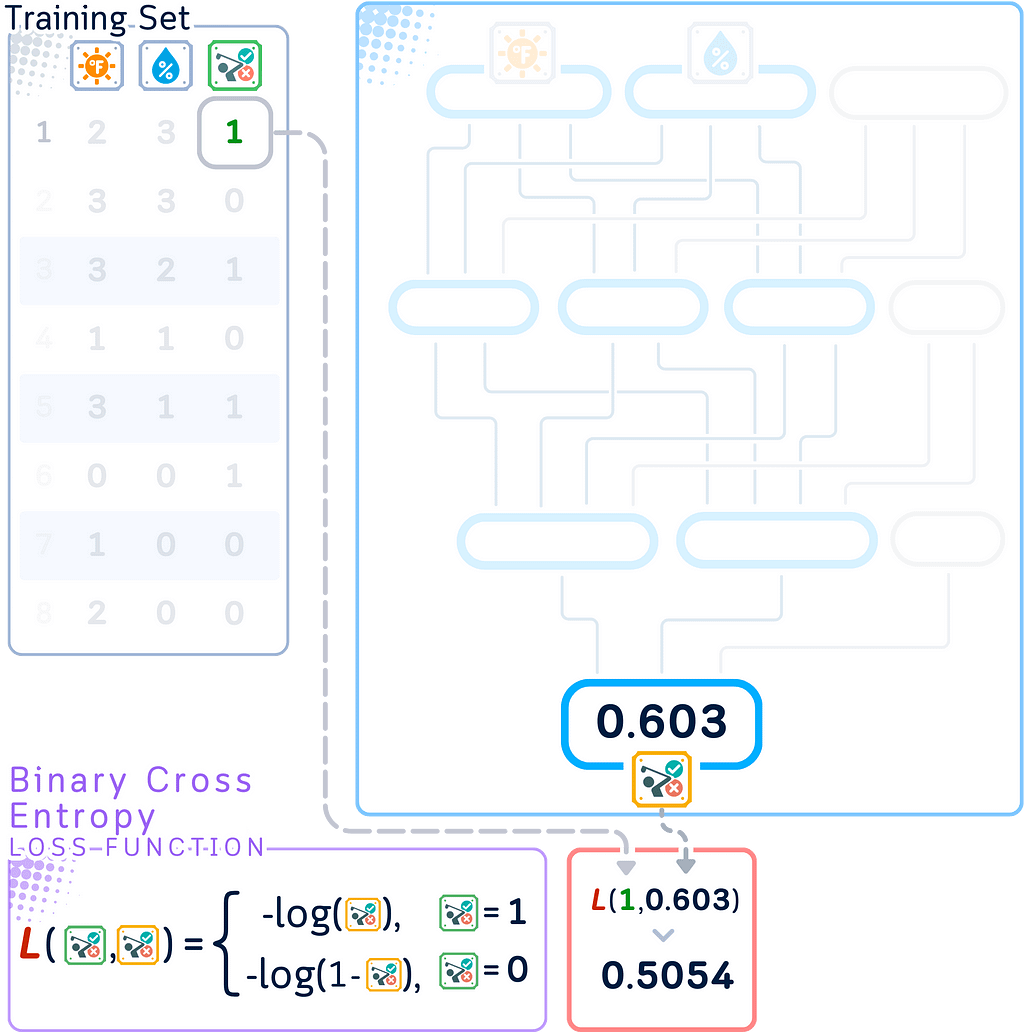

Step 2: Loss Calculation

Loss function

To check how well our network is doing, we measure the difference between its predictions and the correct answers. For binary classification, we use a method called binary cross-entropy that shows us how far off our predictions are from the true values.

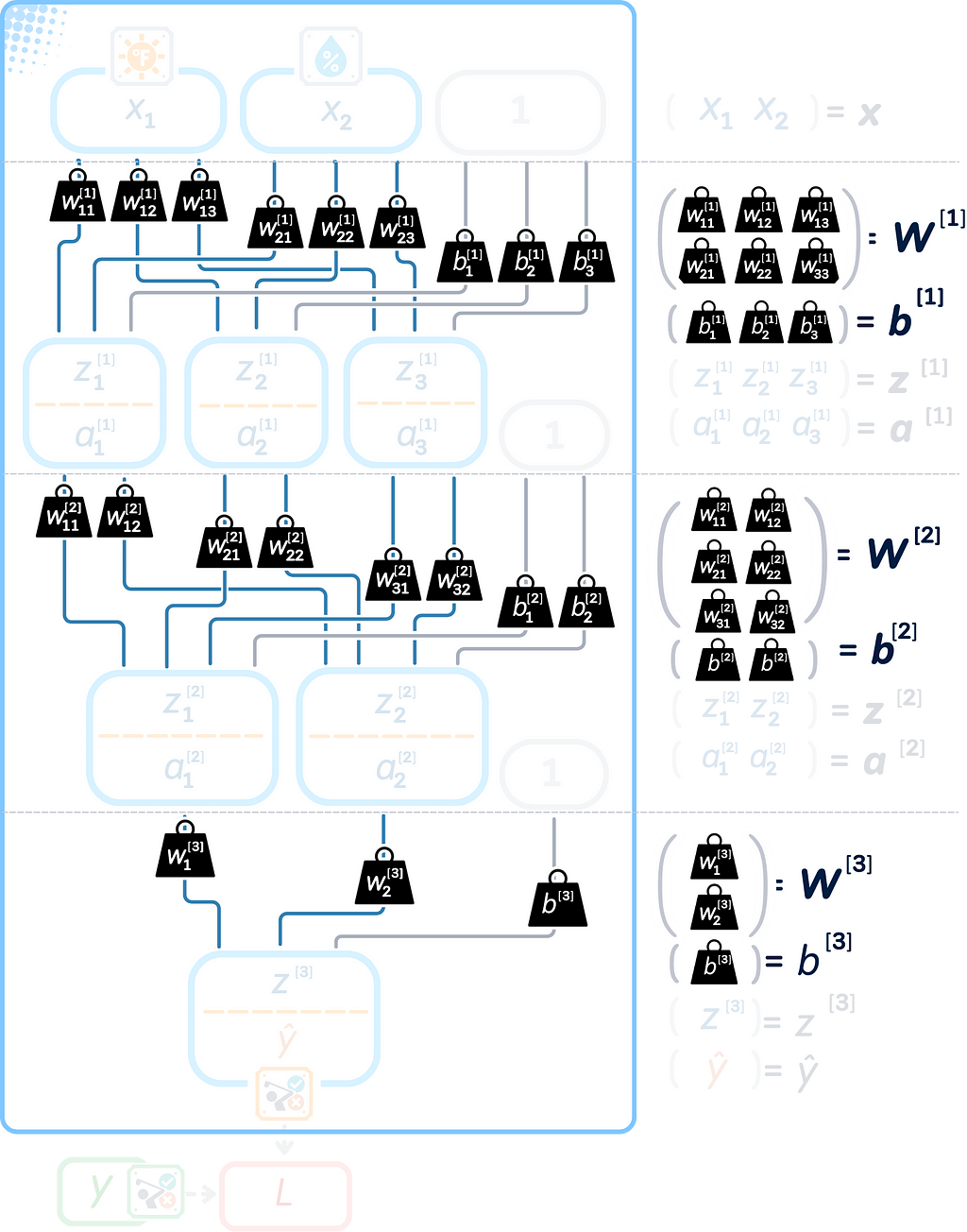

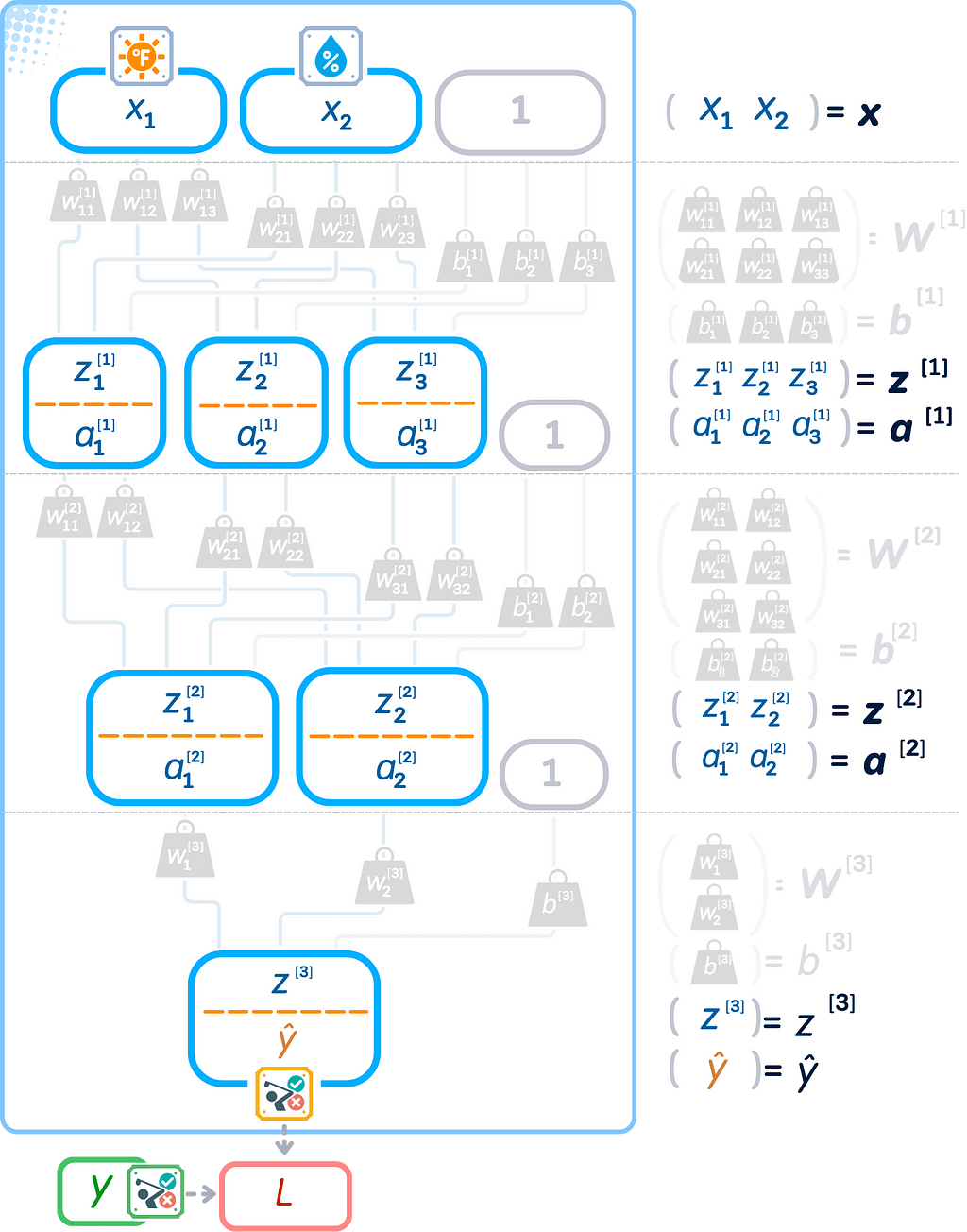

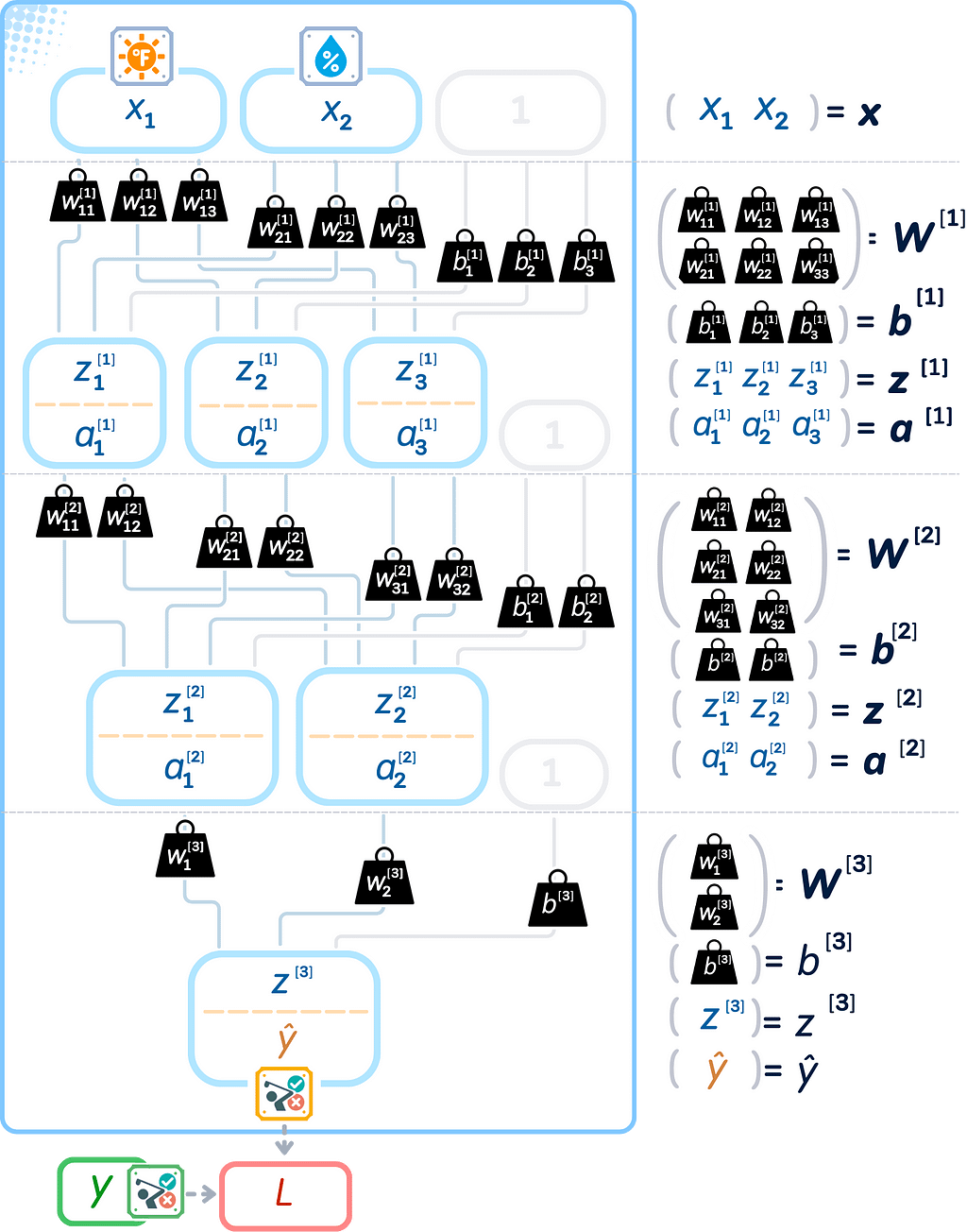

Math Notation in Neural Network

To improve our network’s performance, we’ll need to use some math symbols. Let’s define what each symbol means before we continue:

Weights and Bias

Weights are represented as matrices and biases as vectors (or 1-dimensional matrices). The bracket notation [1] indicates the layer number.

Input, Output, Weighted Sum, and Value after Activation

The values within nodes can be represented as vectors, forming a consistent mathematical framework.

All Together

These math symbols help us write exactly what our network does:

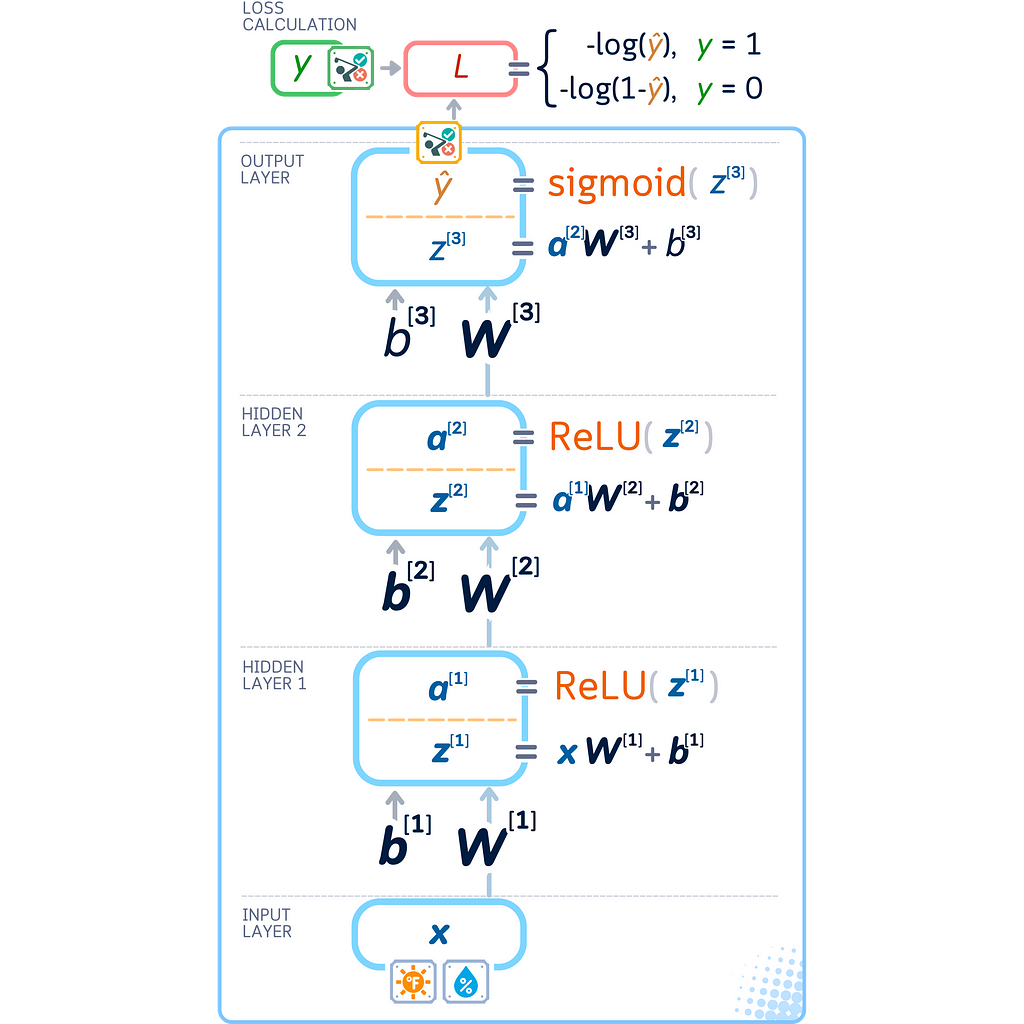

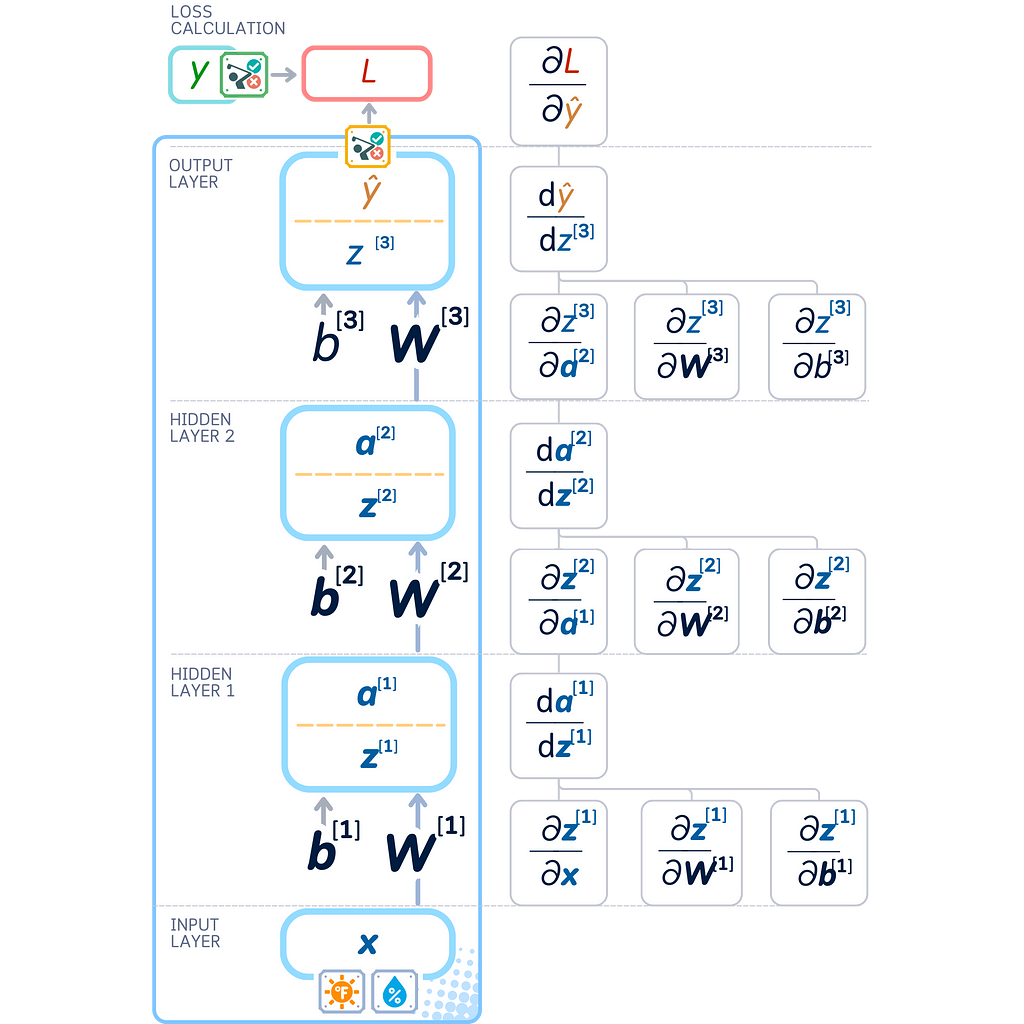

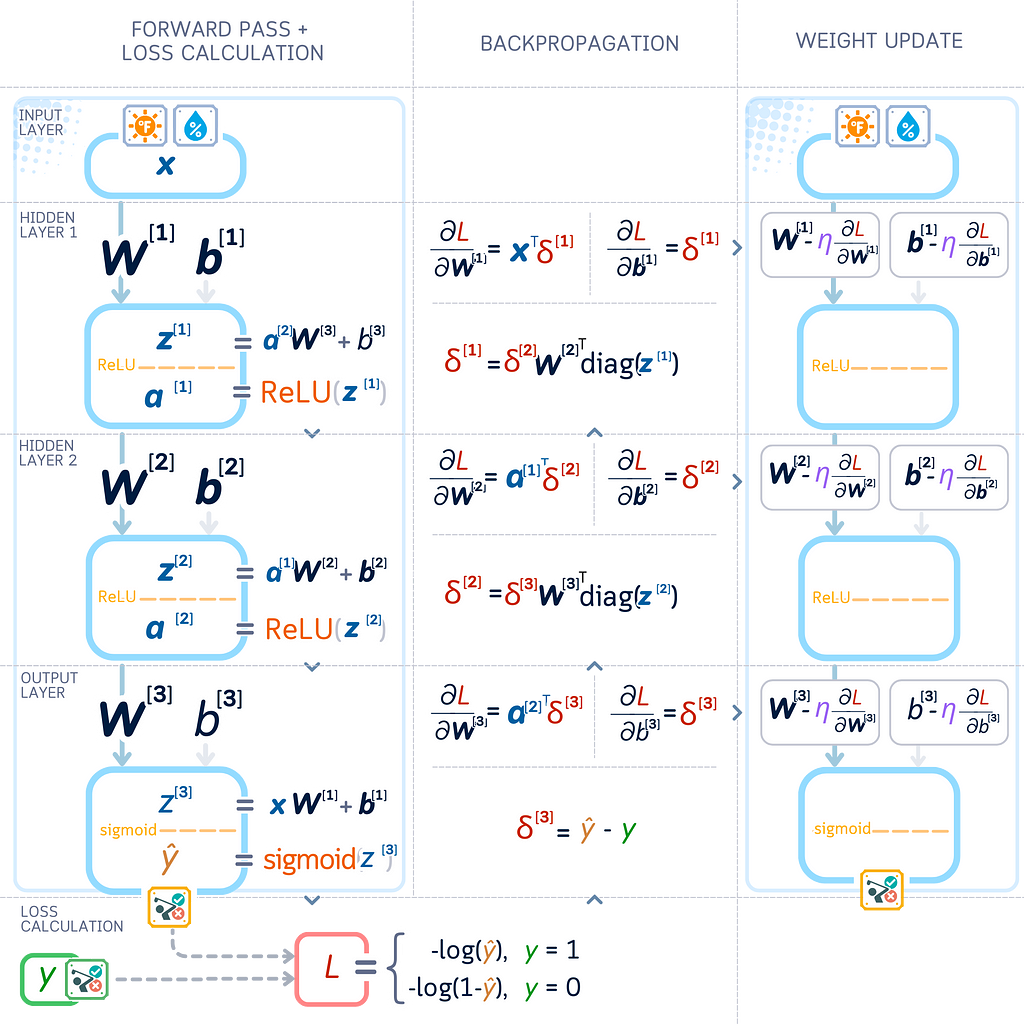

Let’s look at a diagram that shows all the math happening in our network. Each layer has:

- Weights (W) and biases (b) that connect layers

- Values before activation (z)

- Values after activation (a)

- Final prediction (ŷ) and loss (L) at the end

Let’s see exactly what happens at each layer:

First hidden layer:

· Takes our input x, multiplies it by weights W[1], adds bias b[1] to get z[1]

· Applies ReLU to z[1] to get output a[1]

Second hidden layer:

· Takes a[1], multiplies by weights W[2], adds bias b[2] to get z[2]

· Applies ReLU to z[2] to get output a[2]

Output layer:

· Takes a[2], multiplies by weights W[3], adds bias b[3] to get z[3]

· Applies sigmoid to z[3] to get our final prediction ŷ

Now that we see all the math in our network, how do we improve these numbers to get better predictions? This is where backpropagation comes in — it shows us how to adjust our weights and biases to make fewer mistakes.

Step 3: Backpropagation

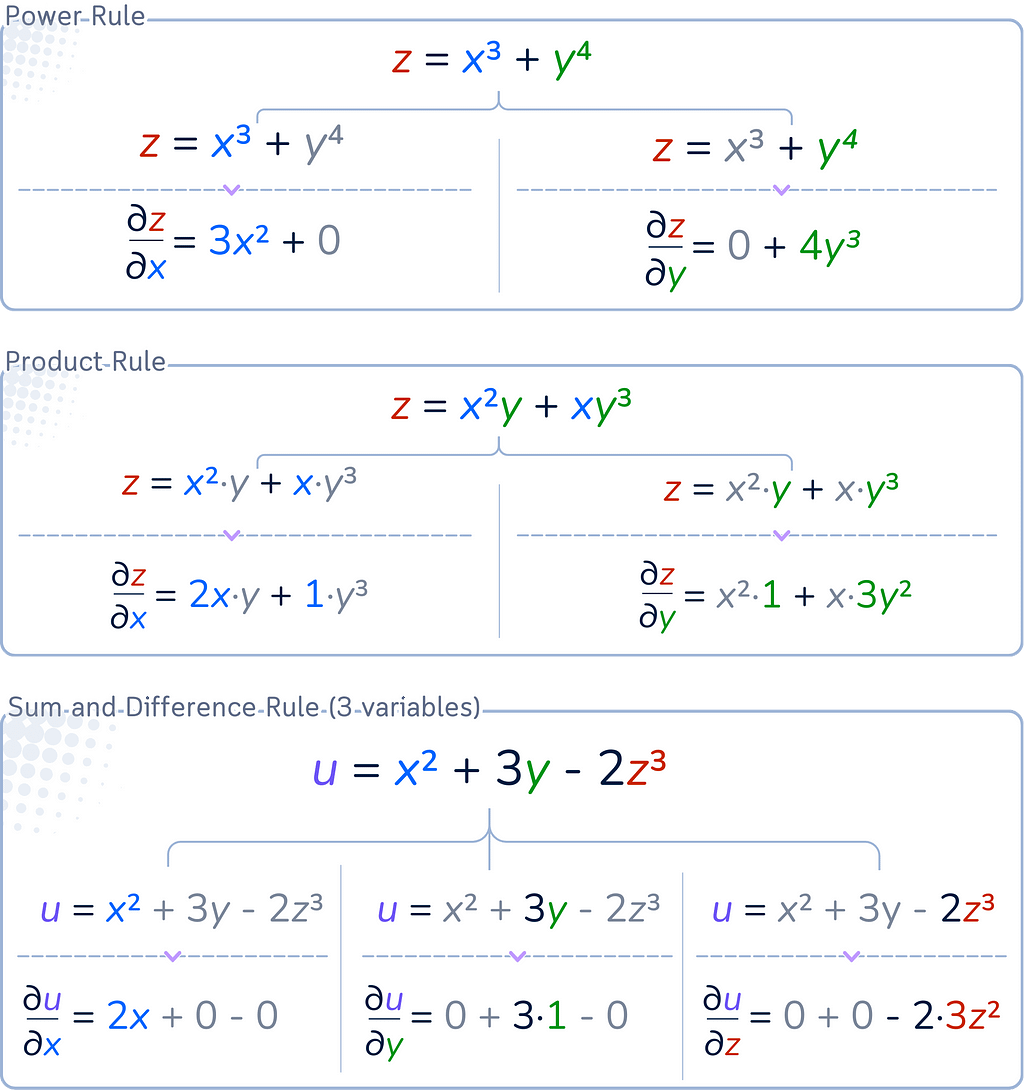

Before we see how to improve our network, let’s quickly review some math tools we’ll need:

Derivative

To optimize our neural network, we use gradients — a concept closely related to derivatives. Let’s review some fundamental derivative rules:

Partial Derivative

Let’s clarify the distinction between regular and partial derivatives:

Regular Derivative:

· Used when a function has only one variable

· Shows how much the function changes when its only variable changes

· Written as df/dx

Partial Derivative:

· Used when a function has more than one variable

· Shows how much the function changes when one variable changes, while keeping the other variables the same (as constant).

· Written as ∂f/∂x

Gradient Calculation and Backpropagation

Returning to our neural network, we need to determine how to adjust each weight and bias to minimize the error. We can do this using a method called backpropagation, which shows us how changing each value affects our network’s errors.

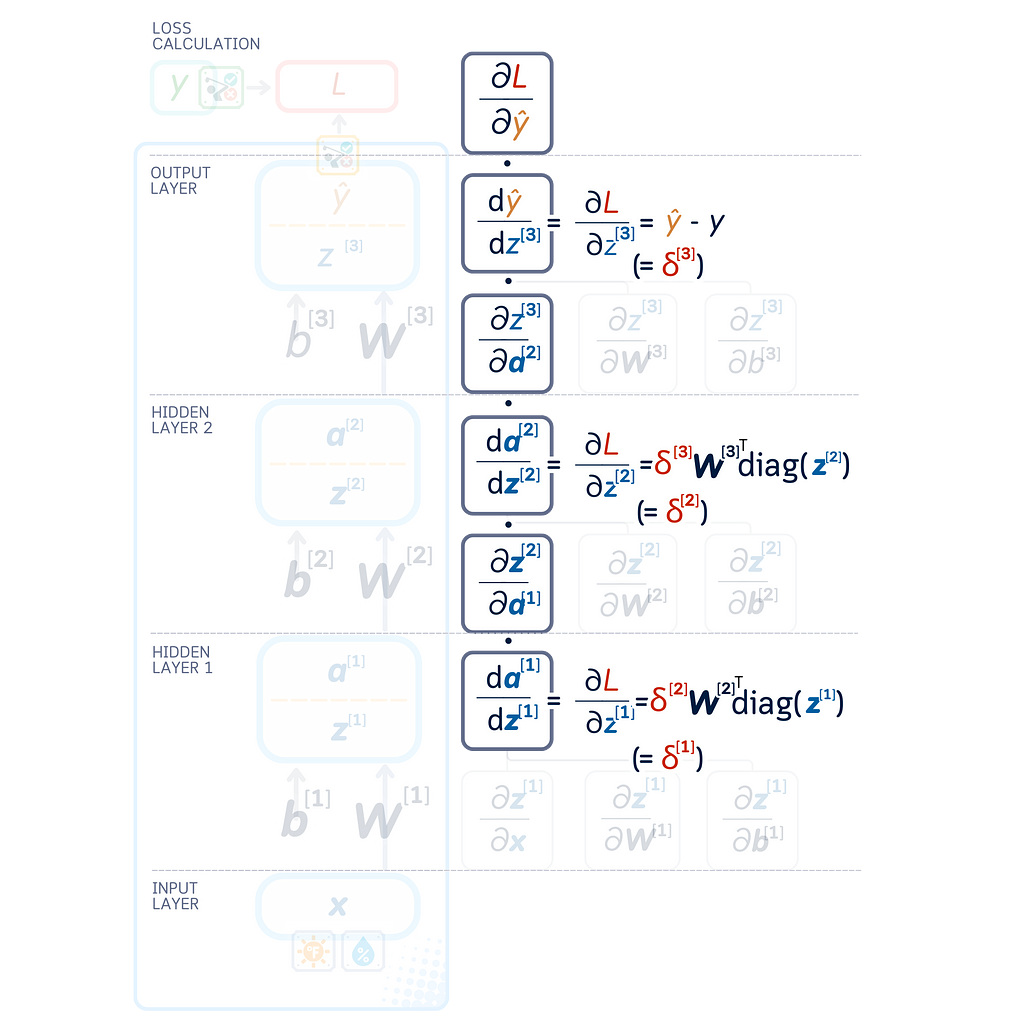

Since backpropagation works backwards through our network, let’s flip our diagram upside down to see how this works.

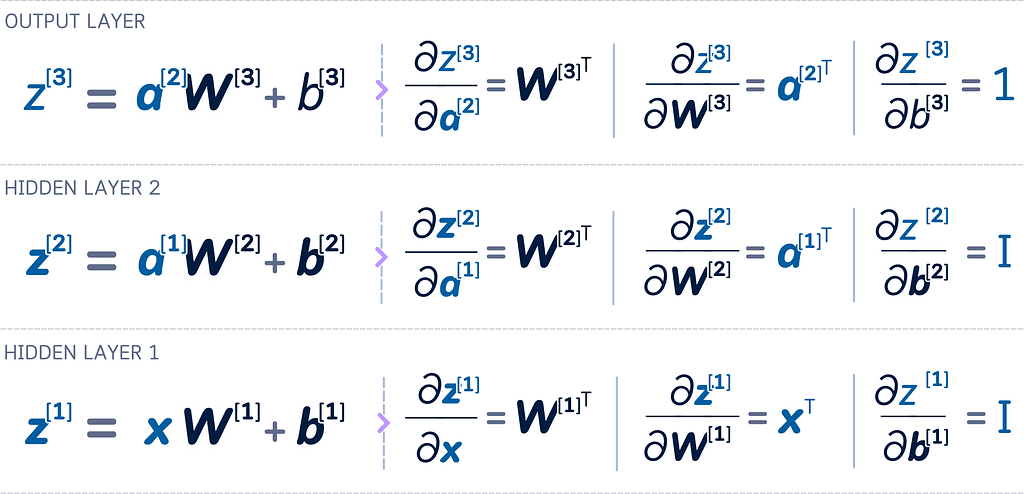

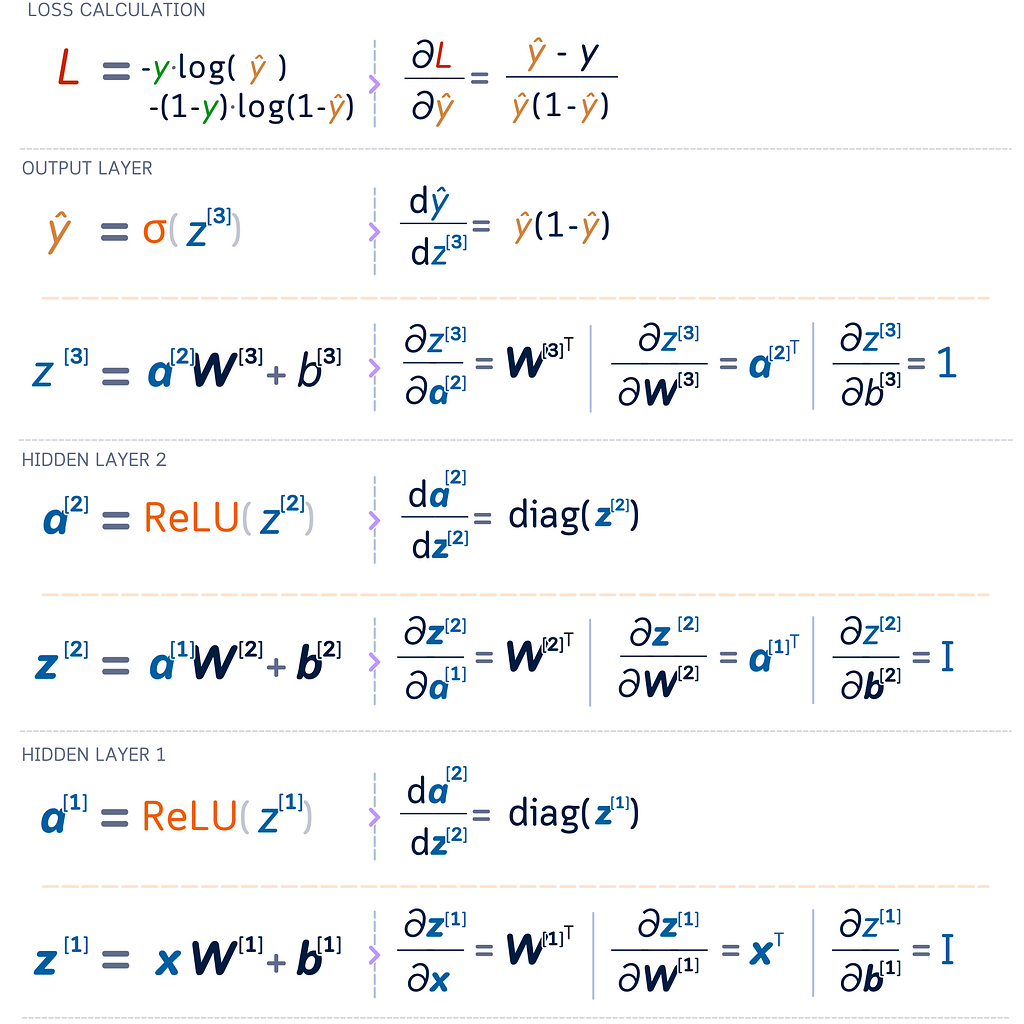

Matrix Rules for Networks

Since our network uses matrices (groups of weights and biases), we need special rules to calculate how changes affect our results. Here are two key matrix rules. For vectors v, u (size 1 × n) and matrices W, X (size n × n):

- Sum Rule:

∂(W + X)/∂W = I (Identity matrix, size n × n)

∂(u + v)/∂v = I (Identity matrix, size n × n) - Matrix-Vector Product Rule:

∂(vW)/∂W = vᵀ

∂(vW)/∂v = Wᵀ

Using these rules, we obtain:

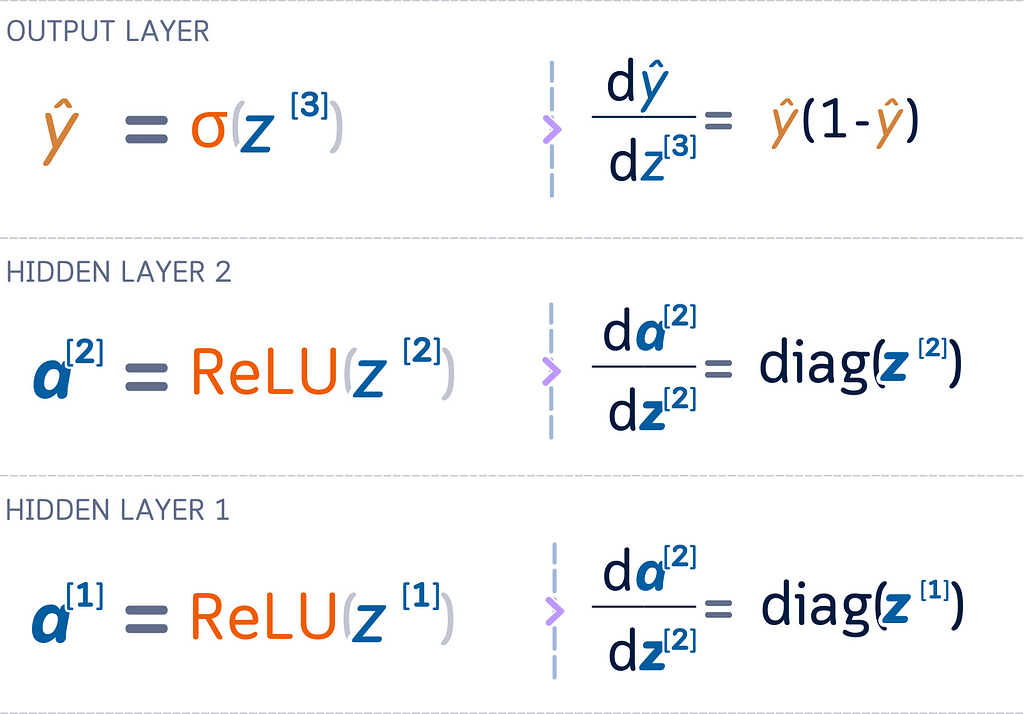

Activation Function Derivatives

Derivatives of ReLU

For vectors a and z (size 1 × n), where a = ReLU(z):

∂a/∂z = diag(z > 0)

Creates a diagonal matrix that shows: 1 if input was positive, 0 if input was zero or negative.

Derivatives of Sigmoid

For a = σ(z), where σ is the sigmoid function:

∂a/∂z = a ⊙ (1 - a)

This multiplies elements directly (⊙ means multiply each position).

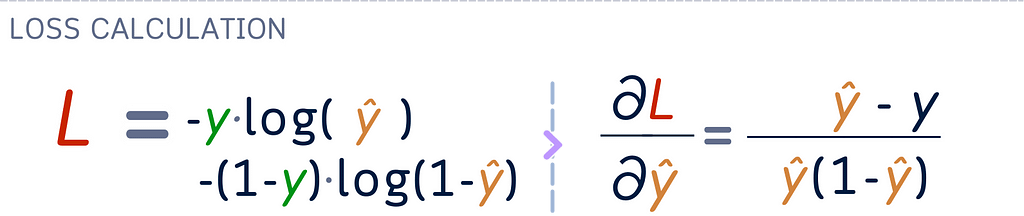

Binary Cross-Entropy Loss Derivative

For a single example with loss L = -[y log(ŷ) + (1-y) log(1-ŷ)]:

∂L/∂ŷ = -(y-ŷ) / [ŷ(1-ŷ)]

Up to this point, we can summarized all the partial derivatives as follows:

The following image shows all the partial derivatives that we’ve obtained so far:

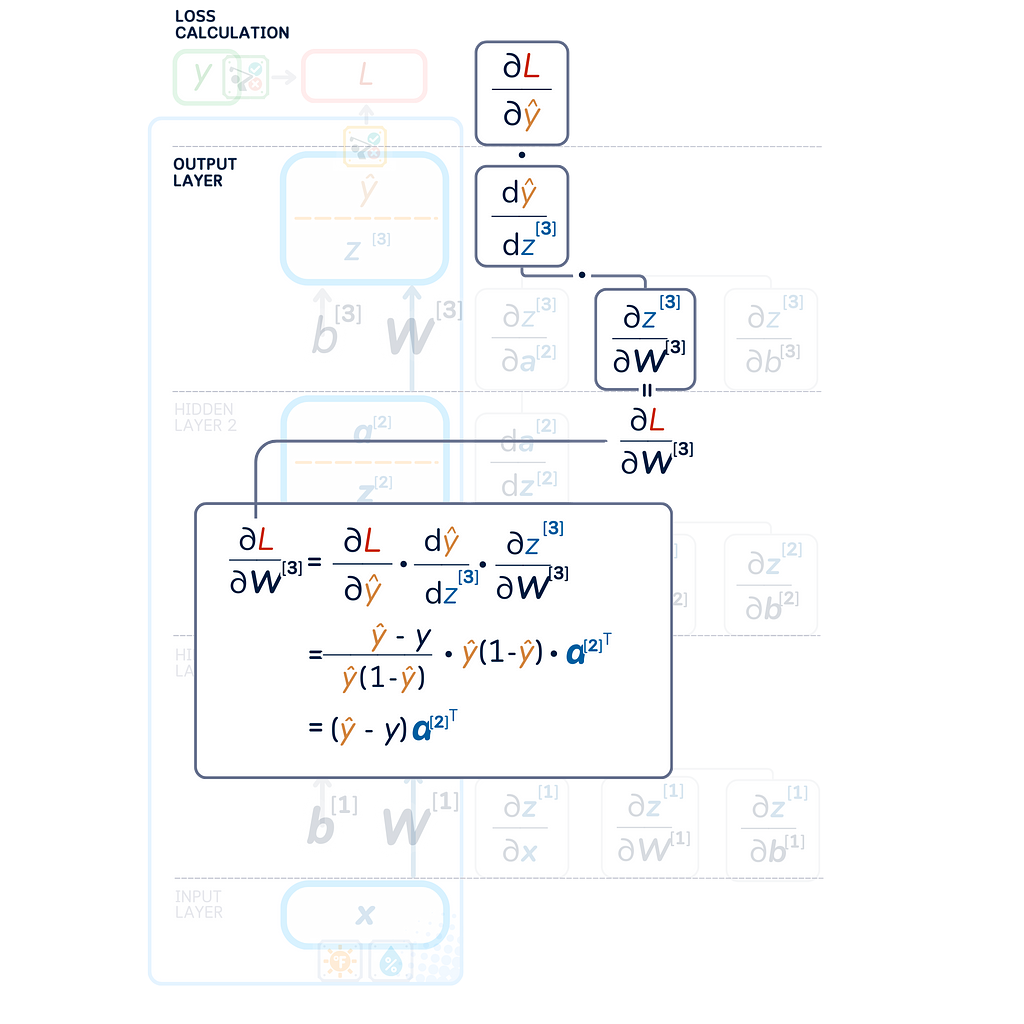

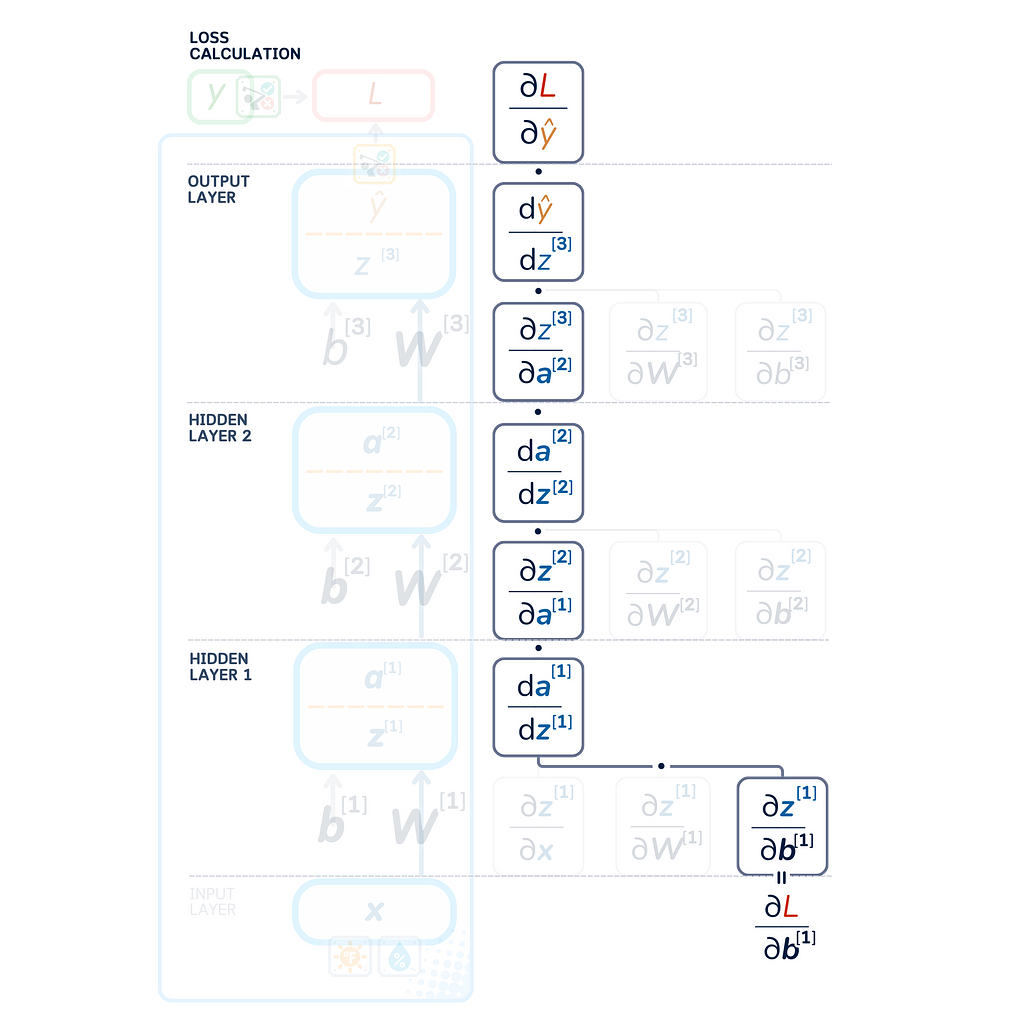

Chain Rule

In our network, changes flow through multiple steps: a weight affects its layer’s output, which affects the next layer, and so on until the final error. The chain rule tells us to multiply these step-by-step changes together to find how each weight and bias affects the final error.

Error Calculation

Rather than directly computing weight and bias derivatives, we first calculate layer errors ∂L/∂zˡ (the gradient with respect to pre-activation outputs). This makes it easier to then calculate how we should adjust the weights and biases in earlier layers.

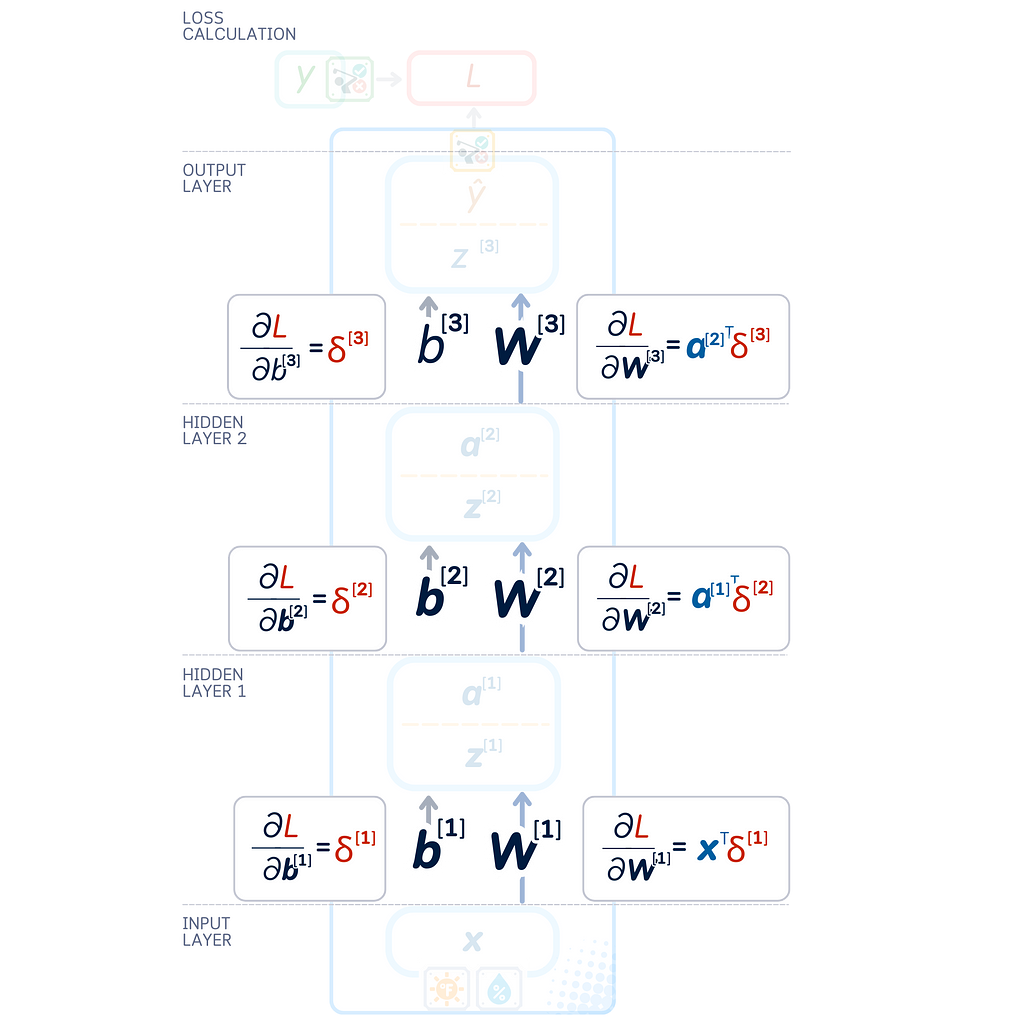

Weight gradients and bias gradients

Using these layer errors and the chain rule, we can express the weight and bias gradients as:

The gradients show us how each value in our network affects our network’s error. We then make small changes to these values to help our network make better predictions

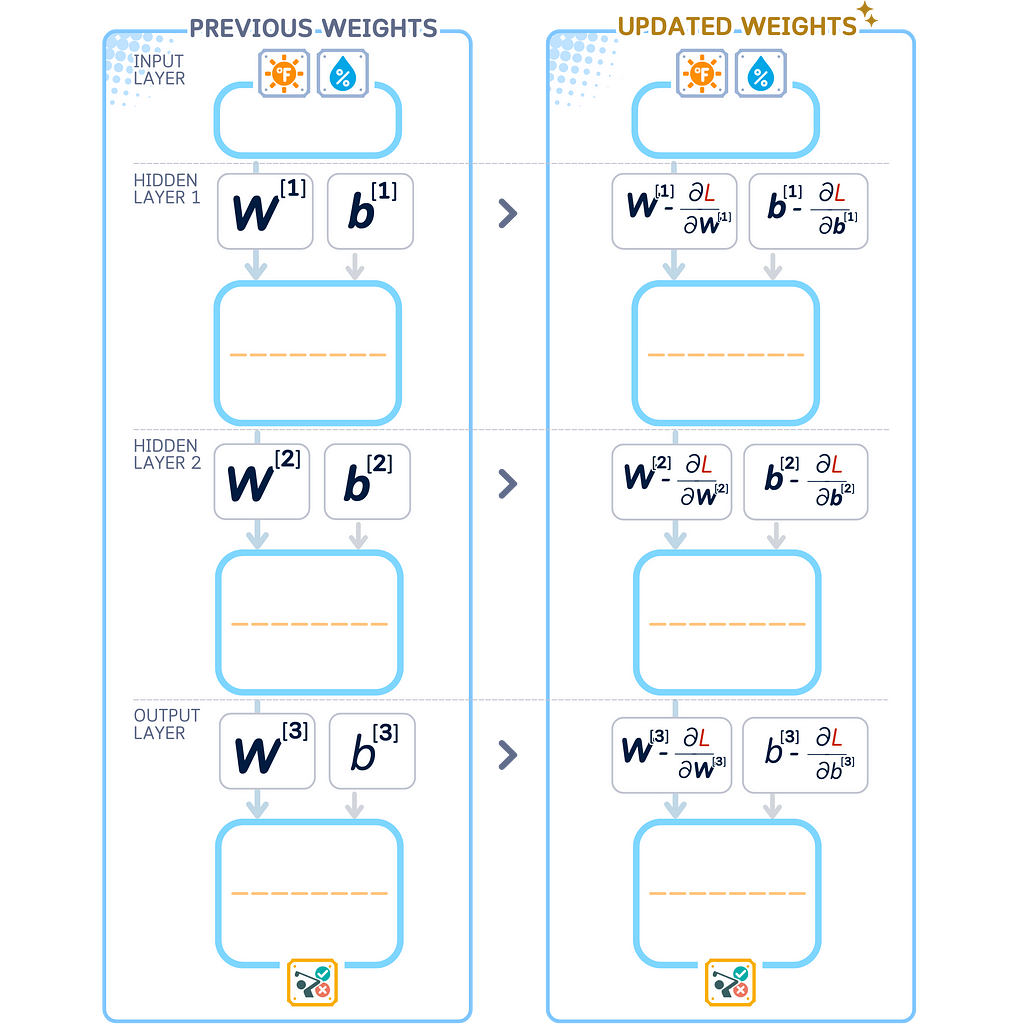

Step 4: Weight Update

Updating weights

Once we know how each weight and bias affects the error (the gradients), we improve our network by adjusting these values in the opposite direction of their gradients. This reduces the network’s error step by step.

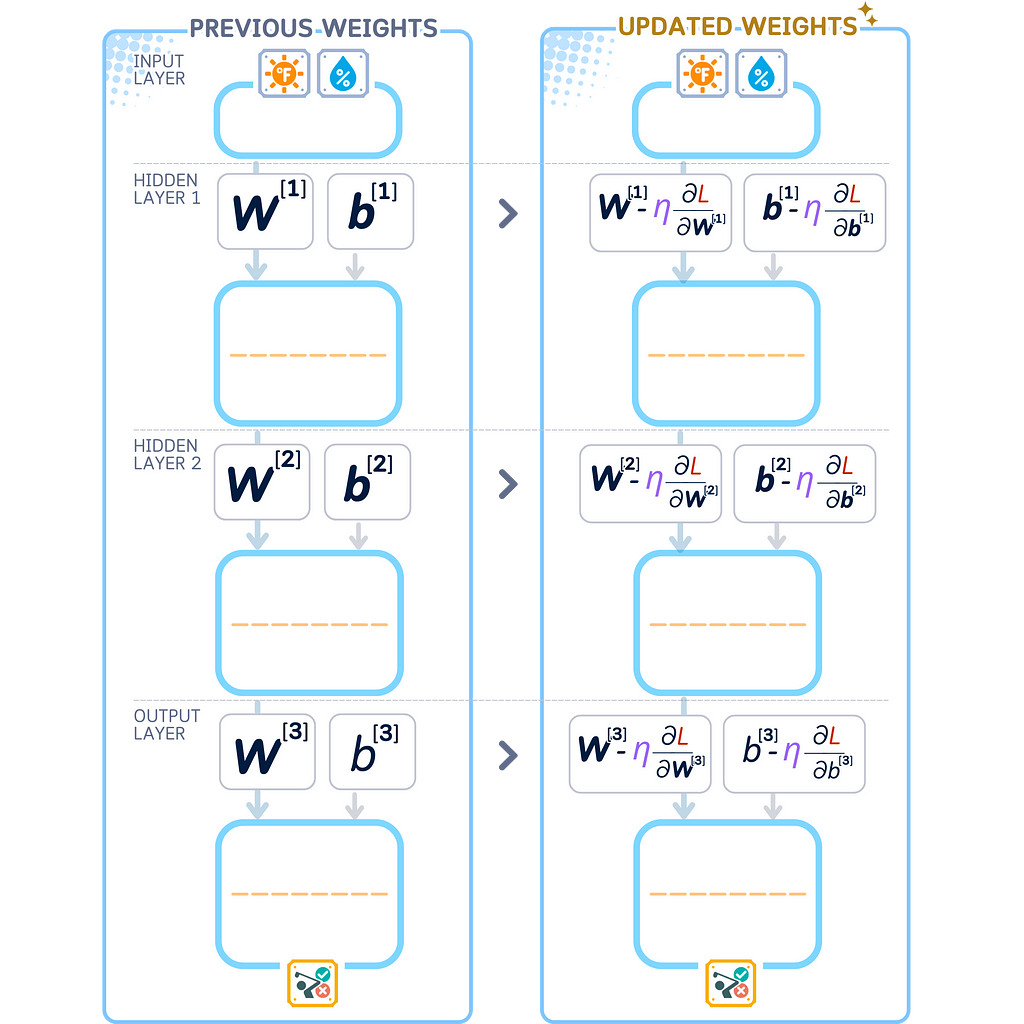

Learning Rate and Optimization

Instead of making big changes all at once, we make small, careful adjustments. We use a number called the learning rate (η) to control how much we change each value:

- If η is too big: The changes are too large and we might make things worse

- If η is too small: The changes are tiny and it takes too long to improve

This way of making small, controlled changes is called Stochastic Gradient Descent (SGD). We can write it as:

We just saw how our network learns from one example. The network repeats all these steps for each example in our dataset, getting better with each round of practice

Summary of Steps

Here are all the steps we covered to train our network on a single example:

Scaling to Full Datasets

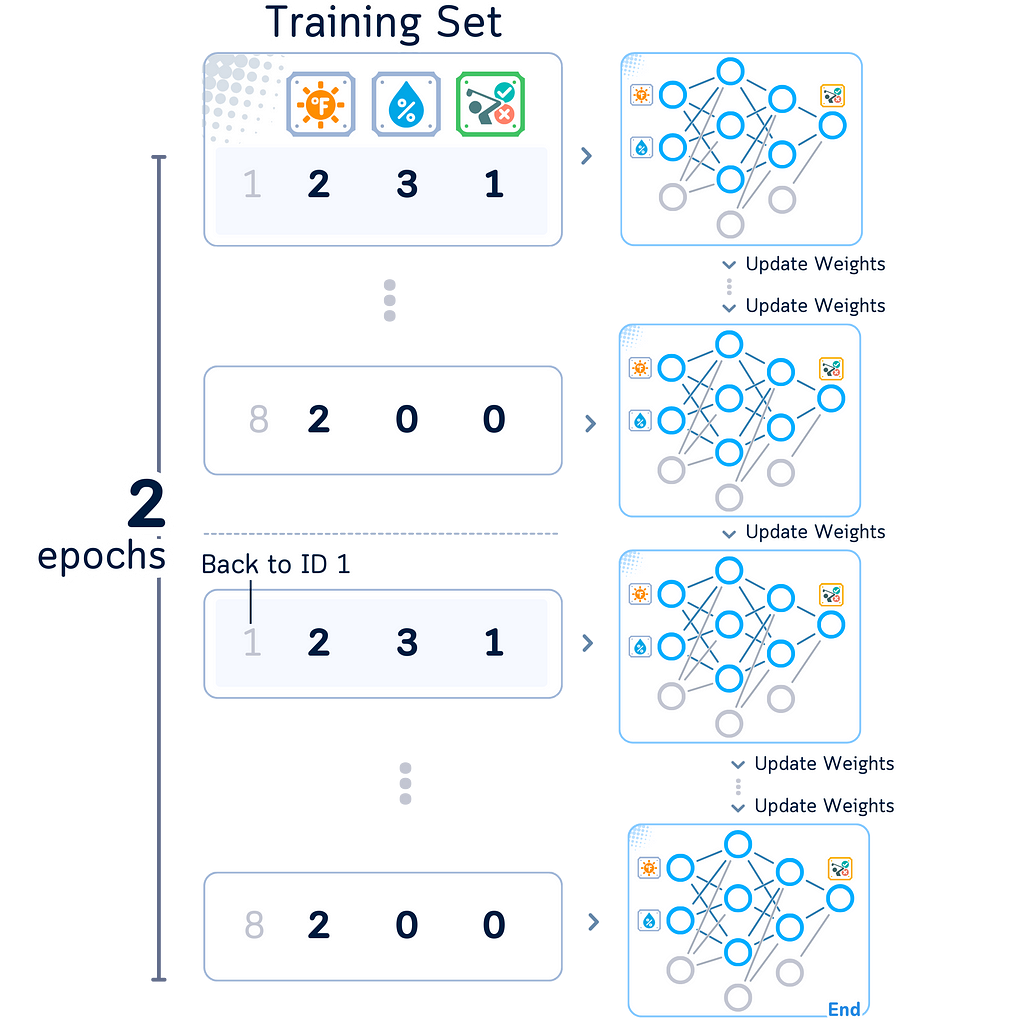

Epoch

Our network repeats these four steps — forward pass, loss calculation, backpropagation, and weight updates — for every example in our dataset. Going through all examples once is called an epoch.

The network usually needs to see all examples many times to get good at its task, even up to 1000 times. Each time through helps it learn the patterns better.

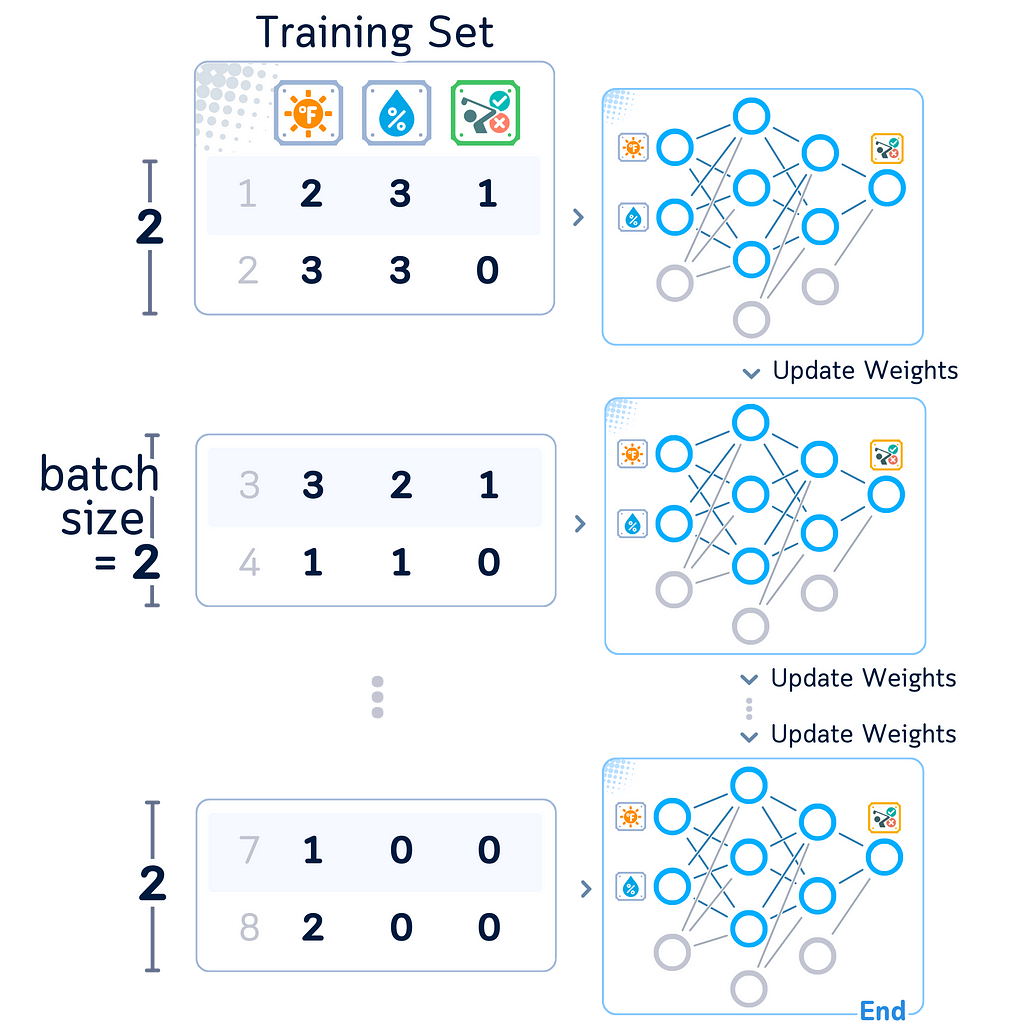

Batch

Instead of learning from one example at a time, our network learns from small groups of examples (called batches) at once. This has several benefits:

- Works faster

- Learns better patterns

- Makes steadier improvements

When working with batches, the network looks at all examples in the group before making changes. This gives better results than changing values after each single example.

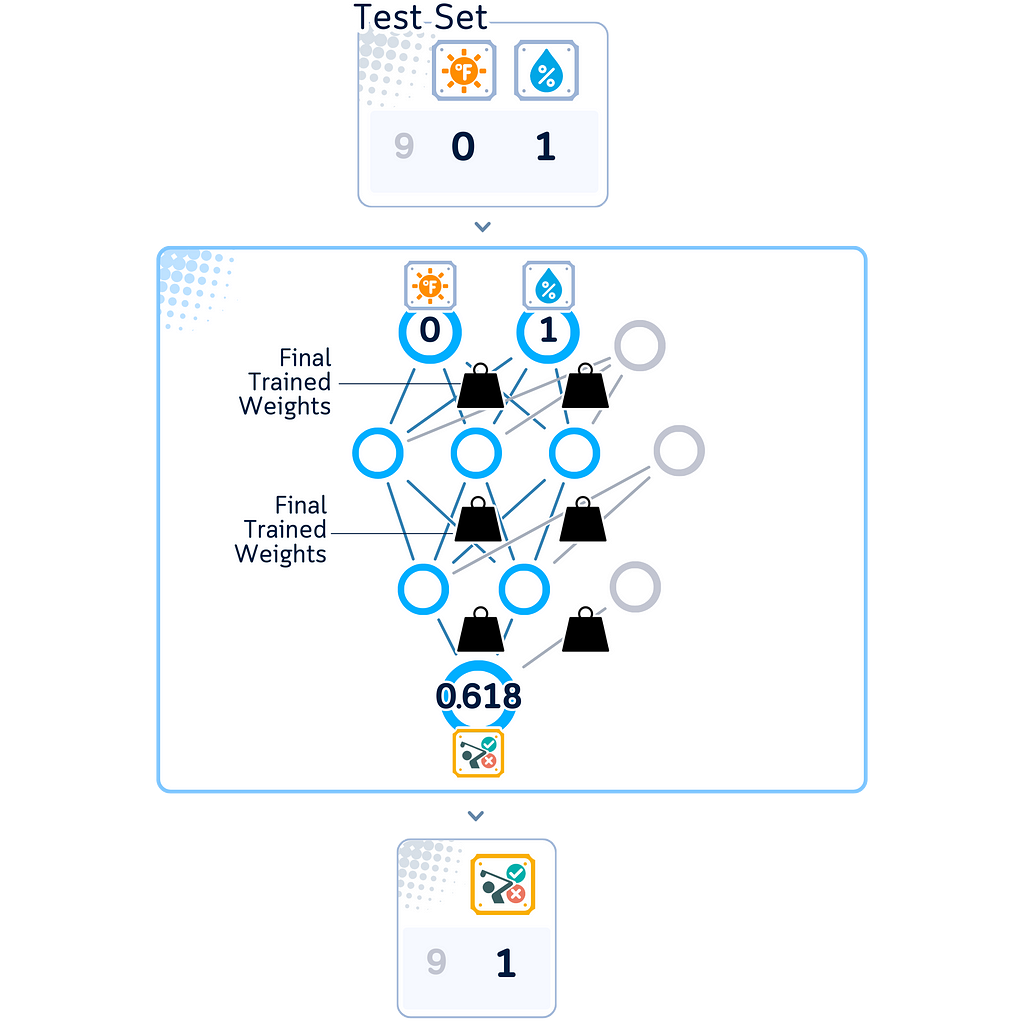

Testing Step

Preparing Fully-trained Neural Network

After training is done, our network is ready to make predictions on new examples it hasn’t seen before. It uses the same steps as training, but only needs to move forward through the network to make predictions.

Making Predictions

When processing new data:

1. Input layer takes in the new values

2. At each layer:

· Multiplies by weights and adds biases

· Applies the activation function

3. Output layer generates predictions (e.g., probabilities between 0 and 1 for binary classification)

Deterministic Nature of Neural Network

When our network sees the same input twice, it will give the same answer both times (as long as we haven’t changed its weights and biases). The network’s ability to handle new examples comes from its training, not from any randomness in making predictions.

Final Remarks

As our network practices with the examples again and again, it gets better at its task. It makes fewer mistakes over time, and its predictions get more accurate. This is how neural networks learn: look at examples, find mistakes, make small improvements, and repeat!

???? Multilayer Perceptron Classifier Code Summary

Now let’s see our neural network in action. Here’s some Python code that builds the network we’ve been talking about, using the same structure and rules we just learned.

import pandas as pd

import numpy as np

from sklearn.neural_network import MLPClassifier

from sklearn.metrics import accuracy_score

# Create our simple 2D dataset

df = pd.DataFrame({

'????': [0, 1, 1, 2, 3, 3, 2, 3, 0, 0, 1, 2, 3],

'????': [0, 0, 1, 0, 1, 2, 3, 3, 1, 2, 3, 2, 1],

'y': [1, -1, -1, -1, 1, 1, 1, -1, -1, -1, 1, 1, 1]

}, index=range(1, 14))

# Split into training and test sets

train_df, test_df = df.iloc[:8].copy(), df.iloc[8:].copy()

X_train, y_train = train_df[['????', '????']], train_df['y']

X_test, y_test = test_df[['????', '????']], test_df['y']

# Create and configure our neural network

mlp = MLPClassifier(

hidden_layer_sizes=(3, 2), # Creates a 2-3-2-1 architecture as discussed

activation='relu', # ReLU activation for hidden layers

solver='sgd', # Stochastic Gradient Descent optimizer

learning_rate_init=0.1, # Step size for weight updates

max_iter=1000, # Maximum number of epochs

momentum=0, # Disable momentum for pure SGD as discussed

random_state=42 # For reproducible results

)

# Train the model

mlp.fit(X_train, y_train)

# Make predictions and evaluate

y_pred = mlp.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)

print(f"Accuracy: {accuracy:.2f}")

Want to Learn More?

- Check out scikit-learn’s official documentation of MLPClassifier for more details and how to use it

- This article uses Python 3.7 and scikit-learn 1.5, but the core ideas work with other versions too

Image Attribution

All diagrams and technical illustrations in this article were created by the author using licensed design elements from Canva Pro under their commercial license terms.

Multilayer Perceptron, Explained: A Visual Guide with Mini 2D Dataset was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.