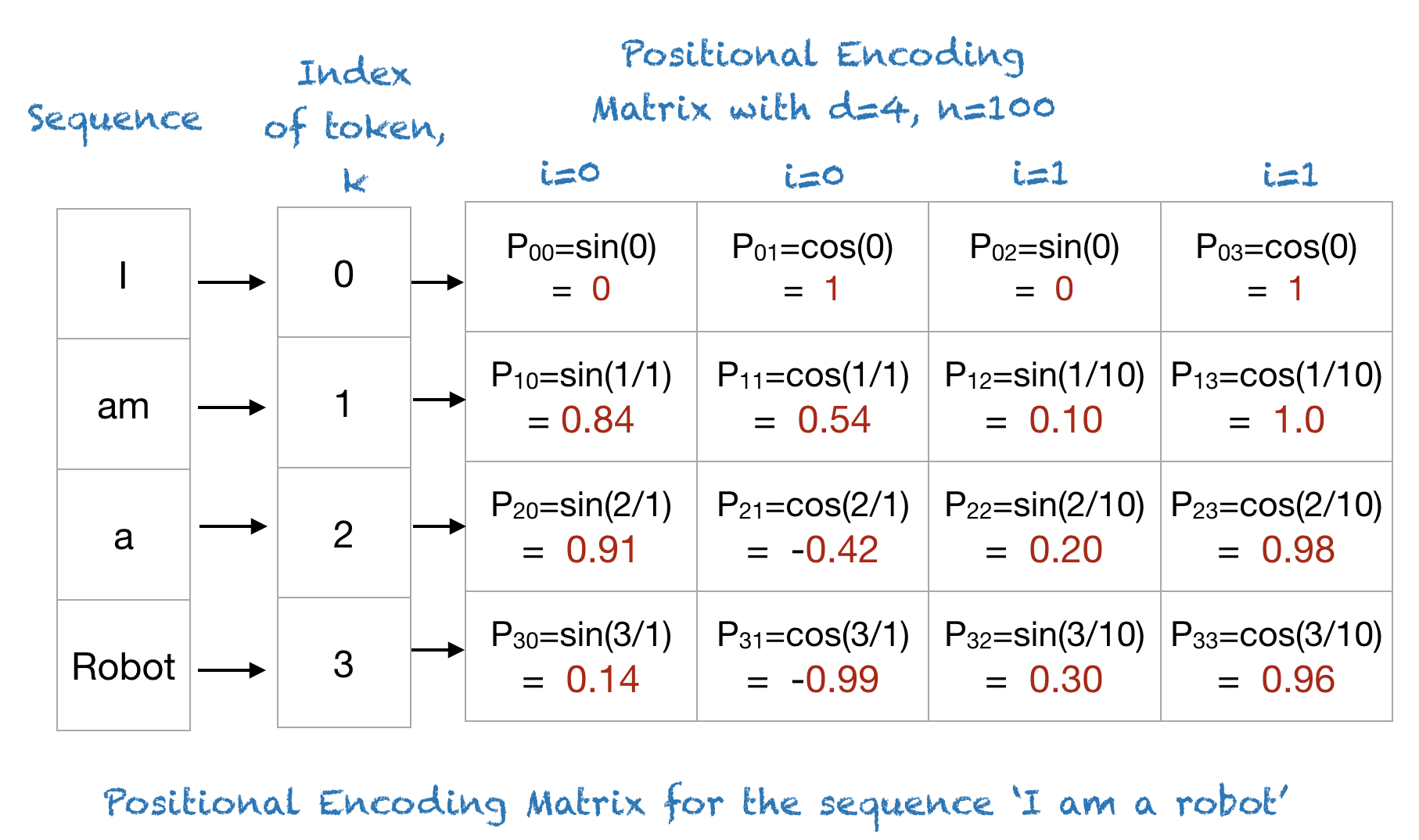

Decoding Positional Encoding: The Secret Sauce Behind Transformer Models

Positional encoding is a critical component of the Transformer architecture. Unlike recurrent models (RNNs or LSTMs), which process…
