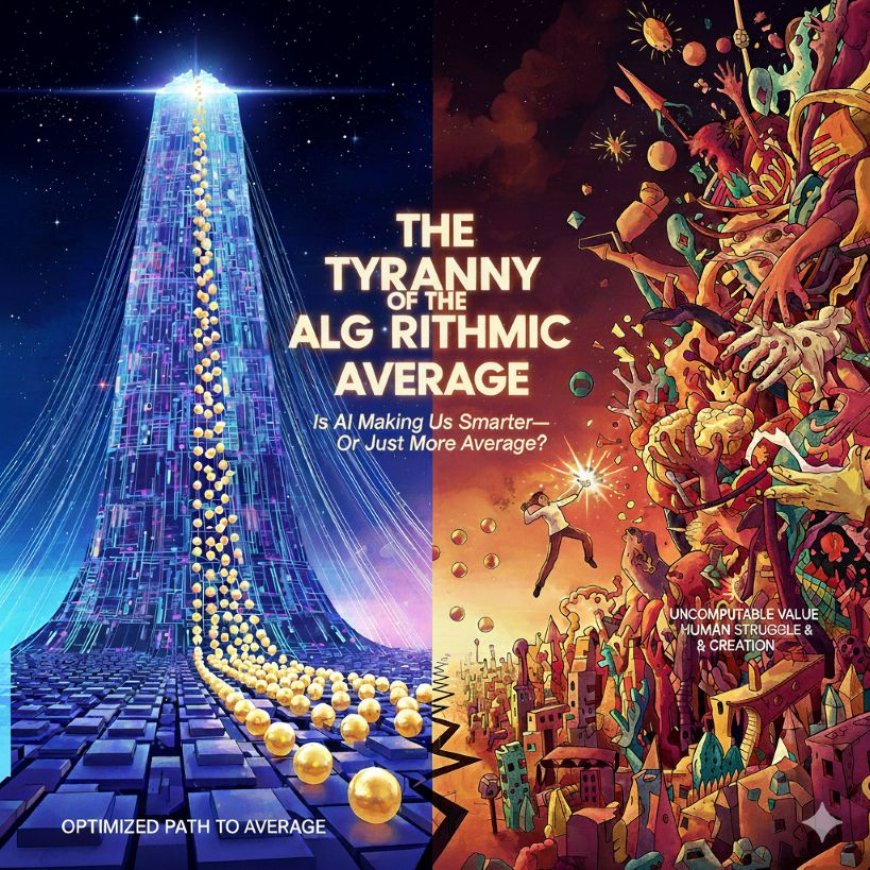

Is AI Making Us Smarter—Or Just More Average?

The common belief is that AI drives exponential progress. We challenge that: excessive reliance on AI's 'perfect' answers risks optimizing for the superficial average, stifling human creativity, deep wisdom, and radical, low-probability breakthroughs.

In this article we offer a “food for thought” critique of the conventional AI optimism common in the technology space (for example, Sam Altman's blog, which discusses super-exponential growth and future benefits such as curing cancer).

We challenge articles that promote the "common" wisdom about, or desire for, AI as an unbounded, super-exponential economic and intellectual force.

The Popular Premise: AI as the Ultimate Accelerator

Many popular, well-read blog articles from leading AI figures (like those from OpenAI, DeepMind, or venture capitalists) promote a core narrative: The future of AI is defined by inevitable, super-exponential scaling and a corresponding economic and intellectual payoff.

A common conclusion in these articles is:

"AI's capabilities are growing so fast that it will soon automate not just tasks, but entire processes of decision-making and innovation, leading to a new era of vast prosperity and solved 'hard' problems (curing disease, climate modeling, etc.). The main challenge is scaling the compute/data and ensuring its safety/alignment."

This premise often emphasizes the sheer magnitude of AI's data processing, speed, and cost reduction, positioning it as an unstoppable force that humanity must simply prepare to ride.

The Contrasting Viewpoint: The Tyranny of the Algorithmic Average

This critical perspective challenges the idea that boundless scaling and automation inherently lead to wisdom, true innovation, or robust, resilient systems. Instead, it argues that AI's current trajectory risks optimizing for a superficial average, thus stifling the genuinely human friction and struggle necessary for radical breakthroughs.

The Argument: The World is Not a Data Problem

The conventional wisdom frames complex problems (disease, poverty, innovation) as "data problems" for which a powerful enough AI will simply compute a solution. The anticipatory hype around quantum computing, cast as our eventual savior for its problem-solving abilities, reinforces this framing. The critical view argues that the hardest challenges are not about finding the optimal answer within a defined space, but about redefining the question itself.

| Conventional Wisdom (Optimistic) | Critical Viewpoint (Skeptical/Contrasting) |
| --- | --- |
| Scaling is all you need: Increasing compute, data, and parameters yields continuously better, more general intelligence. | The 'Scaling Ceiling' of Meaning: Exponential scale only optimizes performance within an existing framework; it does not guarantee a conceptual leap outside of it. |
| AI will find novel solutions: It will discover patterns human experts miss, leading to radical breakthroughs. | Optimization vs. Invention: AI is a master optimizer of current patterns. True invention requires rejection of the best current pattern, a form of conceptual anarchy AI is structurally ill-equipped for. |
| Automation drives efficiency: Eliminating human tasks is a net gain for productivity and prosperity. | The Loss of Productive Friction: Removing the necessary human struggle, failure, and frustration involved in "the long way around" eliminates the deep understanding that leads to resilient, unexpected insight. |

Three Deep-Dive Challenges to the Conventional Wisdom:

1. The Paradox of the "Perfect" Algorithmic Answer

The current generation of AI excels at providing the most probable or most efficient answer based on its training data. This is the Algorithmic Average. When an AI helps a doctor, a writer, or an engineer, it nudges them toward the most well-trodden, high-utility path.

- The Problem: The truly revolutionary breakthroughs in human history—from Copernicus to Einstein to the inventor of the printing press—were not the most probable answers. They were low-probability events that required a willingness to be wrong in the eyes of conventional data. An AI, by design, resists low-probability conclusions, creating a feedback loop that flattens the intellectual landscape toward comfortable consensus.

- The Deeper Engagement: Are we building systems that find the fastest way to get to a known good answer, while inadvertently making it impossible to discover the unknown great answer?
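The "Algorithmic Average" can be illustrated with a toy sketch. The answer labels and probabilities below are invented for illustration, and `rescale` is a hypothetical helper, not any particular model's API. The sketch assumes a system that either picks the single most probable answer or samples with a temperature below 1; under that assumption, the unlikely answer is squeezed out further.

```python
import math

def rescale(probs, temperature):
    """Re-weight a probability distribution by a sampling temperature."""
    # Dividing log-probabilities by the temperature sharpens the
    # distribution when temperature < 1 and flattens it when > 1.
    logits = {k: math.log(p) / temperature for k, p in probs.items()}
    peak = max(logits.values())  # subtract the max for numerical stability
    weights = {k: math.exp(v - peak) for k, v in logits.items()}
    total = sum(weights.values())
    return {k: w / total for k, w in weights.items()}

# Hypothetical answer distribution, invented for this illustration.
answers = {"consensus": 0.90, "variation": 0.09, "heresy": 0.01}

# Greedy selection always returns the most probable option.
greedy = max(answers, key=answers.get)

# Sampling at temperature 0.5 makes the rare option rarer still.
cooled = rescale(answers, 0.5)

print("greedy choice:", greedy)
print("P(heresy) before:", answers["heresy"])
print("P(heresy) after cooling:", cooled["heresy"])
```

This says nothing about whether a low-probability answer is any good. It only shows that a selection rule favoring the mode will rarely surface one.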

2. The Uncomputable Value of "In-The-Loop" Humanity

Optimists envision a future where humans are simply "approvers" or "editors" of AI-generated work. The critical view is that this ignores the role of embodiment, risk, and suffering in the creation of wisdom.

- The Problem: An AI can process a billion pages of medical research, but it cannot know what it is like to sit with a patient and feel the moral weight of a diagnostic decision. The friction of human accountability, ethical struggle, and the possibility of personal failure is not a bug to be removed, but a crucial feature that defines robust, wise, and trusted decision-making. By reducing the human to an overseer, we risk turning our most complex societal systems (law, governance, medicine) over to an inhuman certainty that has never had to live with the consequences of its errors.

3. The Fragility of an AI-Generated Commons (Model Collapse)

The conventional wisdom assumes an endless supply of high-quality, human-generated data to fuel the scaling laws.

- The Problem: As AI-generated content (news articles, code, research summaries) saturates the internet, future AI models will increasingly be trained on the outputs of previous AI models. The resulting degradation, known as "Model Collapse," is not just a technical issue; it's a profound cultural risk. AI-generated content carries the subtle biases, limitations, and conceptual boundaries of the model that produced it. Training new models on this synthetic data can lead to entropic degradation, where the richness and nuanced representation of the real world fades with each successive generation. The accelerating path to prosperity might be an accelerating path to intellectual inbreeding.
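A minimal sketch of this feedback loop, under strong simplifying assumptions: the "model" here is just a Gaussian fitted to a small sample, and each generation is trained only on samples drawn from the previous generation's fit. The function name `simulate_collapse` and its parameters are hypothetical, chosen for this illustration. It is not how any production model is trained, but it shows how variety can drain away when a system learns only from its own output.

```python
import random
import statistics

def simulate_collapse(generations=200, sample_size=10, seed=0):
    """Track the spread of a Gaussian refitted to its own samples."""
    rng = random.Random(seed)
    mean, std = 0.0, 1.0  # generation 0 stands in for the "real" data
    stds = [std]
    for _ in range(generations):
        # The new generation sees only synthetic data from the last fit.
        samples = [rng.gauss(mean, std) for _ in range(sample_size)]
        # Refit by maximum likelihood (population standard deviation).
        mean = statistics.fmean(samples)
        std = statistics.pstdev(samples)
        stds.append(std)
    return stds

stds = simulate_collapse()
print("initial spread:", stds[0])
print("final spread:  ", stds[-1])
```

In this toy setup the fitted spread drifts toward zero over many generations, because each refit on a small sample tends to underestimate the variance and the errors compound. Larger samples or a steady supply of fresh real data would slow the effect.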

Conclusion

The optimistic narrative of AI often sounds like a promise of effortless, exponential progress. The contrasting perspective urges the reader to pause and consider the hidden costs: the loss of the conceptual struggle, the diminishing value of deep human-in-the-loop accountability, and the long-term intellectual stagnation that results from perpetually optimizing for the algorithmic average.

The most powerful challenge is not "Can AI do this?" but "What will happen to us when AI does this perfectly?"

Written and published by Kevin Marshall with the help of AI models (AI Quantum Intelligence).